So, how is our Pokedex going to “know” what Pokemon is in an image? How are we going to describe each Pokemon? Are we going to characterize the color of the Pokemon? The texture? Or the shape?

Well, do you remember playing Who’s that Pokemon as a kid?

You were able to identify the Pokemon based only on its outline and silhouette.

We are going to apply the same principles in this post and quantify the outline of Pokemon using shape descriptors.

You might already be familiar with some shape descriptors, such as Hu moments. Today I am going to introduce you to a more powerful shape descriptor: Zernike moments, based on Zernike polynomials that are orthogonal over the unit disk.

Sound complicated?

Trust me, it’s really not. With just a few lines of code I’ll show you how to compute Zernike moments with ease.

Previous Posts

This post is part of an on-going series of blog posts on how to build a real-life Pokedex using Python, OpenCV, and computer vision and image processing techniques. If this is the first post in the series that you are reading, go ahead and read through it (there is a lot of awesome content in here on how to utilize shape descriptors), but then go back to the previous posts for some added context.

- Step 1: Building a Pokedex in Python: Getting Started (Step 1 of 6)

- Step 2: Building a Pokedex in Python: Scraping the Pokemon Sprites (Step 2 of 6)

Building a Pokedex in Python: Indexing our Sprites using Shape Descriptors

At this point, we already have our database of Pokemon sprite images. We gathered, scraped, and downloaded our sprites, but now we need to quantify them in terms of their outline (i.e. their shape).

Remember playing “Who’s that Pokemon?” as a kid? That’s essentially what our shape descriptors will be doing for us.

For those who didn’t watch Pokemon (or maybe need their memory jogged), the image at the top of this post is a screenshot from the Pokemon TV show. Before going to commercial break, a screen such as this one would pop up with the outline of the Pokemon. The goal was to guess the name of the Pokemon based on the outline alone.

This is essentially what our Pokedex will be doing — playing Who’s that Pokemon, but in an automated fashion. And with computer vision and image processing techniques.

Zernike Moments

Before diving into a lot of code, let’s first have a quick review of Zernike moments.

Image moments are used to describe objects in an image. Using image moments you can calculate values such as the area of the object, the centroid (the center of the object, in terms of x, y coordinates), and information regarding how the object is rotated. Normally, we calculate image moments based on the contour or outline of an image, but this is not a requirement.
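To make the idea of image moments concrete, here is a minimal sketch that computes the area and centroid of a small binary mask from its raw moments. It uses plain NumPy rather than OpenCV, and the helper name raw_moment and the toy mask are my own illustration, not code from this series.

```python
import numpy as np

def raw_moment(mask, p, q):
    """Raw image moment M_pq: sum of x^p * y^q * pixel value over the mask."""
    ys, xs = np.nonzero(mask)
    return float(np.sum((xs ** p) * (ys ** q) * mask[ys, xs]))

# toy binary mask (hypothetical data): a rectangle 4 rows tall and 6 columns wide
mask = np.zeros((10, 10), dtype="uint8")
mask[2:6, 3:9] = 1

area = raw_moment(mask, 0, 0)         # M00 is the area of a binary mask
cx = raw_moment(mask, 1, 0) / area    # centroid x = M10 / M00
cy = raw_moment(mask, 0, 1) / area    # centroid y = M01 / M00

print(area, (cx, cy))  # 24.0 (5.5, 3.5)
```

The same quantities come out of higher-level moment functions; the point here is only that area and centroid are sums over pixel coordinates.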

OpenCV provides the HuMoments function, which can be used to characterize the structure and shape of an object. However, a more powerful shape descriptor can be found in the mahotas package: zernike_moments. Similar to Hu moments, Zernike moments are used to describe the shape of an object; however, since the Zernike polynomials are orthogonal to each other, there is no redundancy of information between the moments.

One caveat to look out for when utilizing Zernike moments for shape description is the scaling and translation of the object in the image. Depending on where the object is positioned in the image, your Zernike moments will be drastically different. Similarly, depending on how large or small the object is (i.e. how it is scaled) in the image, your Zernike moments will not be identical. However, the magnitudes of the Zernike moments are independent of the rotation of the object, which is an extremely nice property when working with shape descriptors.
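The rotation and translation behaviour can be checked numerically. The sketch below is a simplified, direct-summation Zernike moment written in NumPy for illustration only; it is not the mahotas implementation, and the function names, the 21x21 grid, and the L-shaped test object are all assumptions of mine. It compares a shape against a copy rotated by 90 degrees and a copy shifted by three pixels.

```python
import math
import numpy as np

def radial_poly(n, m, rho):
    """Zernike radial polynomial R_n^|m| evaluated at each value of rho."""
    m = abs(m)
    out = np.zeros_like(rho)
    for k in range((n - m) // 2 + 1):
        coef = ((-1) ** k * math.factorial(n - k)
                / (math.factorial(k)
                   * math.factorial((n + m) // 2 - k)
                   * math.factorial((n - m) // 2 - k)))
        out += coef * rho ** (n - 2 * k)
    return out

def zernike_magnitude(image, n, m):
    """|Z_nm| of a square image mapped onto the unit disk (n - |m| must be even)."""
    size = image.shape[0]
    half = (size - 1) / 2.0
    coords = (np.arange(size) - half) / half   # symmetric grid spanning [-1, 1]
    x, y = np.meshgrid(coords, coords)
    rho = np.sqrt(x ** 2 + y ** 2)
    theta = np.arctan2(y, x)
    inside = rho <= 1.0                        # only pixels inside the unit disk count
    basis = radial_poly(n, m, rho) * np.exp(-1j * m * theta)
    return abs((n + 1) / np.pi * np.sum(image[inside] * basis[inside]))

# hypothetical test object: an L shape that stays well inside the disk
shape = np.zeros((21, 21))
shape[5:15, 8:11] = 1.0
shape[12:15, 8:14] = 1.0

rotated = np.rot90(shape)             # same object, rotated 90 degrees about the center
shifted = np.roll(shape, 3, axis=1)   # same object, moved three pixels to the right

for n, m in [(2, 0), (2, 2), (3, 1), (4, 2)]:
    print((n, m),
          zernike_magnitude(shape, n, m),
          zernike_magnitude(rotated, n, m),
          zernike_magnitude(shifted, n, m))
```

For each (n, m) pair the first two magnitudes agree up to floating point error, while the shifted copy generally produces different values, which is why the object has to be normalized for position and size first.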

In order to avoid descriptors with different values based on the translation and scaling of the image, we normally first perform segmentation. That is, we segment the foreground (the object in the image we are interested in) from the background (the “noise”, or the part of the image we do not want to describe). Once we have the segmentation, we can form a tight bounding box around the object and crop it out, obtaining translation invariance.

Finally, we can resize the object to a constant NxM pixels, obtaining scale invariance.
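As a rough illustration of the crop-then-resize idea, the sketch below normalizes a binary mask with NumPy only. The function name normalize_mask, the nearest-neighbour resize, and the 8x8 target size are assumptions chosen to keep the example short; in practice you would likely use an OpenCV resize instead. It also assumes the mask contains at least one foreground pixel.

```python
import numpy as np

def normalize_mask(mask, size=(64, 64)):
    """Crop a binary mask to its tight bounding box, then resize to a fixed size."""
    ys, xs = np.nonzero(mask)                    # coordinates of foreground pixels
    crop = mask[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    out_h, out_w = size
    # nearest-neighbour resize: pick a source row/column for every output row/column
    rows = np.arange(out_h) * crop.shape[0] // out_h
    cols = np.arange(out_w) * crop.shape[1] // out_w
    return crop[np.ix_(rows, cols)]

# hypothetical object: a small L shape
small = np.array([[1, 0, 0, 0],
                  [1, 0, 0, 0],
                  [1, 0, 0, 0],
                  [1, 1, 1, 1]], dtype="uint8") * 255

# the same object at a different position and three times the size
a = np.zeros((20, 20), dtype="uint8")
a[2:6, 3:7] = small
b = np.zeros((40, 40), dtype="uint8")
b[20:32, 10:22] = np.kron(small, np.ones((3, 3), dtype="uint8"))

print(np.array_equal(normalize_mask(a, (8, 8)), normalize_mask(b, (8, 8))))  # True
```

After normalization the two masks are identical, so any descriptor computed from them will be identical too.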

From there, it is straightforward to apply Zernike moments to characterize the shape of the object.

As we will see later in this series of blog posts, I will normalize for scale and translation in this way before applying Zernike moments.

The Zernike Descriptor

Alright, enough overview. Let’s get our hands dirty and write some code.

    # import the necessary packages
    import mahotas

    class ZernikeMoments:
        def __init__(self, radius):
            # store the size of the radius that will be
            # used when computing moments
            self.radius = radius

        def describe(self, image):
            # return the Zernike moments for the image
            return mahotas.features.zernike_moments(image, self.radius)

As you may know from the Hobbits and Histograms post, I tend to like to define my image descriptors as classes rather than functions. The reason for this is that you rarely ever extract features from a single image alone. Instead, you extract features from a dataset of images. And you are likely utilizing the exact same parameters for the descriptors from image to image.

For example, it wouldn’t make sense to extract a grayscale histogram with 32 bins from image #1 and then a grayscale histogram with 16 bins from image #2, if your intent is to compare them. Instead, you utilize identical parameters to ensure you have a consistent representation across your entire dataset.

That said, let’s take this code apart:

- Line 2: Here we are importing the mahotas package, which contains many useful image processing functions. This package also contains the implementation of our Zernike moments.

- Line 4: Let’s define a class for our descriptor. We’ll call it ZernikeMoments.

- Lines 5-8: We need a constructor for our ZernikeMoments class. It will take only a single parameter: the radius of the polynomial in pixels. The larger the radius, the more pixels will be included in the computation. This is an important parameter and you’ll likely have to tune it and play around with it to obtain adequately performing results if you use Zernike moments outside this series of blog posts.

- Lines 10-12: Here we define the describe method, which quantifies our image. This method requires an image to be described, and then calls the mahotas implementation of zernike_moments to compute the moments with the specified radius, supplied in Line 5.

Overall, this isn’t much code. It’s mostly just a wrapper around the mahotas implementation of zernike_moments. But as I said, I like to define my descriptors as classes rather than functions to ensure the consistent use of parameters.

Next up, we’ll index our dataset by quantifying each and every Pokemon sprite in terms of shape.

Indexing Our Pokemon Sprites

Now that we have our shape descriptor defined, we need to apply it to every Pokemon sprite in our database. This is a fairly straightforward process so I’ll let the code do most of the explaining. Let’s open up our favorite editor, create a file named index.py, and get to work:

    # import the necessary packages
    from pyimagesearch.zernikemoments import ZernikeMoments
    from imutils.paths import list_images
    import numpy as np
    import argparse
    import pickle
    import imutils
    import cv2

    # construct the argument parser and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-s", "--sprites", required = True,
        help = "Path where the sprites will be stored")
    ap.add_argument("-i", "--index", required = True,
        help = "Path to where the index file will be stored")
    args = vars(ap.parse_args())

    # initialize our descriptor (Zernike Moments with a radius
    # of 21 used to characterize the shape of our pokemon) and
    # our index dictionary
    desc = ZernikeMoments(21)
    index = {}

Lines 2-8 handle importing the packages we will need. I put our ZernikeMoments class in the pyimagesearch sub-module for organizational sake. We will make use of numpy when constructing multi-dimensional arrays, argparse for parsing command line arguments, pickle for writing our index to file, list_images (from imutils.paths) for grabbing the paths to our sprite images, imutils for handling contours across OpenCV versions, and cv2 for our OpenCV functions.

Then, Lines 11-16 parse our command line arguments. The --sprites switch is the path to our directory of scraped Pokemon sprites and --index points to where our index file will be stored.
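If you have not used vars(ap.parse_args()) before, this small sketch shows what the resulting args dictionary looks like. To keep it runnable without a terminal, the arguments are passed to parse_args as an explicit list; the values are the same ones used in the command shown later in this post.

```python
import argparse

# the same two switches as in index.py
ap = argparse.ArgumentParser()
ap.add_argument("-s", "--sprites", required=True,
    help="Path where the sprites will be stored")
ap.add_argument("-i", "--index", required=True,
    help="Path to where the index file will be stored")

# passing a list here stands in for typing the switches on the command line
args = vars(ap.parse_args(["--sprites", "sprites", "--index", "index.cpickle"]))

print(args)  # {'sprites': 'sprites', 'index': 'index.cpickle'}
```

Wrapping the parsed namespace in vars is what lets the rest of the script use args["sprites"] and args["index"].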

Line 21 handles initializing our ZernikeMoments descriptor. We will be using a radius of 21 pixels. I settled on 21 pixels after some experimentation to see which radius gave the best performing results.

Finally, we initialize our index on Line 22. Our index is a built-in Python dictionary, where the key is the filename of the Pokemon sprite and the value is the calculated Zernike moments. All filenames are unique in this case so a dictionary is a good choice due to its simplicity.

Time to quantify our Pokemon sprites:

    # loop over the sprite images
    for spritePath in list_images(args["sprites"]):
        # parse out the pokemon name, then load the image and
        # convert it to grayscale
        pokemon = spritePath[spritePath.rfind("/") + 1:].replace(".png", "")
        image = cv2.imread(spritePath)
        image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

        # pad the image with extra white pixels to ensure the
        # edges of the pokemon are not up against the borders
        # of the image
        image = cv2.copyMakeBorder(image, 15, 15, 15, 15,
            cv2.BORDER_CONSTANT, value = 255)

        # invert the image and threshold it
        thresh = cv2.bitwise_not(image)
        thresh[thresh > 0] = 255

Now we are ready to extract Zernike moments from our dataset. Let’s take this code apart and make sure we understand what is going on:

- Line 25: We use

globto grab the paths to our all Pokemon sprite images. All our sprites have a file extension of .png. If you’ve never usedglobbefore, it’s an extremely easy way to grab the paths to a set of images with common filenames or extensions. Now that we have the paths to the images, we loop over them one-by-one. - Line 28: The first thing we need to do is extract the name of the Pokemon from the filename. This will serve as our unqiue key into the index dictionary.

- Line 29 and 30: This code is pretty self-explanatory. We load the current image off of disk and convert it to grayscale.

- Line 35 and 36: Personally, I find the name of the

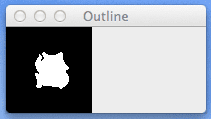

copyMakeBorderfunction to be quite confusing. The name itself doesn’t really describe what it does. Essentially, thecopyMakeBorder“pads” the image along the north, south, east, and west directions of the image. The first parameter we pass in is the Pokemon sprite. Then, we pad this image in all directions by 15 white (255) pixels. This step isn’t necessarily required, but it gives you a better sense of the thresholding on Line 39. - Line 39 and 40: As I’ve mentioned, we need the outline (or mask) of the Pokemon image prior to applying Zernike moments. In order to find the outline, we need to apply segmentation, discarding the background (white) pixels of the image and focusing only on the Pokemon itself. This is actually quite simply — all we need to do is flip the values of the pixels (black pixels are turned to white, and white pixels to black). Then, any pixel with a value greater than zero (black) is set to 255 (white).

Take a look at our thresholded image below:
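To see the padding, inversion, and thresholding steps on actual numbers, here is a tiny sketch that mirrors them with NumPy stand-ins (np.pad for cv2.copyMakeBorder and np.bitwise_not for cv2.bitwise_not). The 4x4 "sprite" and the file name pikachu.png are made up for illustration, and os.path is used as a portable alternative to slicing the path on "/".

```python
import os
import numpy as np

# hypothetical sprite path; os.path handles both "/" and "\\" separators
sprite_path = os.path.join("sprites", "pikachu.png")
pokemon = os.path.splitext(os.path.basename(sprite_path))[0]

# made-up 4x4 grayscale "sprite": white background with one darker pixel
image = np.full((4, 4), 255, dtype="uint8")
image[1, 2] = 100

# pad by 15 white pixels on every side (stand-in for cv2.copyMakeBorder)
padded = np.pad(image, 15, mode="constant", constant_values=255)

# invert so the background becomes 0, then push every non-zero pixel to 255
thresh = np.bitwise_not(padded)
thresh[thresh > 0] = 255

print(pokemon, padded.shape, int(thresh.sum()) // 255)  # pikachu (34, 34) 1
```

Only the single non-white pixel survives as foreground, which is exactly the mask behaviour described in the list above.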

This process has given us the mask of our Pokemon. Now we need the outermost contours of the mask — the actual outline of the Pokemon.

        # initialize the outline image, find the outermost
        # contours (the outline) of the pokemon, then draw
        # it
        outline = np.zeros(image.shape, dtype = "uint8")
        cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL,
            cv2.CHAIN_APPROX_SIMPLE)
        cnts = imutils.grab_contours(cnts)
        cnts = sorted(cnts, key = cv2.contourArea, reverse = True)[0]
        cv2.drawContours(outline, [cnts], -1, 255, -1)

First, we need a blank image to store our outlines. We define a variable, appropriately called outline, on Line 45 and fill it with zeros with the same width and height as our sprite image.

Then, we make a call to cv2.findContours on Lines 46 and 47. The first argument we pass in is our thresholded image, followed by a flag cv2.RETR_EXTERNAL telling OpenCV to find only the outermost contours. Finally, we tell OpenCV to compress and approximate the contours to save memory using the cv2.CHAIN_APPROX_SIMPLE flag.

Line 48 handles parsing the contours for various versions of OpenCV.

As I mentioned, we are only interested in the largest contour, which corresponds to the outline of the Pokemon. So, on Line 49 we sort the contours based on their area, in descending order. We keep only the largest contour and discard the others.

Finally, we draw the contour on our outline image using the cv2.drawContours function. The outline is drawn as a filled in mask with white pixels:

We will be using this outline image to compute our Zernike moments.

Computing Zernike moments for the outline is actually quite easy:

        # compute Zernike moments to characterize the shape
        # of pokemon outline, then update the index
        moments = desc.describe(outline)
        index[pokemon] = moments

On Line 54 we make a call to our describe method in the ZernikeMoments class. All we need to do is pass in the outline of the image and the describe method takes care of the rest. In return, we are given the Zernike moments used to characterize and quantify the shape of the Pokemon.

So how are we quantifying and representing the shape of the Pokemon?

Let’s investigate:

    >>> moments.shape
    (25,)

Here we can see that our feature vector is 25-dimensional (meaning that there are 25 values in our list). These 25 values represent the contour of the Pokemon.
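Where does the number 25 come from? One plausible explanation, assuming mahotas is left at what I believe is its default polynomial degree of 8 (our describe method does not pass a degree), is that there is one moment for every valid (n, m) pair with n up to 8. The short sketch below just counts those pairs; it does not call mahotas.

```python
# assumption: the default degree used by mahotas.features.zernike_moments is 8
degree = 8

# a Zernike polynomial exists for each order n and repetition m
# where 0 <= m <= n and (n - m) is even
pairs = [(n, m)
         for n in range(degree + 1)
         for m in range(n + 1)
         if (n - m) % 2 == 0]

print(len(pairs))  # 25
```

Under that assumption, raising the degree would give a longer feature vector.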

We can view the values of the Zernike moments feature vector like this:

    >>> moments
    [ 0.31830989  0.00137926  0.24653755  0.03015183  0.00321483
      0.03953142  0.10837637  0.00404093  0.09652134  0.005004
      0.01573373  0.0197918   0.04699774  0.03764576  0.04850296
      0.03677655  0.00160505  0.02787968  0.02815242  0.05123364
      0.04502072  0.03710325  0.05971383  0.00891869  0.02457978]

So there you have it! The Pokemon outline is now quantified using only 25 floating point values! Using these 25 numbers we will be able to disambiguate between all of the original 151 Pokemon.

Finally on Line 55, we update our index with the name of the Pokemon as the key and our computed features as our value.

The last thing we need to do is dump our index to file so we can use it when we perform a search:

    # write the index to file
    f = open(args["index"], "wb")
    f.write(pickle.dumps(index))
    f.close()
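For completeness, here is a small round-trip sketch showing how an index written this way can be read back. The two entries hold placeholder numbers rather than real Zernike moments, and the file is written to a temporary directory so the example does not touch your actual index.

```python
import os
import pickle
import tempfile

# placeholder index: the values are made up, not real Zernike moments
index = {"pikachu": [0.318, 0.001, 0.246], "charmander": [0.318, 0.004, 0.231]}

# write the index to a temporary file
path = os.path.join(tempfile.mkdtemp(), "index.cpickle")
with open(path, "wb") as f:
    pickle.dump(index, f)

# read it back, as a later search script might do
with open(path, "rb") as f:
    loaded = pickle.load(f)

print(loaded == index)  # True
```

Using a with block closes the file automatically, even if an error occurs while writing.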

To execute our script to index all our Pokemon sprites, issue the following command:

$ python index.py --sprites sprites --index index.cpickle

Once the script finishes executing, all of our Pokemon will be quantified in terms of shape.

Later in this series of blog posts, I’ll show you how to automatically extract a Pokemon from a Game Boy screen and then compare it to our index.

Summary

In this blog post, we explored Zernike moments and how they can be used to describe and quantify the shape of an object.

In this case, we used Zernike moments to quantify the outline of the original 151 Pokemon. The easiest way to think of this is playing “Who’s that Pokemon?” as a kid. You are given the outline of the Pokemon and then you have to guess what the Pokemon is, using only the outline alone. We are doing the same thing — only we are doing it automatically.

This process of describing and quantifying a set of images is called “indexing”.

Now that we have our Pokemon quantified, I’ll show you how to search and identify Pokemon later in this series of posts.
