Update – January 27, 2015: Based on the feedback from commenters, I have updated the source code in the download to include the original MNIST dataset! No external downloads required!

Update – March 2015, 2015: The nolearn package has now deprecated and removed the dbn module. When you go to install the nolearn package, be sure to clone down the repository, checkout the 0.5b1 version, and then install it. Do not install the current version without first checking out the 0.5b1 version! In the future I will post an update on how to use the updated nolearn package!

Deep learning.

This probably isn’t the first time you’ve heard of it. It’s everywhere. In academic papers. On /r/machinelearning. On DataTau. On Hacker News. And even on primetime TV.

Now I’m not exactly a wagering man, but I bet that after my long-winded rant on getting off the deep learning bandwagon, the last thing you would expect me to do is write a post on Deep Learning, right?

Well. Let’s back up a step.

Remember, that post wasn’t saying that deep learning is bad or should be avoided — in fact, quite the contrary!

Instead, the post was simply a reminder that deep learning is still just a tool.

And with every tool, there is a time and a place to use it. Just because you have a “hammer”, doesn’t mean that every problem you come across will be a “nail”. It takes a conscientious effort to pick the right tool for the job.

Anyway, one of my favorite deep learning packages for Python is nolearn.

It’s beautiful. It’s simple. And if you’re familiar with scikit-learn, then you’ll feel right at home. The models included in nolearn have implemented the fit and predict functions just like scikit-learn, and the output predictions are even compatible with the scikit-learn metric functions.

Really cool, right?

Read on to find out how to utilize the nolearn package to construct a Deep Belief Network.

OpenCV and Python versions:

This example will run on Python 2.7 and OpenCV 2.4.X/OpenCV 3.0+.

Getting Started with Deep Learning and Python

So in this blog post we’ll review an example of using a Deep Belief Network to classify images from the MNIST dataset, a dataset consisting of handwritten digits. The MNIST dataset is extremely well studied and serves as a benchmark for new models to test themselves against.

However, in my opinion, this benchmark doesn’t necessarily translate into real-world viability. And this is mainly due to the dataset itself where each and every image has been pre-processed — including cropping, clean thresholding, and centering.

In the real-world, your dataset will not be as “nice” as the MNIST dataset. Your digits won’t be as cleanly pre-processed.

Still, this is a great starting point to get our feet wet utilizing Deep Belief Networks and nolearn .

Deep Learning Concepts and Assumptions

Deep learning is all about hierarchies and abstractions. These hierarchies are controlled by the number of layers in the network along with the number of nodes per layer. Adjusting the number of layers and nodes per layer can be used to provide varying levels of abstraction.

In general, the goal of deep learning is to take low level inputs (feature vectors) and then construct higher and higher level abstract “concepts” through the composition of layers. The assumption here is that the data follows some sort of underlying pattern generated by many interactions between different nodes on many different layers of the network.

Now that we have a high level understanding of Deep Learning concepts and assumptions, let’s look at some definitions to aide us in our learning.

The Input Layer, Hidden Layers, and Output Layer

Before we get to the code, let’s quickly discuss what Deep Belief Networks are, along with a bit of terminology.

This review is by no means meant to be complete and exhaustive. And in some cases I am greatly simplifying the details. But that’s okay. This is meant to be a gentle introduction to DBNs and not a hardcore review with tons of mathematical notation. If that’s what you’re looking for, then sorry, this isn’t the post for you. I would suggest reading up on the DeepLearning.net Tutorials (trust me, they are really good, but if this is your first exposure to deep learning, you might want to get through this post first).

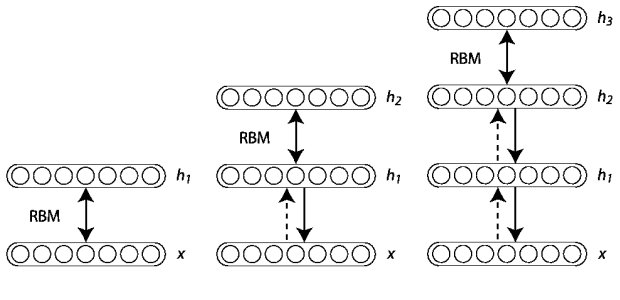

Deep Belief Networks consist of multiple layers, or more concretely, a hierarchy of unsupervised Restricted Boltzmann Machines (RBMs) where the output of each RBM is used as input to the next.

The major breakthrough came in 2006 when Hinton et al. published their A Fast Learning Algorithm for Deep Belief Networks paper. Their seminal work demonstrated that each of the hidden layers in a neural net can be treated as an unsupervised Restricted Boltzmann Machine with a supervised back-propagation step for fine-tuning. Furthermore, these RBMs can be trained greedily — and thus were feasible as highly scalable and efficient machine learning models.

This notion of efficiency was further demonstrated in the coming years where Deep Nets have been trained on GPUs rather than CPUs leading to a reduction of training time by over an order of magnitude. What once took weeks, now takes only days.

From there, deep learning has taken off.

But before we get too far, let’s quickly discuss this concept of “layers” in our DBN.

Input Layer

The first layer is our is a type of visible layer called an input layer. This layer contains an input node for each of the entries in our feature vector.

For example, in the MNIST dataset each image is 28 x 28 pixels. If we use the raw pixel intensities for the images, our feature vector would be of length 28 x 28 = 784, thus there would be 784 nodes in the input layer.

Hidden Layer

From there, these nodes connect to a series of hidden layers. In the most simple terms, each hidden layer is an unsupervised Restricted Boltzmann Machine where the output of each RBM in the hidden layer sequence is used as input to the next.

The final hidden layer then connects to an output layer.

Output Layer

Finally, we have our another visible layer called the output layer. This layer contains the output probabilities for each class label. For example, in our MNIST dataset we have 10 possible class labels (one for each of the digits 1-9). The output node that produces the largest probability is chosen as the overall classification.

Of course, we could always sort the output probabilities and choose all class labels that fall within some epsilon of the largest probability — doing this is a good way to find the most likely class labels rather than simply choosing the one with the largest probability. In fact, this is exactly what is done for many of the popular deep learning challenges, including ImageNet.

Now that we have some terminology, we can jump into the code.

Utilizing a Deep Belief Network in Python

Alright, time for the fun part — let’s write some code.

It is important to note that this tutorial (by in large) is based on the excellent example on the nolearn website. My goal here is to simply take the example, tweak it slightly, as well as throw in a few extra demonstrations — and provide a detailed review of the code, of course.

Anyway, open up a new file, name it dbn.py , and let’s get started.

# import the necessary packages from sklearn.cross_validation import train_test_split from sklearn.metrics import classification_report from sklearn import datasets from nolearn.dbn import DBN import numpy as np import cv2

We’ll start by importing the packages that we’ll need. We’ll import train_test_split (to generate our training and testing splits of the MNIST dataset) and classification_report (to display a nicely formatted table of accuracies) from the scikit-learn package. We’ll import the dataset module from scikit-learn to download the MNIST dataset.

Next up, we’ll import our Deep Belief Network implementation from the nolearn package.

And finally we’ll wrap up our import statements by importing NumPy for numerical processing and cv2 for our OpenCV bindings.

Let’s go ahead and download the MNIST dataset:

# grab the MNIST dataset (if this is the first time you are running

# this script, this make take a minute -- the 55mb MNIST digit dataset

# will be downloaded)

print "[X] downloading data..."

dataset = datasets.fetch_openml("mnist_784", version=1)

We make a call to the fetch_mldata function on Line 13 that downloads the original MNIST dataset from the mldata.org repository.

The actual dataset is roughly 55mb so it may take a few seconds to download. However, once the dataset is downloaded it is cached locally on your machine so you will not have to download it again.

If you take the time to examine the data, you’ll notice that each feature vector contains 784 entries in the range [0, 255]. These values are the grayscale pixel intensities of the flattened 28 x 28 image. Background pixels are black (0) whereas foreground pixels appear to be lighter shades of gray or white.

Time to generate our training and testing splits:

# scale the data to the range [0, 1] and then construct the training

# and testing splits

(trainX, testX, trainY, testY) = train_test_split(

dataset.data / 255.0, dataset.target.astype("int0"), test_size = 0.33)

In order to train our Deep Belief network, we’ll need two sets of data — a set for training our algorithm and a set for evaluating or testing the performance of the classifier.

We perform the split on Lines 17 and 18 by making call to train_test_split. The first argument we specify is the data itself, which we scale to be in range [0, 1.0]. The Deep Belief Network assumes that our data is scaled in the range [0, 1.0] so this is a necessary step.

We then specify the “target” or the “class labels” for each feature vector as the second argument.

The last argument to train_test_split is the size of our testing set. We’ll utilize 33% of the data for testing, while the remaining 67% will be utilized for training our Deep Belief Network.

Speaking of training the Deep Belief Network, let’s go ahead and do that:

# train the Deep Belief Network with 784 input units (the flattened, # 28x28 grayscale image), 300 hidden units, 10 output units (one for # each possible output classification, which are the digits 1-10) dbn = DBN( [trainX.shape[1], 300, 10], learn_rates = 0.3, learn_rate_decays = 0.9, epochs = 10, verbose = 1) dbn.fit(trainX, trainY)

We initialize our Deep Belief Network on Lines 23-28.

The first argument details the structure of our network, represented as a list. The first entry in the list is the number of nodes in our input layer. We’ll want to have an input node for each entry in our feature vector list, so we’ll specify the length of the feature vector for this value.

Our input layer will now feed forward into our second entry in the list, a hidden layer. This hidden layer will be represented as RBM with 300 nodes.

Finally, the output of the 300 node hidden layer will be fed into the output layer, which consists of an output for each of the class labels.

We can then define our learn_rate , which is the learning rate of the algorithm, the decay of the learn rate (learn_rate_decays ), the number of epochs , or iterations of the training data, and the verbosity level.

Both learn_rates and learn_rates_decays can be specified as a single floating point values or a list of floating point values. If you specify only a single value, this learning rate/decay rate will be applied to all layers in the network. If you specify a list of values, the the corresponding learning rate and decay rate will be used for the respective layers.

Training the actual algorithm takes place on Line 29. If you have a slow machine, you way want to make a cup of coffee or go for a quick walk during this time.

Now that our Deep Belief Network is trained, let’s go ahead and evaluate it:

# compute the predictions for the test data and show a classification # report preds = dbn.predict(testX) print classification_report(testY, preds)

Here we make a call to the predict method of the network on Line 33 which takes our testing data and makes predictions regarding which digit each image contains. If you have worked with scikit-learn at all, then this should feel very natural and comfortable.

We then present a table of accuracies on Line 34.

Finally, I thought it might be interesting to inspect images individually rather than on aggregate as a further demonstration of the network:

# randomly select a few of the test instances

for i in np.random.choice(np.arange(0, len(testY)), size = (10,)):

# classify the digit

pred = dbn.predict(np.atleast_2d(testX[i]))

# reshape the feature vector to be a 28x28 pixel image, then change

# the data type to be an unsigned 8-bit integer

image = (testX[i] * 255).reshape((28, 28)).astype("uint8")

# show the image and prediction

print "Actual digit is {0}, predicted {1}".format(testY[i], pred[0])

cv2.imshow("Digit", image)

cv2.waitKey(0)

On Line 37 we loop over 10 randomly chosen feature vectors from the test data.

We then predict the digit in the image on Line 39.

To display our image on screen, we need to reshape it on Line 43. Since our data is in the range [0, 1.0], we first multiply by 255 to put it back in the range [0, 255], change the shape to be a 28 x 28 pixel image, and then change the data type from floating point to an unsigned 8-bit integer.

Finally, we display the results of the prediction on Lines 46-48.

Results

Now that the code is done, let’s look at the results.

Fire up a shell, navigate to your dbn.py file, and issue the following command:

$ python dbn.py

gnumpy: failed to import cudamat. Using npmat instead. No GPU will be used.

[X] downloading data...

[DBN] fitting X.shape=(46900, 784)

[DBN] layers [784, 300, 10]

[DBN] Fine-tune...

100%

Epoch 1:

loss 0.288535176023

err 0.0842298497268

(0:00:04)

100%

Epoch 2:

loss 0.170946833078

err 0.0495645491803

(0:00:05)

100%

Epoch 3:

loss 0.127217275595

err 0.0362662226776

(0:00:04)

100%

Epoch 4:

loss 0.0930059491925

err 0.0268954918033

(0:00:05)

100%

Epoch 5:

loss 0.0732877224143

err 0.0234161543716

(0:00:04)

100%

Epoch 6:

loss 0.0563644782051

err 0.0173539959016

(0:00:04)

100%

Epoch 7:

loss 0.0383996891073

err 0.012487192623

(0:00:05)

100%

Epoch 8:

loss 0.027456679965

err 0.00817537568306

(0:00:05)

100%

Epoch 9:

loss 0.0208912373799

err 0.00589139344262

(0:00:05)

100%

Epoch 10:

loss 0.0203280455254

err 0.00616888661202

(0:00:05)

precision recall f1-score support

0 0.98 0.99 0.99 2280

1 0.99 0.98 0.99 2617

2 0.98 0.98 0.98 2285

3 0.97 0.98 0.97 2356

4 0.98 0.98 0.98 2268

5 0.98 0.97 0.98 2133

6 0.98 0.98 0.98 2217

7 0.99 0.98 0.98 2430

8 0.97 0.97 0.97 2255

9 0.97 0.97 0.97 2259

avg / total 0.98 0.98 0.98 23100

Here you can see that our Deep Belief Network is trained over 10 epochs (iterations over the training data). At each iteration our our loss function is minimized and the error on the training set is lower.

Taking a look at our classification report we see that we have obtained 98% accuracy (the precision column) on our testing set. As you can see, the “1” and “7” digits was accurately classified 99% of the time. We could have perhaps obtained higher accuracy for the other digits had we let our network train for more epochs.

And below we can see some screenshots of our Deep Belief Network correctly classifying the digit in their respective images.

Note: You’ll notice that the loss, error, and accuracy values do not 100% match the output above. That is because I gathered these sample images on a separate run of the algorithm. Deep Belief Networks are stochastic algorithms, meaning that the algorithm utilizes random variables; thus, it is normal to obtain slightly different results when running the learning algorithm multiple times. To account for this, it is normal to obtain multiple sets of results and average them together prior to reporting final accuracies.

Here we can see that we have correctly classified the “1” digit.

Again, we can see that our digit is correctly classified.

But take a look at this “8” digit below. This is far from a “legible digit”, but the Deep Belief Network is still able to sort it out:

Finally, let’s try a “7”:

Yep, that one is correctly classified as well!

What's next? I recommend PyImageSearch University.

30+ total classes • 39h 44m video • Last updated: 12/2021

★★★★★ 4.84 (128 Ratings) • 3,000+ Students Enrolled

I strongly believe that if you had the right teacher you could master computer vision and deep learning.

Do you think learning computer vision and deep learning has to be time-consuming, overwhelming, and complicated? Or has to involve complex mathematics and equations? Or requires a degree in computer science?

That’s not the case.

All you need to master computer vision and deep learning is for someone to explain things to you in simple, intuitive terms. And that’s exactly what I do. My mission is to change education and how complex Artificial Intelligence topics are taught.

If you're serious about learning computer vision, your next stop should be PyImageSearch University, the most comprehensive computer vision, deep learning, and OpenCV course online today. Here you’ll learn how to successfully and confidently apply computer vision to your work, research, and projects. Join me in computer vision mastery.

Inside PyImageSearch University you'll find:

- ✓ 30+ courses on essential computer vision, deep learning, and OpenCV topics

- ✓ 30+ Certificates of Completion

- ✓ 39h 44m on-demand video

- ✓ Brand new courses released every month, ensuring you can keep up with state-of-the-art techniques

- ✓ Pre-configured Jupyter Notebooks in Google Colab

- ✓ Run all code examples in your web browser — works on Windows, macOS, and Linux (no dev environment configuration required!)

- ✓ Access to centralized code repos for all 500+ tutorials on PyImageSearch

- ✓ Easy one-click downloads for code, datasets, pre-trained models, etc.

- ✓ Access on mobile, laptop, desktop, etc.

Summary

So there you have it — an brief, gentle introduction to Deep Belief Networks.

In this post we reviewed the structure of a Deep Belief Network (at a very high level) and looked at the nolearn Python package.

We then utilized nolearn to train and evaluate a Deep Belief Network on the MNIST dataset.

If this is your first experience with DBNs, I highly recommend that you spend the next few days researching and reading up on Artificial Neural Networks (ANNs); specifically, feed-forward networks, the back-propagation algorithm, and Restricted Boltzmann Machines.

Honestly, if you are serious about exploring Deep Learning, the algorithms I mentioned above are required, non-optional reading!

You won’t get very far into deep learning without reading up on these techniques. And don’t be afraid of the academic papers either! That’s where you’ll find all the gory details.

What’s Next?

Training a Deep Belief Network on a CPU can take a long, long time.

Luckily, we can speed up the training process using our GPUs, leading to training times being reduced by an order of magnitude or more.

In my next post I’ll show you how to setup your system to train a Deep Belief Network on your GPU. I think the speedup in training time will be quite surprising…

Be sure to enter your email address in the form at the bottom of this post to be updated when the next post goes live! You definitely won’t want to miss it.

Interested in Handwriting Recognition?

Did you enjoy this post on handwriting recognition?

If so, you’ll definitely want to check out my Practical Python and OpenCV book!

Chapter 6, Handwriting Recognition with HOG details the techniques the pro’s use…allowing you to become a pro yourself! From pre-processing the digit images, utilizing the Histogram of Oriented Gradients (HOG) image descriptor, and training a Linear SVM, this chapter covers handwriting recognition from front-to-back.

Simply put — if you loved this blog post, you’ll love this book.

Sound interesting?

Click here to pickup a copy of the Practical Python and OpenCV

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!

Ah! Simply beautiful!

Thanks for the explanation 🙂

Glad you enjoyed it!

Sure I did. I am a regular follower now 🙂

Very clear and concise… thanks

One small mistake, one for each of the digits 0-9 than 1-10. BTW, nice article.

Thanks, I have updated the post!

deep learning is mostly about unsupervised learning from unlabeled data. Can you show more clearly where this unlabeled data plays a role in your demo?

Because this demo looks like traditional supervised learning (but maybe I did not understand something well, I am a beginner in deep learning)

Hi Mostafa. I would argue that deep learning is more than just unsupervised learning and definitely more than unlabeled data. However, in this example a Deep Belief Network is constructed using a set of stacked RBMs that are trained in an unsupervised manner. These RBMs are then fine-tuned during a back-propagation stage which utilizes the labeled data.

Hello, could you provide more details as to why your fit() call uses two training sets?

dbn.fit(trainX, trainY), as opposed to simply dbn.fit(trainX)

The DBN algorithm is a supervised learning algorithm. It requires both the data (i.e., features) and class labels. The

trainXis your data andtrainYare your class labels.Hey there, I have problems to download the data. Is there anything missing in the code, or how can I integrate the data manually?

THANKS

Hi Nito, the

dataset = datasets.fetch_mldata("MNIST Original")function should take care of everything for you.Thanks a lot.

Do you know if it is possible to access the hidden layers or extract the weights of the DBN in the nolearn framework?

|Cheers

Not off the top of my head, no, I haven’t gotten that far into the nolearn implementation. However, I would suggest taking a look at the DBN implementation directly on GitHub. It seems fairly simple to parse through.

Thanks for the quick responses to this great article, Adrian.

Cheers

Hi Nito, I just wanted to leave an update for you and let you know that the original MNIST dataset is now included in the source code download. No extra calls to

fetch_mldataneed to be made!Hi,

thank you for the great introduction. As far as i know a deep belief network doesn’t have to be

a stack of restricted boltzmann machines. Where is de evidence for the use of rbms in the implementation of the nolearn library?

Cheers

Hi Mehmet, you are certainly right. There are many different flavors of deep learning models, including your standard Deep Belief Networks, your Convolutional Neural Networks, and now your Recurrent systems. I was simply trying to provide a high level overview of deep learning so beginners could get up to speed.

Hi Adrian,

Great article. I tried to load the data but couldn’t get it right. It says

IOError: could not read bytes

Have any guess why?

Hi Ed, I’m not sure why that would happen. I assume you are getting that error when downloading the MNIST dataset? If so, I would contact the maintainer of the nolearn package and see if the MNIST dataset has moved location.

Thanks. I tried everything, but just doesn’t do. There is a way to load manually the data with python?

If not, thanks anyway!

I will figure out a fix and post an update when I can.

Thanks. this is fantastic. But I’m in lower level! I want to start my programming in python in the linux. I want some suggestion about IDEs and how to bundle openCV within it and etc.

I will very thankfull if you could give some links or hints to get to start it.

Check out the PyCharm IDE.

Hi Adrian Rosebrock,

Really well written and to the point on practical issue of using Deep learning.

Today, I tried to run your code but nolearn module is giving error.

I downloaded the module from Github and install using setup.py

import nolearn # is going smooth

from nolearn.dbn import DBN # is thowing no DBN module found error

Do you have any update on change of module implementation? I searched but can’t found.

Thanking you.

With Regards.

Ajit

Hi Ajit, I’m not aware of that issue. You should open up an issue on the nolearn GitHub and talk with the developers (I’m not one of them).

UPDATE: Take a look at the CHANGES.txt file. It looks like the

dbnpackage has been deprecated and removed and replaced in favor oflasange. Let me see how complicated it will be to do an update on this post.UPDATE 2: If you want to the code in this example to work, you’ll need to clone down the repo and use the ‘0.5b1’ version. Something like this should work:

Hi Adrian,

Thanks you sir…!!! With your suggestion and bootstrapping code, Finally, first time I am running Deep Learning program.

Will try to update the nolearn document.

Great!!!

Cheers!!!!

Awesome post. Thank you very much. I wonder why you preferred no learn to theano. I tend to use sklearn for deep learning but it does not look as sophisticated as theano.

Theano is indeed really nice, but I think it’s easier to teach the basics (at least with practical) examples using nolearn.

Hi Adrian

Thank you for such a beautiful introduction to deep learning using nolearn. I would like to know if face recognition can be done using the same. I could not find an image dataset in scikit-learn. Can you please help me

You can certainly do face recognition with deep learning. There is actually the “Labeled Faces in the Wild” dataset that is already in scikit-learn. Be sure to take a look again and you’ll find it.

hey how to give our own test images to for recognition ?

Hi Karthik, I actually review how to use your own custom test images for handwriting recognition in Chapter 6 of Practical Python and OpenCV + Case Studies.

Hi Adrian

I am new to your blog, but i really like what i have seen so far. I have a favour I like to ask you.

Can you give a tutorial on (webcam) face detection with deep learning (potentially or preferably with convolutional neural networks) using theano og torch (for the benefit of having the tool of utilizing gpu).

I have a bagground in machine learning and deep learning, but have never utilized it for video/webcam face detection.

I am gonna use my weekend to go through your Case Studies..(maybe I could build upon your tutorial from there )

Hey Rishok, thanks for the comment. I’ll be sure to consider this for a tutorial idea in the future! In the meantime, you’ll definitely want to take a look at the PyImageSearch Gurus course — I’ll be covering both deep learning and face recognition inside the course.

Hi again

Thank for the fast responce. I definitely look at your course, but it starts a bit to late. I would like to have a model running within 2 weeks (without it being optimized). Can i ask for your advise if needed. I do not really know how to tackle face detection for video, even though I have developed deep learning models for digit recognition.

Feel free to shoot me an email, I’ll do the best I can to point you in the right direction — but over the next two weeks I won’t be online much. I’ll be doing a ton of writing for the PyImageSearch Gurus course.

Hi again

Can you give me an initial feedback on this. How should my pipe line look like?

My idea is to use convolutional neural networks (potentially with the LFW Face Database) for (webcam) face detection. Here i would like to implement face alignment in order to detect faces although the face is not full visible (only the side of the face). If i have time i can then extend to face recognition.

I will hereafter continue through mail.

Hmmm. Using CNNs to actually detect the faces in webcam footage seems like overkill. Is there a reason why Haar cascades or HOG + Linear SVM does not work in your case? The reason I ask is because webcam footage is pretty standard and controlled with the user sitting directly in front of their computer and I’m not sure the CNN is worth the added effort.

For the actual identification of the face you have a ton of different algorithm options. I would start by using deep learning and deep funneling for the alignment. From there you have options like Eigenfaces, Fisherfaces, and LBPs for face recognition.

Best ever blog which provide information in a very precise manner, really helpful

Thanks Pakeeze, I’m glad it helped! 🙂

Hello!

Great tutorial, thanks for sharing it! I have one question about the feature vector. What about color images? Should I still you grayscale insensitive pixel or does it makes sense to try out different color spaces or gradients?

Thanks!

Whether or not you use color is entire dependent on your application. When recognizing handwritten digits, color has no real influence in the actual identification, so we simply discard it. But in your application if you think color can help influence the classification, then it’s worth looking into. Finally, if you’re interested in image gradients, or rather learning convolutional filters to classify images, then you should look into Convolutional Neural Networks — I should (hopefully) have a post on CNNs on the PyImageSearch blog in the next few months.

Ok, thanks a lot!

Thanks for Ur did. when do you explant the Theano tools ,and I want to follow U ,

Awesome post, thank you Adrian !

May I ask just one thing? What’s the minimum amount of data it would require to perform Deep Learning in general?

Cheers

Hi Clinton, thanks for the comment. The minimum amount of data required for deep learning really depends on the type of problem. But for algorithms such as CNNs, you’re probably looking at a bare minimum of 1,000 samples per class that you want to identify for a reasonably challenging problem. You might be able to use less data for certain problems, so in general, you’ll want to spot-check a few algorithms to see which gives you good performance, and double down on the ones that look the most promising.

Hi Adrian, I tried it and it worked well. As a beginner, am so excited to use this but i have a challenge. how do i provide my own training and test data? also, what should be the format of the data? my data actually has over 20 features and several instances(100,000 training and 15,000 test data) all labeled.The features are integers, float and strings. please how do i go about it? you can also mail me a sample code. Thanks in advance

The format of the data should be numeric, either floats or integers. For strings you’ll need to perform what’s called “one hot encoding” to transform the strings/categories into integers. You theoretically can mix all these together for a deep learning system, although I’m not entirely sure what your results would look like. I honestly wouldn’t start with deep learning — instead I would try some basic machine learning algorithms to get a benchmark first, and then only move to deep learning if necessary.

Hi Andrian, i have used other ML algorithms and achieved over 80% accuracy. It is a classification task where i have 20 features and 2 classes {good, bad}. i want to reduce the features yet achieve high accuracy. Using nolearn, i realized i could get above 77% accuracy but i don’t know how its possible.

Not every classification problem is suitable for deep learning — it could very well be that this is one of them. You could try increasing your network size to see if that improves your results, but in general, given only 20 features and 2 classes, I would be more interested in using other algorithms such as SVM.

HI Adrian,

How would you tweak this to handle a zip file of images? Right now, it is just inputing images one at a time?

Jeremy

Hey Jeremy, the code is actually classifying multiple images by storing the images to be classified in the

testXvariable and then passing them on to thepredictmethod — that’s how I was able to grab the accuracy of the model so quickly. The bottom of the code example simply shows how to classify images one at a time. If you want to classify multiple, just look at Line 33.If you have multiple image files on disk (on in .zip file), you just need to create a

forloop that loops over them, loads them into an array (like thetestXarray), and the code will work just fine.hello, I don’t know whether do it or not.

But I want to use this example’s output for my NN’s Initial weight (Originally is Random)

(I want to improve my accuracy)

Can I do that? thanks.

Hi Adrian , I am trying to explore deep belief network in Python for audio data . Do you think I can use the same process as you have explained here for audio data as well? Or would that require other tools and libraries?

Deep learning with audio is very much an active field; however, I have not explored it or even read any papers on it, so unfortunately I’m not the right person to ask about this. You would likely need other tools and libraries.

Hi Adrian,

Very interesting post.

I have seen that the post is focused on image process and as Rashmi asks in the last question, there are also people looking for audio or other raw data.

I would like to ask if there is any possibility to use deep learning from features that I already have. I mean that I already have all features of the samples and I have done a feature selection/feature engineering work which works quite well, but I would like to know if it would be possible to apply Deep Learning to these features to create new high added value features which can improve the accuracy of the model. If so, how it is done? Which tools are available on python?

It certainly is possible to use features you have already extracted as inputs to a deep learning network. In fact, you can use the code from this post! Just modify the DBN to accept your feature vectors as inputs rather than the raw pixel intensities.

Hi,

I ran this code. However, my training error is 0.89 ? did anyone had the same problem ?

Hi, I ran this code, and the prediction is the same for 10 epochs, which makes the acc 0.1..is there any problem with the nolearn package as i already have the 0.5b1 version

10% accuracy is extremely low, your accuracy should be similar to what I am getting in this blog post. I would make sure (1) you are indeed running the 0.5b1 version and (2) you are using the code download at the bottom of the post to make sure the code is 100% the same.

I know I am late to this, but I am also getting very low accuracy. In all 10 epochs my loss > 2 and err 0.9.

Versions:

nolearn 0.5b1

numpy 1.13.1+mkl

scipy 0.19.1

scikit-learn 0.18.2

I think this is an issue with the newer NumPy compatiblity with the old version of nolearn. Try using

numpy==1.11.0Hi, Thanks for such an amazing tutorial ..

I have a question regarding DBN,

How did you choose the number of hidden nodes in different layers?

Choosing the number of hidden layers, along with the number of nodes per hidden layer, are hyperparmeters to the network that you’ll need to cross-validate. However, a general rule of thumb is to use between 2/3 and 3/4 the number of nodes from the previous layer while never going below 1/4 the size of the original input (except for the output layer, of course). This allows the network to learn progressively higher-level features from the previous layers.

hi Adrian Rosebrock, I work now on the handwritten digits recognition my number is “1” our teacher give us aa base image of 10k “from yan LeCun site ” and it asks us to make a handwritten digits recognition system in python you can m ‘help please..

This tutorial uses the MNIST dataset which is (likely) what your teacher gave to you based on the Yann LeCun website. This code can be used to train your classifier, you just need to convert the binary file to a NumPy array. There are also alternative methods to construct handwritten digit classifiers. I cover how to use Histogram of Oriented Gradients features for handwriting recognition inside Practical Python and OpenCV.

the problem that I have encountered is that I could not to partition my text file that contains the data to :

dapprentissage data

dapprentissage labels

test data

test labels…

Sure you can, you just need to read-up on the documentation of the MNIST dataset in binary format. See the “File formats for the MNIST database” section of this page.

Thanks for this nice post. If I want to use my own “local” dataset, how can I do that?

It depends on your actual dataset and how it is structured. But in general, you need to “flatten” each image so it’s just a list of pixel intensities. The input size of the network is therefore the size of the flattened image.

Hi Adrian! First of all I must say I really appreciate your work, you’re doing great job!

Now, I have few questions. I am working on some kind a car logo tracker app as faculty project. My goal is to find car brand by giving the program random car logo image, or whole car image. I tried with SIFT and SURF but the results were very bad. I also tried with HOG but I got stuck. And now I’m thinking of neural networks. I found a lot of useful stuff here, but I’m not sure what is the best way to make my app give the best results. Can you please give me some advice? Than you in advance,

Marko.

If you’re using just the car logo then HOG will work extremely well for this. I actually detail how to build such a car logo recognition system inside the PyImageSearch Gurus course. If you’re using the entire car image, then you’ll likely need deep learning.

Im running this script using Python3 and it throws me this error:

ImportError: No module named ‘sklearn’

I downloaded the following, https://pythonhosted.org/nolearn/ Is there anything else that Im missing?

Yes, you need to install scikit-learn as well:

$ pip install -U scikit-learnAlso, please check my note at the top of this blog post. You should be using the 0.5b1 version of nolearn. If you installed it directly via pip, then you are not using the correct version.

Thanks for your help, the code works perfectly.

How did you do running nolearn 0.5b1 with python3? dbn from nolearn was said to be incompatilbe with python3

Hey, where you left pictures which you trained neural network?

The MNIST dataset is automatically downloaded via the

datasets.fetch_mldata("MNIST Original")function call. You can check your home directory for the scikit-learn data directory, where you can find the original data.Hi Adrian

Do you also have some example for recognizing handwritten characters, such as those that people fill out inside square boxes for each character?

I am in need of some help and pointers to some sample training data and tools or examples to help with this.

Thanks

– Pradeep

Indeed, I absolutely do. Take a look at Practical Python and OpenCV. Inside the book I have an entire chapter dedicated to handwritten digit recognition. This approach can be extended to work with other characters as well.

Hi Adrian,

It was very useful tutorial for beginners just like me. But I had a problem in this program and it related with scipy package.

My OS was Windows 8.1, and version of scipy was 0.17.0. when I ran this program, it was crashed but there is no reason shown at console. I tried to find out the reason and I solve this problem by downgrade the scipy package to 0.16.0. Here is the issue about this problem. https://github.com/ContinuumIO/anaconda-issues/issues/650

I hope this can help people who suffer same problem just like me.

Thanks for sharing Jun!

Can color histograms like the Hobbits and Histograms be used to recognize characters, or is deep learning the best approach?

Thanks,

Jet

Color histograms are typically a poor choice for recognizing characters. Instead, Histogram of Oriented Gradients tends to work well. I cover HOG for character recognition inside Practical Python and OpenCV. Otherwise, deep learning tends to work well for character recognition.

how you decieded all parameters such as nodes,hidden units,layers and rates ??

You normally would cross-validate all of these parameters in practice.

Hi,

It’s really a great post for beginners like me. It will surely help others to. I am putting a link to this blog post on my ML blog. will come back if any issue arises while executing the code.

Thanks for the link-back Sapan, I appreciate it!

Hi,

I could successfully run my first ever deep learning code with the help of this post. Thanks a lot. I also tried by changing different parameters and obtained some insights. Can you please post some useful details or similar sample code for beginners on speech processing using RNNs or any other deep network?

Thanks again.

I personally don’t have any experience with speech processing, so I’m not sure I’ll be able to do a tutorial on that. Sorry about that!

Hey Adrian,

Thats great introduction to Deep learning with the best example.. Thank you very much.

However, I would like to know whether can i do speech recognition using no learn? My plan is to feed the data taken from MFCC into Deep net. If no learn is not enough to do speech recognition, what are the alternatives(best ones)? Please do suggest.

I personally don’t have any experience with speech recognition, so I’m not the right person to ask regarding this question.

Hi,

Great article, really well explained introduction! Quick question, though: I understand why the input layer has 784 nodes, but for the hidden layer, why did you pick 300 nodes? Is it semi-arbitrary, or is there a reason for it?

For Deep Belief Networks, you commonly reduce the number of layers in subsequent layers in the network. I discuss this in more detail inside the PyImageSearch Gurus course.

How to install nolearn for anaconda in windows

I don’t support Windows on this blog. I would suggest getting Unix-based box up and running if you want to work with nolearn.

How to install nolearn in windows? I can not install in python.explanation how install

Hey man.. Nice tutorial! I have two question:

– How Can I use this algorithm to classify my own dataset?

– This algorithm works with gestures or just numbers?

I’m doing this questions cause I have a dataset with 48.000 images to trainning and test, and I need an algorithm (Neural Network / deep learning) to be able to classify which gestures my image is showing.

Thanks.

This tutorial focuses on Deep Belief Networks which are not the best choice for recognizing/classifying objects in images. Instead, I would recommend using Convolutional Neural Networks (CNNs). I detail how to utilize CNNs for image classification and explain how to use them to train your own classifier inside the PyImageSearch Gurus course.

Hi man,

Would like to thank you first of all for a great tut. I had one doubt regarding the dataset, I would be sounding silly but, where exactly the data is downloaded when we write “datasets.fetch_mldata”?

It is stored in

~/scikit_learn_data. Change that directory and you’ll find the downloaded data.I am a beginner and found this excellent tutorial. but I could not make it work because the dbn module in nolearn (0.51b and other versions) is not compatible with python 3.5. you have some solution or way to make this possible?

Hi Adrian.

can you explain me about structure file *.xml if i want train pedestrian detection. i don’t know how to save *.xml to opencv can read later when i run real time. tks a lot

The pedestrian detection .xml files are pre-trained by the OpenCV developers and contributors. You can train your own using the Haar cascade training method.

Thank you. A very nice tutorial. I want to go more into deep neural networks. I am searching for your blogs . Please if you have, suggest me.

You can use the search functionality on the bottom of sidebar on the right-hand side. Just search for “deep learning”.

Also, here is a list of all blog posts tagged with deep learning.

Thank you for the tutorial. Is one able to specify the number of hidden layers in instantiating nolearn’s DBN? It seems the above example uses only 1 hidden layer. If I understood the docs correctly, k-number of hidden layers are specified by k-many pairs (n_vis_units_i, n_hid_untis_i) for i = 1, …, k

:param layer_sizes: A list of integers of the form

“[n_vis_units, n_hid_units1,

n_hid_units2, …, n_out_units]“.

Is my understanding correct?

The number of hidden layers is automatically determined by the length of the list passed to the DBN. For example, in this blog post we use hidden layer with 300 nodes. If I wanted to add a second hidden layer with 100 nodes I would change the list to be:

[trainX.shape[1], 300, 100, 10]Again, since we are passing the node counts into the DBN we do not need to explicitly define the number of inputs and outputs on a per-layer basis.

Thank you for this Tutorial, things are more clear.

Thanks Mejd, I’m happy I could help. Have a great day 🙂

Hi,

Thanks a lot because Your Site makes me to learn lot. Just give a idea how to train the image using svm in python.help me out in this criteria.

What do you mean by “train the image using SVM”? What types of images are you working with?

Hi Adrian, I’m looking to make a neural network that would recognize the terrain present in a given 3D lidar image, the image is stored in the form of point clouds, so is poosible to achieve this using DBN?

Hey Rishabh — I must admit that I have very little experience working with 3D data and no experience working with LIDAR, so I unfortunately don’t have any insight into this area. That said, I think Convolutional Neural Networks rather than DBNs are better suited for this problem. I would do a search for “LIDAR + convolutional neural networks” to see what the current research approaches are.

Hey Adrian, can you tell me how did you get those ‘digit’ dialogue boxes (those which show the digit image from the database) to appear in the results?

I use windows OS

I simply use the

cv2.imshowfunction to display the image to the screen (Line 47).HI, i am using the code from this post. thanks to the great tutorial on deep learning. i am using python 3.4, opencv 3.2, nolearn 0.5b1 and sklearn 0.18.1 and i am getting error

“except _cudamat.CUDAMatException, e: # this means that malloc failed” in file name gnumpy.py. even though i changed the code in gnumpy to use only cpu i got the same result.

how to solve this. thanks in advance.

I haven’t tried this code with Python 3 before. Could you try with Python 2.7 and see if the error still exists?

I ‘m from India can u pls tell me how can i get ur book “deeplearning for cv with python”?

Hi Vinod — I am currently running a Kickstarter campaign to fund the creation of my upcoming deep learning book. You can use the Kickstarter to pre-order the book at a discounted rate. The book itself will be released in autumn/winter of 2017.

hi,your post is amazing .I am doing a project on Emotion Recognition using DBN and I am using an FAU.io corpus.Can you please tell me how to prepare data for this and train and test the dbn.

Please help.

I haven’t used the FAU corpus before, but I will be covering emotion recognition in my upcoming deep learning book.

Love your blog and Great tutorial!

I want to implement this solution in c++ code on a real handwrite text image. is there a way to import only the trained model (like using yml file) without the need to train the model on every run and predict one specific digit every time?

another question,

I have also implemented Handwrite recognition with openCV3.2 using opencv 5000 handwrite database. I’m using HOG and SVM but my results in the real world are not good, is there a way to use MNST data base instead and do you think I’ll have better results with this database?.

final question, do you think Deep Belief Network approach as describe will give better results than HOG+SVM?

Thanks,

If you want to use C++ I would instead recommend using the mxnet library. As for your other questions, I would suggest applying a Convolutional Neural Network as I do in this blog post.

Hi sir, i hope that you’re fine.

please i just worked with your tutorial on DBN and digits recognition, the tutorial was great and every thing was clear.

After that, i just want to plot the ROC curve for the classifier and i am wondering since we have 10 classes, are we gonna have 10 ROC curves in the same figure or just one ROC curve that represente all the classes of the classifier.

thank you very much.

Unfortunately that’s a non-trivial question for > 2 classes. I would refer here for further reading.

I like your tutorial. You downloaded digit dataset over internet. But

How to download alphabetical character data.

In cases I download char74k-english .tgz dataset from mldata. Org. I don’t know how to use it?

Please help.

Hi Adrian. Will you make example for new nolearn package without dbn? It will be very useful.

I would highly recommend using Convolutional Neural Networks for most image classification tasks. Is there a reason you would like to use a DBN?

Hey Adrian, i face some problem when downloading the MNIST. how to solve this?

What is the problem you are encountering? Without knowing what the exact problem is I cannot suggest a solution.

Hi Adrian,

Can you please tell me whats the next post of yours after this (Getting Started with Deep Learning and Python) post.

Also can you please tell me how to move to your next post after the completion on reading the current post.

At the bottom of every post, just before the comments section, you’ll see two links. The first link points you to the previous post. The second link points you to the next post.

hi aidan

I tried running your code (from above) and it runs, but the training is horrible, the loss hardly decreases and I don’t get the nice results you get. Any idea what could be wrong: the only thing I changed was to update the MNIST call function to mnist.load_data() from keras.datasets? Tried changing the network architecture, but the loss is the same, suggesting there is some sort of a bug. Do you think something broke in the DBN implementation?

This is a very, very old tutorial and DBNs are frankly not used very much for image classification anymore. Furthermore, the DBN package itself is not being updated. I would suggest you follow a more recent tutorial, such as this one.

Simple!

Thank you

You are welcome!