Wow, last week’s blog post on building a basic motion detection system was awesome. It was a lot of fun to write and the feedback I got from readers like yourself made it well worth the effort to put together.

For those of you who are just tuning in, last week’s post on building a motion detection system using computer vision was motivated by my friend James sneaking into my refrigerator and stealing one of my last coveted beers. And while I couldn’t prove it was him, I wanted to see if it was possible to use computer vision and a Raspberry Pi to catch him in the act if he tried to steal one of my beers again.

And as you’ll see by the end of this post, the home surveillance and motion detection system we are about to build is not only cool and simple, but it’s also quite powerful for this particular goal.

Today we are going to extend our basic motion detection approach and:

- Make our motion detection system a little more robust so that it can run continuously throughout the day and not be (as) susceptible to lighting condition changes.

- Update our code so that our home surveillance system can run on the Raspberry Pi.

- Integrate with the Dropbox API so that our Python script can automatically upload security photos to our personal Dropbox account.

We’ll be looking at a lot of code in this post, so be prepared. But we’re going to learn a lot. And more importantly, by the end of this post you’ll have a working Raspberry Pi home surveillance system of your own.

You can find the full demo video directly below, along with a bunch of other examples towards the bottom of this post.

Update: 24 August 2017 — All code in this blog post has been updated to work with the Dropbox V2 API so you no longer have to copy and paste the verification key used in the video. Please see the remainder of this blog post for more details.

Before we start, you’ll need:

Let’s go ahead and get the prerequisites out of the way. I am going to assume that you already have a Raspberry Pi and camera board.

You should also already have OpenCV installed on your Raspberry Pi and be able to access your Raspberry Pi video stream using OpenCV. I’ll also assume that you have already read and familiarized yourself with last week’s post on building a basic motion detection system.

Finally, if you want to upload your home security photos to your personal Dropbox, you’ll need to register an app with the Dropbox API to obtain an access token. Having Dropbox API access is not a requirement for this tutorial, though; it’s just a little something extra that’s nice to have.

Other than that, we just need to pip-install a few extra packages.

If you don’t already have my latest imutils package installed, you’ll want to grab that from GitHub or install/update it via pip install --upgrade imutils

And if you’re interested in having your home surveillance system upload security photos to your Dropbox, you’ll also need the dropbox package: pip install --upgrade dropbox

Note: The Dropbox API v1 is deprecated. This post and the associated code download now work with Dropbox API v2.

Now that everything is installed and set up correctly, we can move on to actually building our home surveillance and motion detection system using Python and OpenCV.

So here’s our setup:

As I mentioned last week, my goal for this home surveillance system is to catch anyone who tries to sneak into my refrigerator and nab one of my beers.

To accomplish this I have set up a Raspberry Pi + camera on top of my kitchen cabinets:

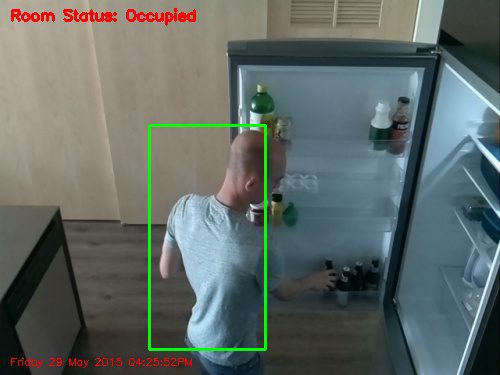

Which then looks down towards the refrigerator and front door of my apartment:

If anyone tries to open the refrigerator door and grab one of my beers, the motion detection code will kick in, upload a snapshot of the frame to my Dropbox, and allow me to catch them red-handed.

DIY: Home surveillance and motion detection with the Raspberry Pi, Python, and OpenCV

Alright, so let’s go ahead and start working on our Raspberry Pi home surveillance system. We’ll start by taking a look at the directory structure of our project:

```
|--- pi_surveillance.py
|--- conf.json
|--- pyimagesearch
|    |--- __init__.py
|    |--- tempimage.py
```

Our main home surveillance code and logic will be stored in pi_surveillance.py. And instead of using command line arguments or hardcoding values inside the pi_surveillance.py file, we’ll instead use a JSON configuration file named conf.json.

For projects like these, I really find it useful to break away from command line arguments and simply rely on a JSON configuration file. There comes a time when you just have too many command line arguments and it’s just as easy and more tidy to utilize a JSON file.

Finally, we’ll define a pyimagesearch package for organization purposes, which will house a single class, TempImage, which we’ll use to temporarily write images to disk before they are shipped off to Dropbox.

So with the directory structure of our project in mind, open up a new file, name it pi_surveillance.py, and start by importing the following packages:

```python
# import the necessary packages
from pyimagesearch.tempimage import TempImage
from picamera.array import PiRGBArray
from picamera import PiCamera
import argparse
import warnings
import datetime
import dropbox
import imutils
import json
import time
import cv2

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-c", "--conf", required=True,
    help="path to the JSON configuration file")
args = vars(ap.parse_args())

# filter warnings, load the configuration and initialize the Dropbox
# client
warnings.filterwarnings("ignore")
conf = json.load(open(args["conf"]))
client = None
```

Wow, that’s quite a lot of imports, much more than we normally use on the PyImageSearch blog. The first import statement simply imports our TempImage class from the pyimagesearch package. Lines 3-4 import classes from picamera that will allow us to access the raw video stream of the Raspberry Pi camera (which you can read more about here). And then Line 8 imports the Dropbox API. The remaining import statements round off the other packages we’ll need. Again, if you have not already installed imutils, you’ll need to do that before continuing with this tutorial.

Lines 15-18 handle parsing our command line arguments. All we need is a single switch, --conf , which is the path to where our JSON configuration file lives on disk.

Line 22 tells Python to ignore warnings; in practice this silences the ones generated by the urllib3 and dropbox packages. And lastly, we’ll load our JSON configuration dictionary from disk on Line 23 and initialize our Dropbox client to None on Line 24 (it is only created later if Dropbox is enabled).
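If you want to poke at the argument parsing and config loading without a Pi attached, the sketch below mirrors Lines 15-23 but passes an explicit argument list to parse_args and reads the JSON from a temporary file. The temporary-file setup and the three sample keys are assumptions made only for illustration; it also uses a with block so the file handle is closed, which the one-liner json.load(open(...)) leaves to the garbage collector.

```python
import argparse
import json
import os
import tempfile

# build the same single-switch parser used by pi_surveillance.py
ap = argparse.ArgumentParser()
ap.add_argument("-c", "--conf", required=True,
    help="path to the JSON configuration file")

# write a tiny stand-in conf.json to a temporary location (illustration only)
sample = {"show_video": False, "use_dropbox": False, "min_area": 5000}
fd, conf_path = tempfile.mkstemp(suffix=".json")
with os.fdopen(fd, "w") as f:
    json.dump(sample, f)

# parse an explicit argument list instead of sys.argv; vars() turns the
# Namespace into a dict keyed by the option's dest name ("conf")
args = vars(ap.parse_args(["--conf", conf_path]))

# json.load maps JSON true/false to True/False and numbers to int/float
with open(args["conf"]) as f:
    conf = json.load(f)

os.remove(conf_path)
print(conf["min_area"], conf["use_dropbox"])  # → 5000 False
```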

Our JSON configuration file

Before we go any further, let’s take a look at our conf.json file:

```json
{
    "show_video": true,
    "use_dropbox": true,
    "dropbox_access_token": "YOUR_DROPBOX_KEY",
    "dropbox_base_path": "YOUR_DROPBOX_PATH",
    "min_upload_seconds": 3.0,
    "min_motion_frames": 8,
    "camera_warmup_time": 2.5,
    "delta_thresh": 5,
    "resolution": [640, 480],
    "fps": 16,
    "min_area": 5000
}
```

This JSON configuration file stores a bunch of important variables. Let’s look at each of them:

- show_video: A boolean indicating whether or not the video stream from the Raspberry Pi should be displayed to our screen.

- use_dropbox: A boolean indicating whether or not the Dropbox API integration should be used.

- dropbox_access_token: Your Dropbox API access token (keep it private).

- dropbox_base_path: The name of your Dropbox App directory that will store uploaded images.

- min_upload_seconds: The number of seconds to wait between uploads. For example, if an image was uploaded to Dropbox 5m 33s after starting our script, a second image would not be uploaded until 5m 36s. This parameter simply controls the frequency of image uploads.

- min_motion_frames: The minimum number of consecutive frames containing motion before an image can be uploaded to Dropbox.

- camera_warmup_time: The number of seconds to allow the Raspberry Pi camera module to “warm up” and calibrate.

- delta_thresh: The minimum absolute difference between our current frame and the averaged frame for a given pixel to be “triggered” as motion. Smaller values will lead to more motion being detected, larger values to less motion detected.

- resolution: The width and height of the video frame from our Raspberry Pi camera.

- fps: The desired frames per second from our Raspberry Pi camera.

- min_area: The minimum area (in pixels) of a region for it to be considered motion. Smaller values will lead to more areas marked as motion, whereas higher values of min_area will only mark larger regions as motion.
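Because the script indexes conf directly, a missing or misspelled key only shows up as a KeyError partway through a run. Below is a minimal sketch of an up-front check, assuming you want to fail fast before the camera starts; validate_conf, CORE_KEYS, and DROPBOX_KEYS are hypothetical names, not part of the original code. It only checks shape and types, so it will not catch the placeholder Dropbox values.

```python
import json

# keys the script always reads, with the Python types json.load produces
CORE_KEYS = {
    "show_video": bool,
    "use_dropbox": bool,
    "min_upload_seconds": (int, float),
    "min_motion_frames": int,
    "camera_warmup_time": (int, float),
    "delta_thresh": int,
    "resolution": list,
    "fps": int,
    "min_area": int,
}

# keys that only matter when use_dropbox is true
DROPBOX_KEYS = {
    "dropbox_access_token": str,
    "dropbox_base_path": str,
}

def validate_conf(conf):
    """Return a list of problems; an empty list means the config looks usable."""
    expected = dict(CORE_KEYS)
    if conf.get("use_dropbox"):
        expected.update(DROPBOX_KEYS)

    problems = []
    for key, types in expected.items():
        if key not in conf:
            problems.append("missing key: {}".format(key))
        elif not isinstance(conf[key], types):
            problems.append("wrong type for {}: {}".format(key, type(conf[key]).__name__))

    # resolution is converted with tuple(...) and used as (width, height)
    res = conf.get("resolution")
    if isinstance(res, list) and len(res) != 2:
        problems.append("resolution should be [width, height]")
    return problems

conf = json.loads("""{
    "show_video": true, "use_dropbox": true,
    "dropbox_access_token": "YOUR_DROPBOX_KEY", "dropbox_base_path": "YOUR_DROPBOX_PATH",
    "min_upload_seconds": 3.0, "min_motion_frames": 8, "camera_warmup_time": 2.5,
    "delta_thresh": 5, "resolution": [640, 480], "fps": 16, "min_area": 5000
}""")
print(validate_conf(conf))  # → []
```

Splitting the Dropbox keys out means a config with "use_dropbox": false can leave the token and path as null without being flagged.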

Now that we have defined all of the variables in our conf.json configuration file, we can get back to coding.

Integrating with Dropbox

If we want to integrate with the Dropbox API, we first need to set up our client:

```python
# check to see if Dropbox should be used
if conf["use_dropbox"]:
    # connect to Dropbox using the access token from our configuration
    client = dropbox.Dropbox(conf["dropbox_access_token"])
    print("[SUCCESS] dropbox account linked")
```

On Line 27 we make a check to our JSON configuration to see if Dropbox should be used or not. If it should, Line 29 creates a Dropbox client authorized with our access token.

At this point it is important that you have edited the configuration file with your access token and base path. To get an access token, create an app on the Dropbox app creation page. Once your app is created, you can generate an access token under the OAuth section of the app’s page on the App Console (simply click the “Generate” button and copy/paste the token into the configuration file).
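Two things are easy to get wrong here: leaving the YOUR_DROPBOX_KEY placeholder in conf.json, and not knowing what remote path the upload code will build from dropbox_base_path and the timestamp string. The sketch below checks for the placeholders before any client is created and reproduces the path format used in the upload code; dropbox_settings_ok, upload_path, and the "pi_security" folder name are hypothetical, and nothing here contacts Dropbox.

```python
import datetime

# placeholder values shipped in the sample conf.json
PLACEHOLDERS = {"YOUR_DROPBOX_KEY", "YOUR_DROPBOX_PATH"}

def dropbox_settings_ok(conf):
    """True if Dropbox is disabled, or enabled with non-empty, non-placeholder settings."""
    if not conf.get("use_dropbox"):
        return True
    token = conf.get("dropbox_access_token")
    base = conf.get("dropbox_base_path")
    if not token or not base:
        return False
    return token not in PLACEHOLDERS and base not in PLACEHOLDERS

def upload_path(base_path, timestamp):
    """Build the remote file path the same way the upload code does."""
    ts = timestamp.strftime("%A %d %B %Y %I:%M:%S%p")
    return "/{base_path}/{timestamp}.jpg".format(base_path=base_path, timestamp=ts)

print(dropbox_settings_ok({"use_dropbox": True,
                           "dropbox_access_token": "YOUR_DROPBOX_KEY",
                           "dropbox_base_path": "pi_security"}))  # → False

# day and month names come from the C locale unless the program changes it
print(upload_path("pi_security", datetime.datetime(2015, 5, 30, 10, 15, 33)))
# → /pi_security/Saturday 30 May 2015 10:15:33AM.jpg
```

Note that the file name contains spaces and colons, since it is built directly from the on-frame timestamp.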

Home surveillance and motion detection with the Raspberry Pi

Alright, now we can finally start performing some computer vision and image processing.

```python
# initialize the camera and grab a reference to the raw camera capture
camera = PiCamera()
camera.resolution = tuple(conf["resolution"])
camera.framerate = conf["fps"]
rawCapture = PiRGBArray(camera, size=tuple(conf["resolution"]))

# allow the camera to warmup, then initialize the average frame, last
# uploaded timestamp, and frame motion counter
print("[INFO] warming up...")
time.sleep(conf["camera_warmup_time"])
avg = None
lastUploaded = datetime.datetime.now()
motionCounter = 0
```

We set up our raw capture to the Raspberry Pi camera on Lines 33-36 (for more information on accessing the Raspberry Pi camera, you should read this blog post).

We’ll also allow the Raspberry Pi camera module to warm up for a few seconds, ensuring that the sensors are given enough time to calibrate. Finally, we’ll initialize the average background frame, along with some bookkeeping variables on Lines 42-44.

Let’s start looping over frames directly from our Raspberry Pi video stream:

```python
# capture frames from the camera
for f in camera.capture_continuous(rawCapture, format="bgr", use_video_port=True):
    # grab the raw NumPy array representing the image and initialize
    # the timestamp and occupied/unoccupied text
    frame = f.array
    timestamp = datetime.datetime.now()
    text = "Unoccupied"

    # resize the frame, convert it to grayscale, and blur it
    frame = imutils.resize(frame, width=500)
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    gray = cv2.GaussianBlur(gray, (21, 21), 0)

    # if the average frame is None, initialize it
    if avg is None:
        print("[INFO] starting background model...")
        avg = gray.copy().astype("float")
        rawCapture.truncate(0)
        continue

    # accumulate the weighted average between the current frame and
    # previous frames, then compute the difference between the current
    # frame and running average
    cv2.accumulateWeighted(gray, avg, 0.5)
    frameDelta = cv2.absdiff(gray, cv2.convertScaleAbs(avg))
```

The code here should look pretty familiar if you read last week’s post on building a basic motion detection system.

We pre-process our frame a bit by resizing it to have a width of 500 pixels, converting it to grayscale, and applying a Gaussian blur, which removes high-frequency noise and allows us to focus on the “structural” objects of the image.
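Since min_area is measured on the resized frame, it helps to know what size that frame actually is. Here is the aspect-ratio arithmetic as a plain-Python sketch; resized_dims is a hypothetical helper, and it assumes imutils.resize, when given only a width, scales the height by the same ratio and truncates it to an integer.

```python
def resized_dims(orig_w, orig_h, width):
    """Return (new_w, new_h) when resizing to a fixed width while keeping the aspect ratio."""
    r = width / float(orig_w)
    return width, int(orig_h * r)

# conf.json asks the camera for 640 x 480, and the loop resizes to width=500
new_w, new_h = resized_dims(640, 480, 500)
print(new_w, new_h)  # → 500 375

# min_area = 5000 as a percentage of the resized frame's pixels
print(round(100 * 5000 / (new_w * new_h), 1))  # → 2.7
```

So with the default settings, a region has to cover roughly 2.7% of the processed frame before it counts as motion.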

On Line 60 we make a check to see if the avg frame has been initialized or not. If not, we initialize it as the current frame.

Lines 69 and 70 are really important and where we start to deviate from last week’s implementation.

In our previous motion detection script we made the assumption that the first frame of our video stream would be a good representation of the background we wanted to model. For that particular example, this assumption worked well enough.

But this assumption is also easily broken. As the time of day changes (and lighting conditions change), and as new objects are introduced into our field of view, our system will falsely detect motion where there is none!

To combat this, we instead take the weighted mean of previous frames along with the current frame. This means that our script can dynamically adjust to the background, even as the time of day changes along with the lighting conditions. This is still quite basic and not a “perfect” method to model the background versus foreground, but it’s much better than the previous method.
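The per-pixel update cv2.accumulateWeighted performs is avg = (1 - alpha) * avg + alpha * src, with alpha = 0.5 in the loop above. The NumPy sketch below implements that formula directly (it is a stand-in, not OpenCV's implementation; accumulate_weighted is a hypothetical name) to show how a sudden, lasting brightness change is absorbed into the background over a few frames.

```python
import numpy as np

def accumulate_weighted(src, avg, alpha):
    """NumPy stand-in for cv2.accumulateWeighted: updates the float array avg in place."""
    avg *= (1.0 - alpha)
    avg += alpha * src
    return avg

# the background model sits at brightness 100; then the lights come on and the scene is 180
avg = np.full((2, 2), 100.0)
bright = np.full((2, 2), 180, dtype=np.uint8)

history = []
for _ in range(5):
    accumulate_weighted(bright, avg, 0.5)
    history.append(float(avg[0, 0]))

print(history)  # → [140.0, 160.0, 170.0, 175.0, 177.5]
```

With alpha = 0.5 the gap to the new lighting level halves every frame, so after five frames it is 2.5, below the delta_thresh of 5. A smaller alpha adapts more slowly but is less likely to absorb a person who stands still for a moment.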

Based on the weighted average of frames, we then subtract the weighted average from the current frame, leaving us with what we call a frame delta:

delta = |background_model – current_frame|
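In code, that difference is the frameDelta line in the loop: avg is a float array, so it is first converted back to 8-bit with cv2.convertScaleAbs before cv2.absdiff. If you reproduce the delta in plain NumPy (a sketch, not OpenCV code), note that subtracting two uint8 arrays wraps around instead of going negative, so cast to a wider signed type first.

```python
import numpy as np

gray = np.array([[10, 200]], dtype=np.uint8)
avg = np.array([[12.4, 190.6]])  # the running average is kept as float

# rough equivalent of cv2.convertScaleAbs(avg): round, take |x|, saturate to 0..255
bg = np.clip(np.rint(np.abs(avg)), 0, 255).astype(np.uint8)

# naive uint8 subtraction wraps around: 10 - 12 becomes 254
naive = gray - bg

# widen to int16 before subtracting, then take the absolute value (like cv2.absdiff)
delta = np.abs(gray.astype(np.int16) - bg.astype(np.int16)).astype(np.uint8)

print(bg.tolist(), naive.tolist(), delta.tolist())
# → [[12, 191]] [[254, 9]] [[2, 9]]
```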

We can then threshold this delta to find regions of our image that contain substantial difference from the background model — these regions thus correspond to “motion” in our video stream:

```python
    # threshold the delta image, dilate the thresholded image to fill
    # in holes, then find contours on thresholded image
    thresh = cv2.threshold(frameDelta, conf["delta_thresh"], 255,
        cv2.THRESH_BINARY)[1]
    thresh = cv2.dilate(thresh, None, iterations=2)
    cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL,
        cv2.CHAIN_APPROX_SIMPLE)
    cnts = imutils.grab_contours(cnts)

    # loop over the contours
    for c in cnts:
        # if the contour is too small, ignore it
        if cv2.contourArea(c) < conf["min_area"]:
            continue

        # compute the bounding box for the contour, draw it on the frame,
        # and update the text
        (x, y, w, h) = cv2.boundingRect(c)
        cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        text = "Occupied"

    # draw the text and timestamp on the frame
    ts = timestamp.strftime("%A %d %B %Y %I:%M:%S%p")
    cv2.putText(frame, "Room Status: {}".format(text), (10, 20),
        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)
    cv2.putText(frame, ts, (10, frame.shape[0] - 10), cv2.FONT_HERSHEY_SIMPLEX,
        0.35, (0, 0, 255), 1)
```

To find regions in the image that pass the thresholding test, we simply apply contour detection. We then loop over each of these contours individually (Line 82) and see if they pass the min_area test (Lines 84 and 85). If a region is large enough, then we can indicate that we have indeed found motion in our current frame.

Lines 89-91 then compute the bounding box of the contour, draw the box around the motion, and update our text variable.

Finally, Lines 94-98 take our current timestamp and status text and draw them both on our frame.
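The threshold, area test, and bounding box can be illustrated without OpenCV. The NumPy sketch below is a deliberate simplification: it skips dilation, treats every above-threshold pixel as one region, and uses the pixel count as that region's area, whereas the real code uses cv2.findContours to separate regions and cv2.contourArea to measure each one. The helper name motion_box is hypothetical.

```python
import numpy as np

def motion_box(frame_delta, delta_thresh, min_area):
    """Return (x, y, w, h) around all changed pixels, or None if too few changed.

    Simplified stand-in for threshold + findContours + contourArea + boundingRect:
    every pixel above the threshold is treated as part of a single region.
    """
    # THRESH_BINARY semantics: 255 where delta > thresh, otherwise 0
    thresh = np.where(frame_delta > delta_thresh, 255, 0).astype(np.uint8)
    ys, xs = np.nonzero(thresh)
    if len(xs) < min_area:
        return None
    x, y = int(xs.min()), int(ys.min())
    return x, y, int(xs.max()) - x + 1, int(ys.max()) - y + 1

delta = np.zeros((375, 500), dtype=np.uint8)  # same size as the resized frame
delta[100:200, 150:250] = 40                  # a 100 x 100 patch of change: 10,000 pixels
delta[10, 10] = 5                             # equal to delta_thresh, so NOT marked (strictly >)
print(motion_box(delta, delta_thresh=5, min_area=5000))  # → (150, 100, 100, 100)
```

A 50 x 50 patch (2,500 pixels) would return None with min_area = 5000, which is the same "too small, ignore it" decision the contour loop makes.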

Now, let’s create the code to handle uploading to Dropbox:

```python
    # check to see if the room is occupied
    if text == "Occupied":
        # check to see if enough time has passed between uploads
        if (timestamp - lastUploaded).total_seconds() >= conf["min_upload_seconds"]:
            # increment the motion counter
            motionCounter += 1

            # check to see if the number of frames with consistent motion is
            # high enough
            if motionCounter >= conf["min_motion_frames"]:
                # check to see if dropbox should be used
                if conf["use_dropbox"]:
                    # write the image to temporary file
                    t = TempImage()
                    cv2.imwrite(t.path, frame)

                    # upload the image to Dropbox and cleanup the temporary image
                    print("[UPLOAD] {}".format(ts))
                    path = "/{base_path}/{timestamp}.jpg".format(
                        base_path=conf["dropbox_base_path"], timestamp=ts)
                    client.files_upload(open(t.path, "rb").read(), path)
                    t.cleanup()

                # update the last uploaded timestamp and reset the motion
                # counter
                lastUploaded = timestamp
                motionCounter = 0

    # otherwise, the room is not occupied
    else:
        motionCounter = 0
```

We make a check on Line 101 to see if we have indeed found motion in our frame. If so, we make another check on Line 103 to ensure that enough time has passed between now and the previous upload to Dropbox — if enough time has indeed passed, we’ll increment our motion counter.

If our motion counter reaches a sufficient number of consecutive frames (Line 109), we’ll then write our image to disk using the TempImage class, upload it via the Dropbox API, and then reset our motion counter and last uploaded timestamp.

If motion is not found in the room (Lines 129 and 130), we simply reset our motion counter to 0.
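To check the upload bookkeeping without a camera, the per-frame logic can be pulled into a pure function. This is a refactoring sketch rather than the script's own structure: upload_step and its (upload, last_uploaded, counter) return value are hypothetical. It uses timedelta.total_seconds(), because timedelta.seconds holds only the seconds component and ignores whole days.

```python
import datetime

def upload_step(occupied, now, last_uploaded, counter, min_upload_seconds, min_motion_frames):
    """Advance the upload state by one frame.

    Returns (upload, last_uploaded, counter); upload says whether this frame
    should be written to disk and sent to Dropbox.
    """
    if not occupied:
        # any unoccupied frame resets the consecutive-motion counter
        return False, last_uploaded, 0
    if (now - last_uploaded).total_seconds() < min_upload_seconds:
        # too soon after the previous upload: leave the state unchanged
        return False, last_uploaded, counter
    counter += 1
    if counter >= min_motion_frames:
        # enough consecutive motion: upload, then reset the bookkeeping
        return True, now, 0
    return False, last_uploaded, counter

# simulate 20 occupied frames at 16 fps, starting 5 seconds after launch
start = datetime.datetime(2015, 5, 30, 10, 0, 0)
last, counter, uploads = start, 0, []
for i in range(20):
    now = start + datetime.timedelta(seconds=5 + i / 16.0)
    upload, last, counter = upload_step(True, now, last, counter, 3.0, 8)
    if upload:
        uploads.append(i)

print(uploads)  # → [7]
```

With min_motion_frames = 8, the eighth consecutive occupied frame (index 7) triggers the upload, and the next one cannot happen until at least 3 seconds later, which is beyond the 20 simulated frames.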

Finally, let’s wrap up this script by handling whether we want to display the security stream to our screen:

```python
    # check to see if the frames should be displayed to screen
    if conf["show_video"]:
        # display the security feed
        cv2.imshow("Security Feed", frame)
        key = cv2.waitKey(1) & 0xFF

        # if the `q` key is pressed, break from the loop
        if key == ord("q"):
            break

    # clear the stream in preparation for the next frame
    rawCapture.truncate(0)
```

Again, this code is quite self-explanatory. We make a check to see if we are supposed to display the video stream to our screen (based on our JSON configuration), and if we are, we display the frame and check for a key-press used to terminate the script.
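The & 0xFF in the key check keeps only the low 8 bits of the value cv2.waitKey returns. On some platforms and OpenCV builds, waitKey has been reported to return codes with extra high bits set (for example when modifier keys are active), and it returns -1 when no key is pressed. Here is a pure-Python illustration with hypothetical raw values; key_pressed is a made-up helper name.

```python
def key_pressed(raw_key):
    """Mask a cv2.waitKey-style return value down to its low byte, as the loop does."""
    return raw_key & 0xFF

# hypothetical raw values: no key pressed, a plain 'q', and 'q' with an extra high bit set
for raw in (-1, ord("q"), 0x100000 | ord("q")):
    print(raw, key_pressed(raw), key_pressed(raw) == ord("q"))
# → -1 255 False
# → 113 113 True
# → 1048689 113 True
```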

As a matter of completeness, let’s also define the TempImage class in our pyimagesearch/tempimage.py file:

```python
# import the necessary packages
import uuid
import os

class TempImage:
    def __init__(self, basePath="./", ext=".jpg"):
        # construct the file path
        self.path = "{base_path}/{rand}{ext}".format(base_path=basePath,
            rand=str(uuid.uuid4()), ext=ext)

    def cleanup(self):
        # remove the file
        os.remove(self.path)
```

This class simply constructs a random filename on Lines 8 and 9, followed by providing a cleanup method to remove the file from disk once we are finished with it.
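In the upload code, t.cleanup() only runs if files_upload succeeds, so a network error would leave a stray JPEG on the SD card. One way to guarantee removal, sketched here as an alternative rather than the original design, is to wrap TempImage in a context manager. temp_image is a hypothetical helper, and the class body is repeated so the block runs on its own.

```python
import contextlib
import os
import tempfile
import uuid

class TempImage:
    def __init__(self, basePath="./", ext=".jpg"):
        # construct a random file path
        self.path = "{base_path}/{rand}{ext}".format(base_path=basePath,
            rand=str(uuid.uuid4()), ext=ext)

    def cleanup(self):
        # remove the file
        os.remove(self.path)

@contextlib.contextmanager
def temp_image(basePath="./", ext=".jpg"):
    """Yield a TempImage and remove its file afterwards, even if an exception occurs."""
    t = TempImage(basePath=basePath, ext=ext)
    try:
        yield t
    finally:
        # the file may never have been written (e.g. if imwrite failed)
        if os.path.exists(t.path):
            t.cleanup()

# usage: simulate a failed upload inside a scratch directory
scratch = tempfile.mkdtemp()
try:
    with temp_image(basePath=scratch) as t:
        with open(t.path, "wb") as f:
            f.write(b"not really a jpeg")  # stands in for cv2.imwrite(t.path, frame)
        written = os.path.exists(t.path)
        raise RuntimeError("simulated Dropbox upload failure")
except RuntimeError:
    pass

print(written, os.listdir(scratch))  # → True []
```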

Raspberry Pi Home Surveillance

We’ve made it this far. Let’s see our Raspberry Pi + Python + OpenCV + Dropbox home surveillance system in action. Simply navigate to the source code directory for this post and execute the following command:

$ python pi_surveillance.py --conf conf.json

Depending on the contents of your conf.json file, your output will (likely) look quite different from mine. As a quick refresher from earlier in this post, I have my Raspberry Pi + camera mounted to the top of my kitchen cabinets, looking down at my kitchen and refrigerator, just monitoring and waiting for anyone who tries to steal any of my beers.

Here’s an example of video being streamed from my Raspberry Pi to my MacBook via X11 forwarding, which will happen when you set show_video: true:

In this video, I have disabled the video stream and enabled the Dropbox API integration via use_dropbox: true. We can see the results of motion being detected in images and the results sent to my personal Dropbox account:

Here are some example frames that the home surveillance system captured after running all day:

And in this one you can clearly see me reaching for a beer in the refrigerator:

If you’re wondering how you can make this script start each time your Pi powers up without intervention, see my post on Running a Python + OpenCV script on reboot.

Given my rant from last week, this home surveillance system should easily be able to capture James if he tries to steal my beers again, and this time I’ll have conclusive proof from the frames uploaded to my personal Dropbox account.


Summary

In this blog post we explored how to use Python + OpenCV + Dropbox + a Raspberry Pi and camera module to create our own personal home surveillance system.

We built upon our previous example on basic motion detection from last week and extended it to (1) be slightly more robust to changes in the background environment, (2) work with our Raspberry Pi, and (3) integrate with the Dropbox API so we can have our home surveillance footage uploaded directly to our account for instant viewing.

This has been a great 2-part series on motion detection, and I really hope you enjoyed it. But we’re honestly only scratching the surface on motion detection/background subtraction; this will most certainly not be the last time we cover it on the PyImageSearch blog. So if you want to keep up to date regarding new posts on PyImageSearch, I would definitely recommend signing up for the PyImageSearch Newsletter at the bottom of this page.

And finally, if you enjoyed this tutorial, please consider sharing it with others!


Your projects are awesome. They inspire me.

Keep it up!

Thanks Cristian!

so do we need the wifi dongle to make this work or what?

I am just curious

The Raspberry Pi 2 would require a WiFi dongle. The Pi 3 ships with WiFi built-in. Otherwise you could use an ethernet cable. Please note that an internet connection is only required if you want to upload individual frames to Dropbox.

I got a problem: after it prints “[INFO] warming up...”, it shows “Illegal instruction”. I don’t know why.

How are you accessing your webcam? Is it a USB webcam? Or an RPi camera module?

I’m doing a human detection project on a Raspberry Pi 4. Will this work? Can I apply your code for human detection? I will use a Raspberry Pi Model B and a PiCamera with infrared (night vision).

Hey Adrian-

What version Pi are you using? What is the oldest version Pi that you think could be used for this project? I would envision trying to set up multiple cameras at my home (I think 4, for the number of entry points into my home) and as a DIY solution I would try to get the oldest/cheapest version Pi that would still be effective 24/7.

Thanks for posting this! It inspires me to get involved with projects like this!

Hey Andy, no worries — I updated your previous comment so it reads correctly 🙂

I am using the Raspberry Pi 2 for this project. You might be able to get away with the B+ for this, but I would really recommend against it. The Pi 2 is substantially faster (4 cores and 1GB of RAM) and is well worth it. In reality, a Pi 2 will cost you $35, plus a camera module for each, so you’re probably looking at $60 per system, which isn’t too bad.

Thanks for the great tutorial. It’s very informative and very well explained.

Thanks so much Berkay, I’m glad you found it helpful! 🙂

As always a great tutorial.

Though instead of copy-pasting the url for the dropbox auth, you could let python handle that as well with webbrowser.open()

🙂

Good point. I was using X11 at that point, so launching a browser over X11, even on a local network, can be quite slow, hence I went with the copy-and-paste solution. Definitely not the most elegant solution, but it worked!

Hi Adrian,

This is a great post!

Recently I have been trying to make a little surveillance system and have read plenty of your tutorials.

I have a rough idea: 1) use the frame difference method you mentioned here and last week to initialize the search area; 2) run OpenCV’s default HOG human detection method around the initialized area and use the bounding boxes of the detected people as the input boxes for step 3; 3) use the dlib library correlation tracker to track the detected people.

Will this work or not, and are there any suggestions to improve the surveillance performance to make it robust?

Thanks again for your wonderful tutorials!

Hi Alex — thanks so much, I’m glad you enjoyed the post!

So in general, the solution to your surveillance system will depend on (1) what gives you the best results, and (2) how complicated you want to make it. Using HOG requires training your own custom object detector, which can be non-trivial, especially if you are just getting started in computer vision and machine learning. Furthermore, this classifier would only really work to detect what you trained it to detect; it wouldn’t be able to detect true “motion” for arbitrary objects in a video stream.

That said, I think you’re on the right track. You want to use some basic motion detection, followed by more advanced methods for tracking the bounding box. I don’t think dlib’s correlation tracker has Python bindings, but I know this one for OpenCV does.

Dlib just added a Python binding for correlation tracker, see https://github.com/davisking/dlib/blob/master/python_examples/correlation_tracker.py

Nice, thanks for passing this along Tim! I’m really excited to play around with it.

Adrian and everyone else here. Does anyone know of sample applications for installing and then calling either DLIB or the MOSSE.PY mentioned above? I’m ready to get the tracking side of this working well. I have detection working pretty well, but now need to go to the next level.

When I run the MOSSE.PY standalone, it never seems to allow me to draw the rectangles needed to track an object. I’d like to pass an object to it for tracking, but am not sure how.

And on DLIB, I am not sure how to best install into the virtual environment being used here (Workon CV).

Adrian, please write a new blog post on this!!!! (grin)

If anyone has experience, please comment. Thanks

I’ve used the dlib tracker with success in many applications. I’ll add a post on installing dlib, along with a post on how to track with dlib, to my queue.

Is it possible to extend it to a night surveillance camera as well? Using this night vision camera? Without adding more custom code?

http://s.aliexpress.com/rM3eqaa2

Hi Alex, I have made a similar project. With some modifications, I combined Adrian’s scripts with the OpenCV peopledetect.py sample. I perform HOG human detection when contours are found (contours > 0). It needs 2-3 seconds to get a result from HOG human detection for every frame loop. Not very efficient, but it’s enough for my case. I use a Raspberry Pi 2 with a Pi Camera.

Sorry for my bad english.

I think your work will be helpful for me. If you can provide the sample code and the command, that would be great.

you can contact me on EMAIL REMOVED

Hi Adrian,

Thank you for your work, it’s very interesting.

If I don’t use the Pi Camera but a USB webcam instead, is this OK? How about a USB hub for multiple webcams?

Hi Quan, if you have a USB webcam you can certainly use this code. You’ll just need to modify the code that actually grabs the frames from the camera to use the cv2.VideoCapture function like in this post.

It looks like a lot of fun to play with (OK, it already cost me an extra Raspberry Pi 2B & cam … My A&B wouldn’t do it properly I think, and my 2B was already in use)… I even got this working, which is of course easy with this much detail… But instead of writing pictures, I would like to combine the “compromising” pictures into a video… either one for the whole running time or one for each “occupied session”.

It doesn’t look like I can use VideoWriter for rawCapture frames … Would there be an option? And if so, what function should I look at?

Hi Alain. Indeed, I would definitely suggest using the B+ or the Pi 2 for this example. You’ll get much better results with the Pi 2 since it’s much faster than the B+. As for your question, you can certainly use the cv2.VideoWriter. Take a look at Line 57: frame = f.array. The frame variable is simply a NumPy array which you can pass to the cv2.VideoWriter.

Thanks Adrian,

I found after I wrote the question, an example with the writing of ‘raw’ pictures … But not as clear as yours … I hope I find time to implement this in the next days (and follow the basic advice … RTFM :)) … But I don’t have enough network ports in my living room to do all I need to right now…

BR

Alain

OK … Found one of my errors already … I was trying to write “frame” to the file, but that one was modified already, and not writing at all … using f.array works, I now added a frame_2 (which is a copy of frame), so I could add the timestamp … but now play-time is over … Time to do something useful … but this will be continued…

Hi Alain, did you ever get this solved? I need to do the same thing – capture video of my dog when he’s playing around. I’ll be poking around but if you already have the code written, would love to borrow because I’m a python and rPi newbie.

I’m a beginner to the RPi, Python and OpenCV and find your blog posts really helpful! So thank you.

Maybe I am being silly, but is there anything that should be included in the project directory/pyimagesearch/__init__.py file?

Technically no. The __init__.py file indicates that the pyimagesearch directory is a Python package that can be imported into a script. There are special commands you can put in the __init__.py file, but its real purpose is to indicate to the Python interpreter that the directory is a package.

Aah, I see. Thanks for replying.

I’m going to take this further and try to implement a human detection/ known person recognition feature. I’ll do some more research.

The pi-in-the-sky goal would be getting some crude navigation going using computer vision for a RPi robot 🙂

Hi Adrian,

Again your work is amazing. I have a question: does the camera that you are using work at night?

Thanks for your time and work.

Hey Jose, thank you for such a kind compliment 🙂 The camera I am using does not work well at night. For that you’ll want an IR (infrared) camera.

Welp, got it working. You write wonderful tutorials. I just *really* need to pay attention to details. Is there any way to customize this script so that I don’t have to re-authenticate to the API every time?

Hey Mike, awesome job getting it working, I’m very excited for you! As for the re-authentication, I’m not sure about that one. I have really only used the Dropbox API for this particular example, so you might want to chat with a more experienced Dropbox developer.

I would like to know if you have ever tinkered with adding audio to the video or what recommendation you might have to address audio.

Hi Adrian, thanks for the great tutorial!

I am new to Raspberry Pi and have what is probably a really silly question. I keep getting the error “No JSON object could be decoded”, even though I have the complete conf.json file in the folder with pi_surveillance.py. Any ideas what I’m doing wrong? Any help would be greatly appreciated.

Hey Grant, that’s definitely quite the strange error message! Did you download the source code to this post or did you copy and paste it into your editor? There is a chance that the copying and pasting might introduce some extra characters. As for debugging the error, I think this StackOverflow thread should be helpful.

Thanks Adrian, I copy and pasted rather than downloading the source code. After downloading it, everything worked wonderfully! Thanks for the help!

Hi,

These are great tutorials!

I don’t understand what the rawCapture variable is for. It seems all the work is done with the frame variable taken from f.array. Do rawCapture and frame point to the same thing and rawCapture.truncate(0) is just used to clear it?

The rawCapture variable actually interfaces with the Raspberry Pi camera and determines the format of the image that is grabbed from the sensor (in this case, in BGR order). Without rawCapture, capture_continuous wouldn’t know how to grab the frame from the camera sensor.

Mr Adrian,

Thanks for this tutorial. When I run this program, it asks me to “Enter auth code here:”. What is this?

If you want to use the Dropbox API integration (so that images can be uploaded to your personal Dropbox account), you need to enter your Dropbox API credentials in the .json file, followed by supplying an authorization code. If you do not want to use the Dropbox API integration, just set the Dropbox variables in the .json file to null:

"dropbox_key": null,
"dropbox_secret": null,
"dropbox_base_path": null,

I have done both with and without Dropbox, but I am curious: is it possible to hard code the auth code so that I don’t have to use a web browser to start it every time?

Hey Robert, that’s a great question — the answer is that I’m honestly not sure. This project was the first time I had used the Dropbox API. I would check the Dropbox API documentation and look for alternative authorization methods.

Hey Robert,

This might be a few months too late, but I was having the same issue and figured out how to solve it.

1. The first step is to understand all of the codes that you’re getting from Dropbox.

When you paste the Dropbox link into your browser and enter your email and password, they give you an auth code, which is temporary and can only be used once. You enter this into the command line, and the code uses it to pull your access token and link to your account. The access token never changes, and this is what you need to use.

2. Now you need to find out what your access token actually is.

I did this by adding a line of code that says:

print accessToken

You’ll have to run the program again, copy the link into your browser, get the auth code, etc. Once you’ve done all that again, it should spit out your access token so you can save it.

3. Hard code the access token into the code.

Comment out the lines that reference the auth code (so you don’t have to deal with those dang auth codes any more)

Add in a line to define the access token.

Here’s what my final code looked like…

if conf["use_dropbox"]:
    # connect to dropbox and start the session authorization process
    flow = DropboxOAuth2FlowNoRedirect(conf["dropbox_key"], conf["dropbox_secret"])
    # print "[INFO] Authorize this application: {}".format(flow.start())
    # authCode = raw_input("Enter auth code here: ").strip()
    # finish the authorization and grab the Dropbox client
    # (accessToken, userID) = flow.finish(authCode)
    accessToken = ("Jcbiuehr2hfiuefoiuwerhf9377h4....")
    client = DropboxClient(accessToken)
    print "[SUCCESS] dropbox account linked"

Hope this helped!

Awesome, thanks for sharing Danny!

Hi Danny, I’m having the same issue. Where did you put:

print accessToken

Hi Michael – I put it at the end of this section of code so it looked like this:

if conf["use_dropbox"]:
    # connect to dropbox and start the session authorization process
    flow = DropboxOAuth2FlowNoRedirect(conf["dropbox_key"], conf["dropbox_secret"])
    # print "[INFO] Authorize this application: {}".format(flow.start())
    # authCode = raw_input("Enter auth code here: ").strip()
    # finish the authorization and grab the Dropbox client
    # (accessToken, userID) = flow.finish(authCode)
    accessToken = ("Jcbiuehr2hfiuefoiuwerhf9377h4....")
    client = DropboxClient(accessToken)
    print "[SUCCESS] dropbox account linked"
    print accessToken

Thanks for sharing Danny. In general, I would recommend commenting out that entire section, or even deleting it, if you do not want to use the Dropbox API.

Another way to get your access token is:

Go to your app’s info page

Scroll down to “Generated access token” and click it to obtain your access token. You will see a warning that says:

This access token can be used to access your account (your dropbox account) via the API. Don’t share your access token with anyone

Late to the party, actually here looking for something else, noticed you responded…forever ago. Thank you so much for this! It worked flawlessly! Excellent work my friend.

Hi Danny, I already did this, and it works, but only on the first run. After that, I cannot upload the captured image. Why? Any help? Thanks

Thanks, It saved my project.

Thanks so much

Hi Danny

Thanks for sharing. But when I use the same code as yours, I get this error message:

accessToken(“fdpNK91….”)

IndentationError: unexpected indent

Am I right to put my access token here?

And should I keep my Dropbox browser open?

Please make sure you are using the “Downloads” section of this blog post to download the source code. It seems that you are copying and pasting the code and likely introduced an indentation error.

Even after doing what you said above, I am still not able to run the program. It still asks for the auth code. What should I do now?

Please help.
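For the IndentationError reported above, Python's built-in compile() can check a pasted snippet for indentation and syntax problems without running it. This is a small diagnostic sketch; check_source is a hypothetical helper name. Note that the pasted line accessToken(“fdpNK91….”) would fail even with correct indentation: it is missing the = sign and uses curly quotes, which Python does not accept as string delimiters.

```python
def check_source(source, filename="<pasted>"):
    """Return None if `source` compiles, else a short description of the problem."""
    try:
        compile(source, filename, "exec")
    except SyntaxError as exc:  # IndentationError is a subclass of SyntaxError
        return "{}: {} (line {})".format(type(exc).__name__, exc.msg, exc.lineno)
    return None


good = 'accessToken = "abc123"\nprint(accessToken)\n'
bad_indent = 'accessToken = "abc123"\n    print(accessToken)\n'
curly_quotes = 'accessToken(\u201cabc123\u201d)\n'

print(check_source(good))          # None
print(check_source(bad_indent))    # IndentationError: unexpected indent (line 2)
print(check_source(curly_quotes))  # a SyntaxError about the invalid character
```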

Mr. Adrian,

Thank you for the tutorial I learned a lot.

I’m trying to create a system, using a Raspberry Pi, that will track people moving in a corridor and identify the ones spending too much time in the given area. Currently I’m thinking about using CamShift + Kalman filters. Can you give me some advice, please? It would be much appreciated. Thank you.

Obviously, the first step is to perform some sort of motion detection to determine where people are moving in the corridor. From there, I would probably suggest optical flow. A better choice could be correlation-based methods such as MOSSE. Once you have (1) detected the person and (2) started the tracking, it’s fairly trivial to start a timer to keep track of the amount of time a person spends in the corridor.

Mr. Adrian,

Thank you for your advice. So basically, what I have to do is use background subtraction to detect motion, and when people are detected, use a correlation-based method (MOSSE) to track them. Am I correct? And can I track multiple people using this method?

(I’m fairly new to this field.) thank you!!!

Yep, that’s the general idea! Correlation based methods require an initial bounding box, so you’ll utilize motion detection to grab that initial bounding box and then pass it on to your tracker, whether that’s optical flow, correlation, etc. And if you’re new to computer vision and OpenCV, I would definitely suggest taking a look at Practical Python and OpenCV + Case Studies, it will definitely help you jumpstart your computer vision education.

Mr. Adrian,

Thank you so much for your advice. I will definitely go through the links you provided. Keep up the good work. 🙂
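Once a tracker gives each person a stable ID, the timer Adrian describes is just bookkeeping. The sketch below assumes the IDs come from whatever tracker you choose (optical flow, MOSSE, dlib). DwellTimer and the 30-second limit are hypothetical. It forgets an ID the moment that ID disappears from a frame, so in practice you may want a short grace period to survive brief tracking dropouts.

```python
class DwellTimer:
    """Track how long each tracked object (by ID) has been continuously in view."""

    def __init__(self, max_seconds):
        self.max_seconds = max_seconds
        self.first_seen = {}  # object ID -> timestamp when it was first seen

    def update(self, visible_ids, now):
        """Record the IDs visible at time `now`; return IDs over the time limit."""
        # Forget objects that have left the corridor
        for obj_id in list(self.first_seen):
            if obj_id not in visible_ids:
                del self.first_seen[obj_id]
        # Start a timer for newly detected objects
        for obj_id in visible_ids:
            self.first_seen.setdefault(obj_id, now)
        return sorted(obj_id for obj_id, start in self.first_seen.items()
                      if now - start > self.max_seconds)


# In a real loop, `now` would come from time.time() on every frame
timer = DwellTimer(max_seconds=30)
timer.update({1, 2}, now=0.0)
timer.update({1, 2, 3}, now=20.0)
loiterers = timer.update({1, 3}, now=35.0)
print(loiterers)  # [1]
```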

Mr Adrian, I use a Logitech C615 webcam for better frame quality, but this code is written for the PiCamera. I changed line 5 from “from picamera.array import PiRGBArray” to “from camera.array import PiRGBArray” and got this error: “No module named camera.array”.

I know that the frame capture (line 54) needs to work properly. Please help me do this.

Regards.

If you are using a Logitech camera rather than the Raspberry Pi camera, then you will not be able to use the picamera module to access the frames of the video feed. Instead, you’ll have to use the cv2.VideoCapture function as detailed in this post.

Great tutorial. I’ve tried it at my office, and it works perfectly. Thanks Adrian.

I’m glad it worked for you Asha! 🙂

I am curious if it is possible to get this running headless. I have tried via SSH with the video not being displayed, but the program is shut down upon exiting the session. Could this be done via xrdp?

This should be possible to run headless provided your camera is connected. You could always SSH into the Pi, start the script, and then push it to the background so it’s still running before exiting your session. You could also start the script on reboot using a cronjob.

Dear Mr.Adrian,

Great tutorial. Thank you. I have a small request. Would you be able to do a small tracking example based on correlation filters, such as dlib or MOSSE, to track multiple objects? I have been trying to do so for a while now with no luck.

Absolutely! Doing a post on correlation filters is very, very high up on my priority list!

If I don’t want to store video in Dropbox, what changes do I need to make to the code?

This question has been addressed multiple times in the comments section. Please read the comments before posting. You simply need to comment out the dropbox import, the code used to connect to the Dropbox API, and the actual upload code.

If you don’t want the camera LED to be active, then add…

# camera led
disable_camera_led=1

to config.txt, and the LED will no longer be active.

Nice, thanks for the tip Scott!

Where is the config.txt file? Can this disable_camera_led variable go into the conf.json file?

It should be located in /boot/config.txt. There should also be an option in there that allows the LED to be disabled. Once you modify it, you’ll need to reboot your Pi. This configuration (since it’s a boot configuration) cannot go into the conf.json file.

This is awesome, great tip Scott!

Mr. Adrian,

After performing background subtraction, is there a way to create a “fixed size bounding box” instead of using the looping-over-contours method mentioned here, so it can be passed to the dlib tracker? Any advice would be a great help. Thank you.

Hey Nipuna, I’m not sure what you mean by a “fixed size bounding box”. If you have the initial bounding box that should be enough to pass into the dlib correlation tracker, no?

Sorry Mr. Adrian, my mistake. Yes, what I want to know is how to create the initial bounding box. Is using the contour method the only way, or is there another way to create the bounding box?

The actual bounding box is created via the cv2.findContours and cv2.boundingRect functions. If you can obtain the bounding box for an object you want to track, you can then pass it on to something like MOSSE or dlib without too much of an issue.

Thank you Mr. Adrian. Can I use this implementation of MOSSE

https://github.com/Itseez/opencv/blob/master/samples/python2/mosse.py

directly for tracking?

Yes, that implementation of MOSSE does indeed work.

Thank you Mr. Adrian. You have helped a lot. I looked at your Case Studies bundle and learned a lot in a short amount of time. I regret not having a look at it sooner; then I would have saved a lot of the time I spent searching the web about image processing. Thank you.

I’m glad myself and the Practical Python and OpenCV + Case Studies books were able to help! 🙂
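To make the bounding-box step from this thread concrete, here is a pure-Python stand-in for what cv2.boundingRect computes: the (x, y, w, h) of the smallest upright rectangle enclosing a set of foreground pixels. It only illustrates the arithmetic. In the actual script you would call cv2.boundingRect on each contour returned by cv2.findContours, which keeps separate moving objects in separate boxes, whereas this sketch boxes every non-zero pixel in the mask together. bounding_rect is a hypothetical helper name. The resulting tuple is the kind of initial box you would hand to a tracker; dlib's correlation tracker expects corner coordinates, so you would convert to (x, y, x + w, y + h) first, for example via dlib.rectangle.

```python
def bounding_rect(mask):
    """Return (x, y, w, h) of the smallest upright box around non-zero pixels.

    `mask` is a list of rows, e.g. a thresholded frame where 255 marks motion.
    Returns None when the mask has no foreground pixels.
    """
    xs, ys = [], []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    x0, y0 = min(xs), min(ys)
    # +1 because width and height count pixels inclusively
    return (x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1)


mask = [
    [0, 0, 0, 0, 0],
    [0, 255, 255, 0, 0],
    [0, 255, 255, 255, 0],
    [0, 0, 0, 0, 0],
]
print(bounding_rect(mask))  # (1, 1, 3, 2)
```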

Thanks a lot Adrian, this is a great tutorial and it helped me (as a python novice) immensely!

I integrated the flask html streaming from

http://www.chioka.in/python-live-video-streaming-example/

and

http://blog.miguelgrinberg.com/post/video-streaming-with-flask

and would like to share.

Very nice Martin! I had to remove the code from the bottom of the comment since the formatting got messed up. Can you please create a GitHub Gist for the code and link it by replying to this comment?

Hi Martin,

Do you have the code for this? I’m attempting to do the exact same thing, your code would help.

Thanks!

Great work on these tutorials, worked through the pi-camera and opencv installation and setup without a hitch.

I like your implementation of the dropbox oauth2 process, but made a small change that allows the generated access token to be stored in a text file or in the conf.json. Here’s the file on github for saving the token in the JSON: https://github.com/levybooth/pi_surveillance_auth/blob/master/pi_surveillance_auth.py

Note that I added: import os.path to the list of imports, and changed the path for saving the images on line 144.

Thanks again for your excellent courses – so far they’re the only walk-through of opencv with the pi camera module that actually worked for me.

David,

Thanks for the mods that allow permanent storage of the access token. Does it ever need to be refreshed or is it truly permanent once stored in conf.json?

Thanks

Andrew
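The idea behind storing the token boils down to “ask once, then reuse the stored token”. Here is a minimal standard-library sketch of that pattern. It is not the code from the linked file. get_access_token, fake_oauth, and the dropbox_access_token key are hypothetical; obtain_token stands in for the interactive step (print the authorize URL, read the auth code, exchange it). With the Dropbox V2 Python SDK, the stored token would then be passed to dropbox.Dropbox(token). As for Andrew's question, whether a stored token stays valid depends on how it was issued: newer Dropbox apps receive short-lived access tokens, so check the current Dropbox OAuth documentation on refresh tokens rather than assuming the token is permanent.

```python
import json
import os
import tempfile


def get_access_token(conf_path, obtain_token, key="dropbox_access_token"):
    """Return the token cached in conf_path, obtaining and caching it on first use."""
    with open(conf_path) as fh:
        conf = json.load(fh)

    token = conf.get(key)
    if token:
        return token  # already linked: no browser, no auth code

    token = obtain_token()
    conf[key] = token
    with open(conf_path, "w") as fh:
        json.dump(conf, fh, indent=4)
    return token


# Demo with a throwaway config file and a fake OAuth step
conf_path = os.path.join(tempfile.mkdtemp(), "conf.json")
with open(conf_path, "w") as fh:
    json.dump({"use_dropbox": True}, fh)

calls = []


def fake_oauth():
    calls.append(1)
    return "example-token"


first = get_access_token(conf_path, fake_oauth)
second = get_access_token(conf_path, fake_oauth)  # read from the file this time
print(first, second, len(calls))  # example-token example-token 1
```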

Hello Adrian,

While working through this code, I initially tried hardcoding the values in the JSON file (I also didn’t want to use Dropbox), and I kept getting a syntax error on line 139 (the if conf["show_video"] part). Then I tried writing the JSON part in IDLE, but I’m not sure if that’s the correct way to write a JSON file. I was wondering whether there is a workaround for the JSON file, and if not, how to write the JSON file properly.

Thank you in advance.

The easiest way to get around the JSON file is to just hardcode the values into the code. The JSON file is just meant to make configuration easier — but if you do not want any configuration (and no Dropbox), just hardcode the variables.

Secondly, I would suggest downloading the code for the post instead of writing it out line by line. Writing it out is a great exercise and something that can help you learn a new language or technique, but for this problem, it would be best to download the code and have a working “standard” that you can base your modifications on.
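If you do want to keep a JSON config, the safest way to produce one is to let Python's json module write it rather than typing it by hand. json.dump always emits valid JSON: lowercase true/false, null instead of None, double-quoted keys, and no trailing commas. Hand-written JSON that uses Python-style True/None or has a trailing comma is a common cause of load errors such as “No JSON object could be decoded”. The dictionary below is an illustrative subset that uses only keys mentioned in these comments; the conf.json in the download contains more settings.

```python
import json
import os
import tempfile

# Illustrative subset only; the real conf.json has additional settings
conf = {
    "show_video": True,
    "use_dropbox": False,
    "dropbox_key": None,
    "dropbox_secret": None,
    "dropbox_base_path": None,
}

# Write to a temporary directory so a real conf.json is never overwritten
conf_path = os.path.join(tempfile.mkdtemp(), "conf.json")
with open(conf_path, "w") as fh:
    json.dump(conf, fh, indent=4)  # True -> true, None -> null, keys double-quoted

with open(conf_path) as fh:
    text = fh.read()
loaded = json.loads(text)
print(text)
```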

Hello,

thanks for the project.

Is there a way to integrate Dropbox permanently, without requesting an auth code every time I start the program?

Hi Thomas, that’s a great question, thanks for asking. I honestly do not know the answer to that question off the top of my head. This project was the first time I had used the Dropbox API. I would suggest going through the Core API and seeing what other functions are available.

Thomas,

I used the dropbox-uploader script listed on this site. It maintains the API key so that you don’t have to request it each time: http://raspberrypitutorials.themichaelvieth.com/tutorials/raspberry-pi-surveillance-camera-dropbox-upload/

Hey Guys

I turned off Dropbox integration and am using the other Dropbox uploader script, how do I configure where the images are being saved? I have a shared folder on my desktop that is synced via cron regularly and would like them to go there.

Another great feature would be to have an email notification when motion is triggered. Can anyone give any tips on that? I’m new to Pi and Python 🙂

Thanks for the awesome tutorial and script btw Adrian 🙂
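On the email-notification question, here is a minimal sketch using only Python 3's standard library (email.message.EmailMessage and smtplib). build_motion_alert and send_alert are hypothetical helpers. The SMTP host, port, and credentials are yours to supply; many providers use port 587 with STARTTLS and require an app-specific password. Only the message-building half is exercised here, because send_alert needs a real server.

```python
import smtplib
from email.message import EmailMessage


def build_motion_alert(sender, recipient, timestamp, image_bytes=None):
    """Build (but do not send) an email announcing that motion was detected."""
    msg = EmailMessage()
    msg["Subject"] = "Motion detected at {}".format(timestamp)
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("The surveillance script saw motion at {}.".format(timestamp))
    if image_bytes is not None:
        # Attach the JPEG snapshot that triggered the alert
        msg.add_attachment(image_bytes, maintype="image", subtype="jpeg",
                           filename="motion.jpg")
    return msg


def send_alert(msg, host, port, username, password):
    """Send `msg` through an SMTP server that supports STARTTLS."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(username, password)
        server.send_message(msg)


msg = build_motion_alert("pi@example.com", "me@example.com",
                         "2015-06-01_14-05-09", image_bytes=b"\xff\xd8 placeholder")
print(msg["Subject"])
```

You would call these where the script handles an “Occupied” frame, passing JPEG bytes (for example cv2.imencode(".jpg", frame)[1].tobytes()). Because the room can stay “Occupied” for many consecutive frames, it is worth rate-limiting the alerts so your inbox isn't flooded.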

Thanks for the great tutorial!

I am having trouble getting the Python script to run as a cronjob. I would like it to run every single time the RPi reboots, without the aid of a monitor.

I have created a launcher.sh in my /home/pi directory.

——————————————————————–

#!/bin/bash

# launcher.sh

# activate the cv environment ,then execute the python script

cd /home/pi

source ~/.profile && workon cv

python /home/pi/pi_surveillance.py --conf conf.json

——————————————————————–

Then I added the reboot command as the last line of the crontab:

$ sudo crontab -e

——————————————————————–

@reboot bash /home/pi/launcher.sh

——————————————————————–

But to no avail; it gives this error:

stdin: is not a tty

Traceback (most recent call last):

File “/home/pi/pi_surveillance.py”, line 13, in

import imutils

File “/usr/local/lib/python2.7/dist-packages/imutils/__init__.py”, line 5, in$

from convenience import translate

File “/usr/local/lib/python2.7/dist-packages/imutils/convenience.py”, line 7,$

import cv2

ImportError: No module named cv2

Does anyone have any idea how to make it work? Thanks in advance.

Hey Darius, it looks like your cronjob is running as root, where the cv virtual environment does not work. You have two options to resolve this. The first is to create the cv virtual environment for the root user. The second option is to modify your launcher.sh script to switch to the pi user at the top of the file.

Thanks for the advice! But now I am facing another problem that I am not too sure about.

I have created a cron log for debugging purposes. Now, it reflects this instead:

Traceback (most recent call last):

File “/home/pi/pi_surveillance.py”, line 28, in

conf = json.load(open(args["conf"]))

IOError: [Errno 13] Permission denied: ‘conf.json’

I am pretty sure I have granted permission to all the files. Any solution will be much appreciated!! Thanks!

The user executing the script that is trying to access the conf.json file does not have permission to read it. You should use chown to change the file’s ownership to that user. A completely terrible hack would be to give full permissions to everyone on the file using chmod 777 conf.json. I would suggest reading up on Unix file permissions before proceeding any further.

The solution I adopted is to use the user’s crontab rather than root’s. That way the virtual environment can be initiated as if the user had logged in.

This small change to Darius’ method helped install a background process that survives reboot.

$ crontab -e -u pi

where pi is the user name. The rest of the steps are largely the same as his.

Nice, thanks for sharing Eli! 🙂
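When a script works from a terminal but fails under cron with “Permission denied” on conf.json, it helps to print exactly which file the process is trying to open and whether the current user can read it. Here is a small standard-library sketch (Unix-only because of os.getuid). explain_read_problem is a hypothetical helper name. Note that os.access checks against the real user ID, so a script run as root will see most files as readable.

```python
import os
import tempfile


def explain_read_problem(path, base_dir=None):
    """Return None if `path` is readable, else a short explanation of why not.

    Relative paths are resolved against base_dir (default: the current working
    directory), which under cron may not be the directory you expect.
    """
    base_dir = base_dir or os.getcwd()
    full = path if os.path.isabs(path) else os.path.join(base_dir, path)
    if not os.path.exists(full):
        return "{} does not exist".format(full)
    if not os.access(full, os.R_OK):
        return "{} exists but is not readable by uid {}".format(full, os.getuid())
    return None


demo_dir = tempfile.mkdtemp()
with open(os.path.join(demo_dir, "conf.json"), "w") as fh:
    fh.write("{}")

print(explain_read_problem("conf.json", base_dir=demo_dir))  # None
print(explain_read_problem("missing.json", base_dir=demo_dir))
```

Inside the script itself, resolving the config path relative to the script's own location, e.g. os.path.join(os.path.dirname(os.path.abspath(__file__)), "conf.json"), removes the dependence on where cron starts the process.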

Hey Adrian

Great tutorial 🙂 If we don’t use Dropbox (because of the need to re-authenticate), how do we make the script save the image to a folder instead?

I see you do this:

if conf["use_dropbox"]:
    # write the image to temporary file
    t = TempImage()
    cv2.imwrite(t.path, frame)

Could you have an else that sets a path maybe from the conf json and writes the image there?

Cheers

Hey Lucas, as you suggested, I would just have an else statement and then have a configuration that points to the directory where images should be saved. From there, all you need to do is generate the filename and write it to file using cv2.imwrite.

Hi Andrew,

Firstly, I would like to say thanks for a great site. I have spent many hours on it during the last few days getting to grips with image collection and the Raspberry Pi.

What a great resource.

Lucas, I am new to Python (I’ve been developing in PHP for years, off and on, so I am familiar with programming). To answer your question, I modified Andrew’s script so that right after the t.cleanup() in the Dropbox block, I add an else that writes the frame out to a local/network path.

Here is the code:

else:
    path = "{base_path}/{timestamp}.jpg".format(
        base_path=conf["file_base_path"], timestamp=ts)
    # client.put_file(path, open(t.path, "rb"))
    cv2.imwrite(path, frame)

Add file_base_path to the JSON configuration file. Use forward slashes instead of backslashes: Windows accepts forward slashes in paths, and it saves having to escape backslashes in the JSON string.

It’s Adrian, actually 😉 But thanks for sharing your code.

Yeah, I realised that after I had replied to the post, duh 🙂
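A slightly more defensive version of the else branch suggested above builds the file path with os.path.join and creates the target directory if it is missing, since cv2.imwrite does not create directories. snapshot_path is a hypothetical helper. file_base_path is the config key proposed in the comment above, and a temporary directory stands in for it here.

```python
import os
import tempfile


def snapshot_path(base_path, ts, ext=".jpg"):
    """Return <base_path>/<ts><ext>, creating base_path first if it doesn't exist."""
    os.makedirs(base_path, exist_ok=True)
    return os.path.join(base_path, "{}{}".format(ts, ext))


# conf["file_base_path"] would normally supply this directory
base = os.path.join(tempfile.mkdtemp(), "captures")
path = snapshot_path(base, "2015-06-01_14-05-09")
print(path)
```

In the script you would then call cv2.imwrite(path, frame). It typically returns False instead of raising when the write fails, so checking that return value catches permission or path problems early.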

Hey Adrian, I have found my errors. Now it can boot up with a cronjob!

But when motion is detected and it starts to upload to the Dropbox client, it comes up with this error:

Traceback (most recent call last):

File “/home/pi/pi_surveillance.py”, line 138, in

client.put_file(path, open(t.path, "rb"))

IOError: [Errno 13] Permission denied: ‘.//88eec3c0-5b20-406f-9d68-49bd941a7410.jpg’

I was thinking it was probably because the absolute path should be declared, since I am running it as a cronjob.

But I have no idea how to change t.path to an absolute path. I need some enlightenment!

Thanks!

The reason you are getting that error is because your account does not have permission to create a file under that directory. I would suggest reading up on Unix file permissions before you continue. Alternatively, you might be able to get away with modifying the TempImage line to look something like this:

t = TempImage(basePath="/tmp/")

The /tmp directory should be writeable without any file permission changes.

A MILLION THANKS!! YOU ARE REALLY AMAZING!!
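The .//<uuid>.jpg path in the traceback above suggests the temporary file gets a random UUID name under a base path of "./", that is, the current working directory, which under cron may not be writable. Here is a hypothetical TempImage-style helper, not the pyimagesearch implementation, that defaults to the system temporary directory via tempfile.gettempdir() (normally /tmp on the Pi).

```python
import os
import tempfile
import uuid


class TempImageSketch:
    """Hypothetical TempImage-style helper: a unique .jpg path under a writable directory."""

    def __init__(self, basePath=None, ext=".jpg"):
        # Fall back to the system temp dir (normally /tmp), which every user can write to
        base = basePath or tempfile.gettempdir()
        self.path = os.path.join(base, str(uuid.uuid4()) + ext)

    def cleanup(self):
        # Delete the file after it has been uploaded; ignore it if it was never written
        if os.path.exists(self.path):
            os.remove(self.path)


t = TempImageSketch()
with open(t.path, "wb") as fh:  # stands in for cv2.imwrite(t.path, frame)
    fh.write(b"placeholder")
existed = os.path.exists(t.path)
t.cleanup()
print(t.path, existed, os.path.exists(t.path))
```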

pi@raspberrypi ~/pi-home-surveillance $ python pi_surveillance.py --conf conf.json

Traceback (most recent call last):

File “pi_surveillance.py”, line 6, in

from dropbox.client import DropboxOAuth2FlowNoRedirect

ImportError: No module named dropbox.client

Can you please help me sort out this error?

Thanks in advance.

You need to install the dropbox Python package first:

$ pip install dropbox

I’m having the same problem as Bhuvan. I’ve already run pip install dropbox.

Make sure you’re installing it into the same Python virtual environment you’re using for OpenCV as well. For example,

Sir, where can I find the Dropbox path?

Also, my capturing stops as soon as “Occupied” appears on the image, and only one image is stored. What should I do to capture images continuously and store all of the occupied images?

Please help, sir.

The problem is caused by an old version of urllib3. You need to download pip via GitHub and update your urllib3. I faced this problem because my pip could not upgrade urllib3; it said urllib3 was owned by the OS. Anyway, I managed to solve it this way.

Hi Sky,

I am currently facing the same problem. However, I did not completely understand how you solved it. Did you update pip by installing it from GitHub, or did you get urllib3 from GitHub?

Your help would be much appreciated.

Did anyone get a solution to this? I’ve installed everything in the same virtual environment and am still getting an error here. So close, yet so far!!!

Do you still need a solution?

Same here. I’ve run the dropbox install within the virtual environment and done the same with urllib3, but still the same error as Bhuvan. Any ideas on what I could try next?

I would suggest updating to the latest version of the Dropbox library to see if that resolves the issue:

$ pip install --upgrade dropbox

If you are using Python virtual environments, make sure you access them first.

Never mind – was still using old version I downloaded before August 2017. Redownloaded and working well, thanks very much.
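To confirm that pip installed a package into the same environment you run the script from, you can ask the interpreter itself. describe_package is a hypothetical helper. Run it with the same python command you use for pi_surveillance.py (inside workon cv, if that is your setup). If dropbox is importable but from dropbox.client import ... still fails, the package is installed and the likely culprit is the older, pre-August-2017 version of the code, as the last comment found.

```python
import importlib.util
import sys


def describe_package(name):
    """Report the running interpreter and whether top-level package `name` is importable."""
    spec = importlib.util.find_spec(name)
    return {
        "python": sys.executable,  # should point inside your virtualenv if you use one
        "importable": spec is not None,
        "location": spec.origin if spec is not None else None,
    }


print(describe_package("dropbox"))
```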

I was wondering if you are aware of some code that would allow me to check for motion in a specific ROI within a live video? I am not sure what the best way to do it would be.

Thanks

If you want to detect motion within only a specific ROI, there are two ways to do it. The first way is to perform NumPy array slicing to crop the region of the image you want to check for motion; then you apply motion detection to only the cropped image, not the entire image.

Another option is to perform motion tracking on the entire image, and then check the bounding boxes of the contours. If they fall into the (x, y)-coordinates of your ROI, then you know there is motion in your specific region.

Thanks for this advice. I will try that. I’m quite a novice with Python and programming in general, so your website and advice are greatly appreciated!
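The second option above can be sketched in a few lines of plain Python. Given the (x, y, w, h) boxes that cv2.boundingRect produces for each contour, keep the boxes that intersect your ROI. The ROI coordinates, boxes, and helper names are hypothetical. If you only want to count motion that lies entirely inside the region, swap the overlap test for a containment test. For the first option, NumPy slicing of a frame looks like frame[y:y + h, x:x + w], rows first, then columns.

```python
def overlaps(box, roi):
    """True if two (x, y, w, h) rectangles intersect (touching edges don't count)."""
    bx, by, bw, bh = box
    rx, ry, rw, rh = roi
    return bx < rx + rw and rx < bx + bw and by < ry + rh and ry < by + bh


def motion_in_roi(boxes, roi):
    """Return the motion bounding boxes that touch the region of interest."""
    return [box for box in boxes if overlaps(box, roi)]


roi = (100, 50, 200, 150)  # hypothetical ROI covering x=100..300, y=50..200
boxes = [(10, 10, 40, 40), (250, 180, 60, 60), (400, 300, 20, 20)]
print(motion_in_roi(boxes, roi))  # [(250, 180, 60, 60)]
```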

Thank you for tutorial!

Line 2 in pi_surveillance.py gives this error:

no module named pyimagesearch.tempimage

Is there any other package that I have to install?

I installed imutils and dropbox.

I made a folder ‘pyimagesearch’ and created a file ‘tempimage.py’ in it.

Download the source code using the form at the bottom of this page. You will receive a .zip file of the source code that includes the pyimagesearch module.

The zip file I downloaded does not include the full directory structure; it only includes the main pi_surveillance.py and json files, but no pyimagesearch module (so no __init__ or tempimage files). Am I missing something? I keep getting the error for the missing pyimagesearch module. Please help, thanks.

Hi Chris, I just checked the .zip of the download. It does indeed include the conf.json, pi_surveillance.py, and pyimagesearch files and directories. Perhaps you accidentally deleted the directory? I would suggest re-downloading the .zip archive.

Hi Adrian, how many files should there be after extracting the zip file?

Please tell me what needs to be edited in the downloaded code.

It is showing something like a max retry error and a new connection error.

Hey Sahil — this sounds like you have a problem with your internet connection. Please ensure you have a strong connection and retry the download.

Thank you so much for all your hard work! I followed your tutorial and finally finished my own surveillance system, cheers!

Awesome, glad to hear it! 😀

Hi Adrian,

This looks awesome. It seems like I can use it for a pet project.

BTW, do you have any idea how to adapt the code to use webcam, instead of the Raspberry Pi camera module?

I use a Logitech C170 webcam, and your other code:

https://www.pyimagesearch.com/2015/05/25/basic-motion-detection-and-tracking-with-python-and-opencv/

Works nicely.

This one doesn’t, though.

Thank you 🙂

Indeed, this code is meant for the Raspberry Pi camera, which uses the picamera module. The picamera module (obviously) is only compatible with the Raspberry Pi camera.

Luckily, switching it over to use a normal webcam is very simple: check out the sister post to this one here. All it really amounts to is changing some boilerplate code related to cv2.VideoCapture.

Hello Adrian

The tutorials you write are just amazing. Thank you very much.

When I run your code, Adrian, I see that the processor is mostly running at only 25%, and I am getting quite a lag in real time.

Adrian, I am wondering: since I have a B+ model that has 4 cores, is this program running on just one core, or should it be adapted with multiprocessing to run on all 4 cores?

Thank you very much Andre, I’m glad you are enjoying the tutorials!

Which B+ model are you running? The original B+ model had only one core, whereas the Pi 2 has four cores. If I were to make use of multiple cores for this project, I would dedicate a core entirely to the “movement detection”, allowing the frames from the camera to be read in a non-blocking fashion. That would take a considerable amount of hacking on the codebase, but it certainly could be done.

Cool, I will give it a go. I made a mistake, yes I am using the Pi 2.

Good. Thank you.
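Here is a minimal sketch of the non-blocking frame-reading idea Adrian describes. It uses a background thread and a one-slot queue.Queue, so the detection loop always gets the newest frame instead of waiting on the camera. LatestFrameReader and make_counter_source are hypothetical; on the Pi, read_frame would wrap the camera capture. A thread keeps camera I/O from stalling the loop, but it does not by itself spread CPU-heavy detection across cores. For that, you would need multiprocessing.

```python
import queue
import threading
import time


class LatestFrameReader:
    """Read frames on a background thread and keep only the most recent one."""

    def __init__(self, read_frame):
        self.read_frame = read_frame          # zero-arg callable; returns None when done
        self.frames = queue.Queue(maxsize=1)  # one slot: stale frames are discarded
        self.stopped = threading.Event()
        self.thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self.thread.start()
        return self

    def _run(self):
        while not self.stopped.is_set():
            frame = self.read_frame()
            if frame is None:
                break
            try:
                self.frames.get_nowait()      # drop the frame nobody consumed yet
            except queue.Empty:
                pass
            self.frames.put(frame)            # never blocks: this is the only producer

    def read(self, timeout=None):
        """Return the newest frame, waiting up to `timeout` seconds for one."""
        return self.frames.get(timeout=timeout)

    def stop(self):
        self.stopped.set()
        self.thread.join()


def make_counter_source(n):
    """Fake camera that yields 1..n with a small delay, then None."""
    state = {"i": 0}

    def read_frame():
        if state["i"] >= n:
            return None
        state["i"] += 1
        time.sleep(0.001)  # simulate sensor latency
        return state["i"]

    return read_frame


reader = LatestFrameReader(make_counter_source(50)).start()
reader.thread.join(timeout=5)   # let the fake camera run to completion
latest = reader.read(timeout=1)
print(latest)                   # 50: only the newest frame was kept
reader.stop()
```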

Thanks for tutorial.

How do I fix these problems?

1. (cv)pi@raspberrypi ~ $ ~/.profile

-bash: /home/pi/.profile: Permission denied

2. (cv)pi@raspberrypi ~ $ sudo python test_image.py

(Image:3311): Gtk-WARNING **: cannot open display:

Thanks.

1. Are you trying to edit it or reload it? You need to supply a command, such as vi ~/.profile or source ~/.profile.

2. You should enable X11 forwarding when you log in to your Pi:

ssh -X pi@your_ip_address

Gtk-WARNING **: cannot open display:

How can we solve this one? (I tried ssh -X pi@ipaddress, but it did not work.)

Thanks, ok for the first question, but as for your second answer, it didn’t work.

I have Windows 10 and Putty of course.

I’m not a Windows user, so unfortunately I’m not sure how to enable X11 forwarding on Windows and PuTTY. However, there has been some discussion about it over in the comments section of this post, so I would start there.

Hi Adrian, I’m enjoying this project, but need your help diagnosing this error message just after launching:

Traceback (most recent call last):

File “sophiecam.py”, line 105, in

cv2.CHAIN_APPROX_SIMPLE)

ValueError: too many values to unpack

It sounds like you’re using OpenCV 3. This blog post was meant to be used with OpenCV 2.4. But you can change the cv2.findContours line to be:

cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]

And that will make it compatible with both OpenCV 3 and OpenCV 2.4.

EDIT: Below follows a much better method to access contours, regardless of which version of OpenCV you are using:

Thanks Adrian, I’ll try that tonight. So you and others know how I wound up with OpenCV 3, I’m new at this and started following the steps at the top of this tutorial with:

“Let’s go ahead and get the prerequisites out of the way. I am going to assume that you already have a Raspberry Pi and camera board.

You should also already have OpenCV installed on your Raspberry Pi…”

That link led to: “Install OpenCV and Python on your Raspberry Pi 2 and B+” with an UPDATE: “I have just released a brand new tutorial that covers installing OpenCV 3…”

So thinking “newer is better”, I went down that path, but ended in the ValueError message. I’m really glad it’s easy to make it compatible with both OpenCV versions!

That worked: the “Occupied” image uploaded to the Dropbox server! But Dropbox wouldn’t sync with my Windows PC because the date_timestamp.jpg filename had colons. I replaced the colons with dashes in the ts = timestamp formatting command.

Next: WiFi, Static IP, launch from boot, live video stream to phone, SMS alerts
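Here is a minimal sketch of the colon fix just described, assuming the timestamp comes from datetime.strftime. Keep whatever human-readable format you like for the on-frame text, but build filenames from a colon-free format. filename_timestamp is a hypothetical helper, and the year-month-day order is a choice rather than the post's format. A side benefit of this order is that filenames sort chronologically.

```python
import datetime


def filename_timestamp(when):
    """Format `when` for filenames: dashes instead of colons, which Windows rejects."""
    return when.strftime("%Y-%m-%d_%H-%M-%S")


when = datetime.datetime(2015, 6, 1, 14, 5, 9)
print(filename_timestamp(when))  # 2015-06-01_14-05-09
```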

Nice, congrats on getting it to work 🙂

Adrian, I got WiFi and a static IP to work, and I am now wondering how you would approach adding a live video stream to an IP port. I found this link, but I’m not sure about it: http://stackoverflow.com/questions/5825173/pipe-raw-opencv-images-to-ffmpeg

The pipe-to-VLC approach sounds the most reliable.

I want to stick with OpenCV and your planned features. TIA

Hey Tom, I’ll be honest: I have not tried to set up video streaming from the Pi to another system that then reads in the frames and processes them. I’ll look into it and perhaps try to do a post on it in the future.

Hey Tom Kiernan, did you manage to get it to stream to VLC?

Hi Adrian,

thank you for the tutorial!

Using OpenCV 3, I still get the error:

(cv)pi@d3:~/Downloads/pi-home-surveillance $ python pi_surveillance.py --conf conf.json
Traceback (most recent call last):
  File "pi_surveillance.py", line 88, in
    cv2.CHAIN_APPROX_SIMPLE)[-2]
ValueError: need more than 0 values to unpack

What might be wrong?

Kind Regards:

Martin

This blog post was meant to run with OpenCV 2.4, hence the error message. Please see my reply to Tom Kiernan above to fix the error. Additionally, be sure to read this post.

Hi Adrian,

you fixed it! Thank you.

This worked:

(_, cnts, _) = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

This did not work:

cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]

Kind regards:

Martin

Were you getting an error for the second one? Because the code does the exact same thing, only with list slicing.

Adrian, same thing for me. Your suggestion for Tom did not work but Martin’s code did. Everything else is 100% your code.

btw, really really nice work on this and every other post I’ve seen of yours.

The OpenCV 3 documentation lists the following for findContours():

Python: cv2.findContours(image, mode, method[, contours[, hierarchy[, offset]]]) → image, contours, hierarchy

Why did you add the [-2]?

In an experiment to make it compatible with OpenCV 2.4 + OpenCV 3, which logically did not work out 🙂 This is how I suggest grabbing contours irrespective of your OpenCV version:

That works for my opencv 3 install. Thanks!

No problem, happy to help!
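The version differences discussed in this thread come down to the shape of the value cv2.findContours returns. OpenCV 2.4 (and, later, 4.x) returns (contours, hierarchy), while OpenCV 3.x returns (image, contours, hierarchy). The sketch below picks the contour list out of either shape. contours_from is a hypothetical helper name, and fake tuples stand in for real OpenCV output so it runs without OpenCV installed. In the script, you would write cnts = contours_from(cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)).

```python
def contours_from(find_contours_result):
    """Pick the contour list out of cv2.findContours' return tuple.

    OpenCV 2.4 and 4.x return (contours, hierarchy);
    OpenCV 3.x returns (image, contours, hierarchy).
    """
    if len(find_contours_result) == 2:
        return find_contours_result[0]
    if len(find_contours_result) == 3:
        return find_contours_result[1]
    raise ValueError("unexpected findContours return value of length {}".format(
        len(find_contours_result)))


print(contours_from((["c1", "c2"], "hierarchy")))       # ['c1', 'c2']
print(contours_from(("image", ["c1"], "hierarchy")))    # ['c1']
```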

Hi Adrian,

The code above is throwing the following error:

Traceback (most recent call last):

File “pi_surveillance.py”, line 90, in

cnts = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

NameError: name ‘edged’ is not defined

When I change it to the first suggestion you had on how to make the code compatible with OpenCV 3 which is as follows, it works!

cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]

Any ideas why I’m having this trouble? Since I have a working solution this question is more academic than anything.

Thanks much for all your support. Regards,

Kerem

Hi Kerem:

My original comment was incorrect. I actually suggest the following method for making the cv2.findContours return tuple compatible with both OpenCV 2.4 and OpenCV 3:

You can read more about the change to cv2.findContours between OpenCV versions in this blog post.

I’ve just tested this with OpenCV 3 and Python 3.4.2.

in addition the print function has been changes

Other than the print function, the cv2.findContours change mentioned above should be the only change needed to run the code with OpenCV 3 and Python 3.

This is just what I have been waiting for. Any ideas on how to make a trigger that starts recording on an event? I only want to record when there is a burglar, not when I am at home. I have been thinking of using a microphone triggered by my alarm system (reading the noise the siren produces) to start taking pictures.

Recording only when a specific event happens would be straightforward. Just modify the if statement on Line 107 to be:

if text == "Occupied" and YOUR_EVENT:

where you can define whatever criteria you want for the event to trigger.

Hello again, I have now found out how to implement a microphone. Thank you to FabLab RUC. The microphone I have ordered is a "Microphone Sensor High Sensitivity Sound Detection Module For Arduino AVR PIC". I will keep you posted.
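A siren produces bursts of noise, so a sound module’s output will likely only be high for brief moments. One way to turn that into the YOUR_EVENT condition is to latch the trigger for a fixed window after the last detection. This is a hypothetical sketch: EventLatch, the 60-second window, and however you read the sensor (for example a GPIO pin wired to the module’s digital output) are assumptions, not part of the original script.

```python
import datetime


class EventLatch:
    """Keeps an event 'active' for a fixed window after the last trigger (hypothetical helper)."""

    def __init__(self, hold_seconds=60.0):
        self.hold = datetime.timedelta(seconds=hold_seconds)
        self.last_triggered = None

    def trigger(self, now):
        # Call this whenever the sensor (e.g. a sound module) reports activity
        self.last_triggered = now

    def active(self, now):
        # True while we are still inside the hold window after the last trigger
        return self.last_triggered is not None and now - self.last_triggered <= self.hold


# Example: one sensor pulse at 12:00:00 keeps the event active for a minute
latch = EventLatch(hold_seconds=60)
t0 = datetime.datetime(2015, 6, 1, 12, 0, 0)
latch.trigger(t0)
print(latch.active(t0 + datetime.timedelta(seconds=30)))  # True
print(latch.active(t0 + datetime.timedelta(seconds=90)))  # False
```

Assuming the loop keeps the current time in a variable such as timestamp, the gate would become if text == "Occupied" and latch.active(timestamp):, with latch.trigger(timestamp) called whenever the sensor fires.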

This is truly awesome! I got this working tonight! A logical extension of this would be to add scheduling constraints in conf.json (so that the capture is only performed on certain days, during certain times). For example, I want to keep surveillance on jewelry in a bedroom, but not when my wife is getting dressed (which usually happens around 6AM on weekdays, and 7AM on weekends).

I am going to investigate launching this script via cron and killing it off somehow. Not sure how this will work since it is running in a virtual python environment.

Thanks for writing!

Andrew

Great suggestion Andrew!
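As an alternative to starting and killing the script from cron, the main loop could check a schedule itself before uploading. A sketch, assuming two hypothetical conf.json keys that do not exist in the original configuration: active_days (weekday numbers with Monday = 0, matching datetime.weekday()) and active_hours (a [start, end) pair of hours).

```python
import datetime
import json

# Hypothetical additions to conf.json; not part of the original configuration
conf = json.loads("""
{
    "active_days": [0, 1, 2, 3, 4],
    "active_hours": [8, 17]
}
""")


def surveillance_active(now, conf):
    """Return True if `now` falls inside the configured days and hours."""
    start_hour, end_hour = conf["active_hours"]
    return now.weekday() in conf["active_days"] and start_hour <= now.hour < end_hour


# Monday 1 June 2015 at 10:30 is inside the window; 18:00 and Saturday are not
print(surveillance_active(datetime.datetime(2015, 6, 1, 10, 30), conf))  # True
print(surveillance_active(datetime.datetime(2015, 6, 1, 18, 0), conf))   # False
print(surveillance_active(datetime.datetime(2015, 6, 6, 10, 30), conf))  # False
```

Keeping the schedule in conf.json means the times can be changed without editing cron or the script; an exclusion window (like the early-morning case above) could be added the same way.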

Here is my solution for starting the script at 8AM on weekdays only, and then killing it after 9 hours (approximately 5PM). Also, I used Scott’s method of storing the Dropbox auth code in conf.json.

The key is the use of the timeout command, which will kill the process after x hours:

Here is my /etc/crontab:

Thanks for sharing Andrew!

Adrian –

Your work is great! Thanks for providing all of the basics and guidance.

I’m developing an animatronic scarecrow for Halloween. I will be using the motion detection to trigger a sequence of other effects running on another Pi. I would like to be able to count the number of people in the frame to alter the behavior of the scarecrow. Exact numbers aren’t needed. I also want to track the direction of the motion so that I can move the scarecrow’s head to follow the “primary” object moving in the frame.

I’m thinking that it will be best to keep the camera fixed so we don’t introduce additional variables into the motion detection routines. What are your thoughts on how to estimate the object’s distance from the camera? I would need that in order to triangulate its position in the frame to calculate the pan and tilt for the head.

I’d love your insight and any advice you have.

Hey Cameron — I love the idea of using the Pi for Halloween. Here are some tips to point you in the right direction:

1. Use len(cnts) to get an approximate number of regions detected containing motion. These may or may not be people, but it will be a good estimate.

2. As for determining the primary direction of movement, take a look at this post.

3. Calculating the distance to an object is also straightforward.
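A rough sketch of points 1 and 3, working on bounding boxes in the (x, y, w, h) form that cv2.boundingRect returns. All function names and numbers are illustrative assumptions: the min_area filter mirrors the idea of the min_area setting in conf.json, the pan angle assumes a linear mapping across a 62.2-degree horizontal field of view (roughly the Pi Camera v2 figure; check your own module), and the distance estimate uses triangle similarity, F = (P x D) / W, calibrated once from an object of known width W at a known distance D that appears P pixels wide.

```python
def count_motion_regions(boxes, min_area):
    """Count (x, y, w, h) boxes whose area is at least min_area pixels."""
    return sum(1 for (_, _, w, h) in boxes if w * h >= min_area)


def primary_box(boxes):
    """Treat the largest box as the 'primary' moving object; None if there are no boxes."""
    return max(boxes, key=lambda box: box[2] * box[3], default=None)


def pan_angle(box, frame_width, hfov_degrees=62.2):
    """Rough horizontal angle (degrees) from the optical axis to the box center.

    Negative means left of center. Assumes the angle varies linearly across the
    frame, which is only an approximation (worst near the edges).
    """
    x, _, w, _ = box
    center_x = x + w / 2.0
    offset = (center_x - frame_width / 2.0) / frame_width  # -0.5 .. 0.5
    return offset * hfov_degrees


def focal_length_px(known_width, known_distance, pixel_width):
    """Triangle similarity calibration: F = (P * D) / W."""
    return pixel_width * known_distance / known_width


def distance_to_object(known_width, focal_length, pixel_width):
    """Triangle similarity estimate: D = (W * F) / P, in the units of known_width."""
    return known_width * focal_length / pixel_width


boxes = [(10, 20, 40, 80), (300, 50, 120, 300), (5, 5, 10, 10)]
print(count_motion_regions(boxes, min_area=2000))  # 2

target = primary_box(boxes)
print(target)  # (300, 50, 120, 300)
print(round(pan_angle(target, frame_width=500), 2))  # 13.68

# Calibrate once: a 0.5 m wide object 2.0 m away appears 150 px wide
focal = focal_length_px(known_width=0.5, known_distance=2.0, pixel_width=150)
print(distance_to_object(0.5, focal, pixel_width=target[2]))  # 2.5
```

Motion-mask bounding boxes are noisy, so the box width is only a loose proxy for the object’s true width; smoothing the angle over a few frames would likely make the head movement less jittery.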

Hi, Adrian,

I would like to know if it is possible to write video, not pictures, to Dropbox (or somewhere else).

For example: start recording when the state is occupied and check every minute whether the state is still occupied; if so, keep recording, otherwise stop recording.

Thanks

You can certainly upload any arbitrary data type to Dropbox, it is not specific to pictures. I don’t have a tutorial related to saving actual video streams to file, but that’s something I can cover on the PyImageSearch blog in the future.

Did you do this? I’m looking like crazy for a video streaming and recording solution, but no one seems to have found one.

I covered how to write video clips to disk here.

Is there an OpenCV function that allows me to look exclusively at one specific part of the camera’s video? For example, I want one Python script to look at the left half of the screen and another to look at the right half. I checked cv2.rectangle, but it just draws a rectangle instead of cropping. Thanks

You bet, all you need is to use simple NumPy array slicing. See the “cropping” section towards the bottom of this post.
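A minimal sketch of that slicing, assuming a frame shaped (height, width, 3) as OpenCV returns it: the first index selects rows (y) and the second selects columns (x). The zero-filled array is a stand-in for a real camera frame.

```python
import numpy as np

# Stand-in for a camera frame: 480 rows x 640 columns x 3 channels (BGR)
frame = np.zeros((480, 640, 3), dtype="uint8")

h, w = frame.shape[:2]
left_half = frame[:, : w // 2]   # all rows, columns 0 .. 319
right_half = frame[:, w // 2 :]  # all rows, columns 320 .. 639

print(left_half.shape, right_half.shape)  # (480, 320, 3) (480, 320, 3)
```

The slices are views into the same array, so call .copy() if either half will be modified independently. Running one script that reads the camera and processes both halves is usually simpler than two scripts competing for the same camera.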

Hello Adrian, I’m new to the Raspberry Pi and Python. Based on what I’m reading, the idea behind drawing a box around the moving object is: first we capture the frame, then apply background subtraction and the following steps, up to finding the contours and drawing a box around them, right?

What if I want to build this kind of system:

Basically, I want to integrate it with a PIR sensor.

First, when movement is detected by the PIR (a human body in this case), my Pi will capture one image and then capture 10 seconds of video. After that, the image and video will be sent to a specific email address as an intruder or motion notification. If I want to do the subtraction and draw the contours within the video, is that possible? Or is it only possible if we capture it frame by frame? Thanks

Your intuition is correct — background subtraction must be performed first before we can find contours and draw a bounding box.
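For reference, the background subtraction step can be sketched in plain NumPy. This is an illustration, not the script’s exact code: it keeps a running average with the same update rule that cv2.accumulateWeighted applies (avg = (1 - alpha) * avg + alpha * frame), then marks pixels whose absolute difference from that average exceeds delta_thresh, like a binary threshold. The tiny synthetic frames are stand-ins for real grayscale camera frames.

```python
import numpy as np


def update_background(avg, gray, alpha=0.5):
    """Running average, same rule as cv2.accumulateWeighted: avg = (1 - alpha) * avg + alpha * gray."""
    return (1.0 - alpha) * avg + alpha * gray.astype("float64")


def motion_mask(avg, gray, delta_thresh=5):
    """Binary mask (0/255) of pixels differing from the background by more than delta_thresh."""
    delta = np.abs(gray.astype("float64") - avg)
    return np.where(delta > delta_thresh, 255, 0).astype("uint8")


# Two synthetic 4x4 grayscale frames: a static scene, then a bright "object" appears
background = np.full((4, 4), 100, dtype="uint8")
frame = background.copy()
frame[1:3, 1:3] = 200

avg = background.astype("float64")
mask = motion_mask(avg, frame, delta_thresh=5)
print(mask)
avg = update_background(avg, frame, alpha=0.5)  # background slowly absorbs the change
print(avg[1, 1])  # 150.0
```

The contours and bounding boxes then come from running cv2.findContours on a mask like this one; the same steps apply whether the frames come from a live stream or from a recorded video read frame by frame.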

Your project is also 100% possible. I simply saved a single frame to disk, which was then uploaded to Dropbox. You could also save a video file by writing each frame to the file; that’s absolutely possible. I don’t have any tutorials on writing frames to video files, but I’ll be sure to do one in the future.

Do we use the VideoWriter class in OpenCV? So basically, I change the part that uploads the file to Dropbox so that it writes frames to a video file using VideoWriter?

Yep, that is correct!

If you are not busy, could you give me a snippet that takes each frame while looping and writes it to the video? I’m trying every approach possible, but I’m kind of stuck (Python newbie here, hehe).

As I said, I don’t have any code ready for that right now — I’ll be sure to do a tutorial on video writing in the future.
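In the meantime, here is a hypothetical sketch (not code from any PyImageSearch tutorial) of writing frames while motion is present. ClipRecorder, make_video_writer, the MJPG codec, and the 10-second idle window are all assumptions. A clip starts on the first "Occupied" frame, every following frame is written, and the clip is closed once no motion has been seen for idle_seconds. The OpenCV import sits inside make_video_writer so the recording logic can be tested without OpenCV.

```python
import datetime


def make_video_writer(path, fps, frame_size):
    """Open an OpenCV VideoWriter; frame_size is (width, height) and must match every frame."""
    import cv2  # imported here so the recorder logic below can be exercised without OpenCV

    fourcc = cv2.VideoWriter_fourcc(*"MJPG")  # OpenCV 3+; OpenCV 2.4 uses cv2.cv.CV_FOURCC
    return cv2.VideoWriter(path, fourcc, fps, frame_size)


class ClipRecorder:
    """Start a clip when motion is seen; stop once no motion has been seen for idle_seconds."""

    def __init__(self, writer_factory, idle_seconds=10.0):
        self.writer_factory = writer_factory  # called with the start time, returns a writer
        self.idle = datetime.timedelta(seconds=idle_seconds)
        self.writer = None
        self.last_motion = None

    def update(self, frame, occupied, now):
        """Call once per frame from the main loop."""
        if occupied:
            self.last_motion = now
            if self.writer is None:
                self.writer = self.writer_factory(now)
        if self.writer is None:
            return
        if now - self.last_motion > self.idle:
            # No motion for long enough: finish this clip
            self.close()
        else:
            self.writer.write(frame)

    def close(self):
        """Release the current clip, if any (also call this when the script exits)."""
        if self.writer is not None:
            self.writer.release()
            self.writer = None
```

With a 640x480 camera at 10 FPS, the recorder might be created as ClipRecorder(lambda ts: make_video_writer(ts.strftime("%Y%m%d-%H%M%S") + ".avi", 10, (640, 480))) and fed recorder.update(frame, text == "Occupied", timestamp) on every loop iteration. If the frame size passed to VideoWriter does not match the frames, the output file typically ends up empty, so that is the first thing to check.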

Hello Adrian,

Your post has been very useful. Would it be possible to get a link to purchase the components required for this project? That way I won’t buy an incompatible camera module. I am new to the Raspberry Pi, and I would be very grateful if you could help me with that (via email).

Hey Adams — I actually provide links to the Pi and camera modules I used inside this post.

Instead of motion, you can also use Kerberos.io; it’s also open source and a lot more user-friendly to install and configure. You can find more information on the website.

Thank you for this very good tutorial,

How can I record audio from the area under video surveillance?

Best regards

Please see my reply to Alain above. I’ll be covering how to write clips to video files in a future PyImageSearch blog post.

I am new to OpenCV; thanks for your tutorial.

I followed your steps to bring it up on a Model B. It works perfectly.

I look forward to your future OpenCV applications.

Fantastic, I’m happy to hear it worked for you! 😀

Hi, master!!

I want to turn on a red LED (using the GPIO) when motion is detected. How can I do that?

I’m a noob!

Cheers!

disable_camera_led=1 in /boot/config.txt

That wasn’t my question. I want to connect a LED using the GPIO, like this https://projects.drogon.net/wp-content/uploads/2012/06/1led_bb1.jpg

Hi Diocletian, have you figured it out? I have the same issue.
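A minimal sketch using the gpiozero library, assuming an LED (with a current-limiting resistor) wired to BCM pin 17; the pin number and the make_led/show_motion helpers are hypothetical. The gpiozero import sits inside make_led so the on/off logic can be tested on a machine without GPIO.

```python
def make_led(pin=17):
    """Create a gpiozero LED on a BCM pin number (pin 17 is an arbitrary example)."""
    from gpiozero import LED  # only available on the Pi; imported lazily

    return LED(pin)


def show_motion(led, occupied):
    """Light the LED while the room is 'Occupied', switch it off otherwise."""
    if occupied:
        led.on()
    else:
        led.off()
```

In the main loop, calling show_motion(led, text == "Occupied") right after the motion status is computed would keep the LED in sync with the "Occupied"/"Unoccupied" text drawn on the frame.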

Is there any way to move this processing onto the GPU?

Unfortunately not for this particular algorithm (or for the Pi in particular). But you can compile OpenCV itself with OpenCL/CUDA support on your laptop or desktop and leverage the GPU there.

Thank you very much Adrian! This is really an awesome and comprehensive tutorial. You explained all the details of the techniques and how to improve them, which I enjoyed most. I finished reading your book, which is very practical, and got this home surveillance project done.

Nice work Fang! I’m glad the book and tutorials were helpful 🙂

I have a problem when I run python pi_surveillance.py --conf conf.json:

  File "pi_surveillance.py", line 34
    print "[INFO] Authorize this application: {}".format(flow.start())
          ^
SyntaxError: invalid syntax

I am using the code I downloaded via email from the download section.

It looks like you are using Python 3. The code for this post was intended for Python 2.7, not Python 3. That said, you can make the code compatible by doing a few things, namely changing the print statement to a print function:

print("[INFO] Authorize this application: {}".format(flow.start()))

You’ll need to do this for every print statement in the code.

How can I use this without Dropbox? I’m a newbie to Python and OpenCV.

Just simply comment out any code related to Dropbox.

Hi Adrian,

Thanks for posting this project. I put it together and enjoyed the process of doing so. The instructions are detailed and well written.

My one small suggestion would be to make the code compatible with OpenCV 3.0.0. The modifications are in the Q&A, but having them in the main body of the post would be useful.

I look forward to your blog where we have a choice of recording video snippets rather than just pictures.

Thanks for the feedback Sidd. I’m still trying to figure out how to handle the OpenCV 2.4 => OpenCV 3 conversion. As my blog post coming out on Monday will explain, the vast majority of users are still using OpenCV 2.4. It makes it a bit challenging to support both versions.

Does this project support any alarm system, so that it can notify the owner when an intrusion is detected, before uploading the photo to Dropbox?

You could certainly update the code to trigger an alarm.

Hey Adrian, I’d like to know if it would be possible to find the number of contours programmatically.

I tried many ways to implement that, but I couldn’t get a precise output.

I just need to get the numbers of contours that are being displayed, for example, if 2 people walk apart from each other, it displays 2 contours, but when I try to display that, I get a much higher number using len(cnts)

In this particular case, using the number of contours detected isn’t the best method to “count” the number of moving people in the image. If you take a look at the thresholded image from the motion detection step you’ll notice that many parts of the person are actually disconnected. You could try using morphological operations to close these gaps, having only a single “blob” per person. Otherwise, you might want to look into training custom object detectors or depending on your case, use the people detector supplied with OpenCV.

Many thanks for this and related projects.

I eventually managed to get this working. I applied the modification

cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]

that you mention above to get it to work with OpenCV 3. I also changed the colons to dashes in the ts = timestamp formatting command (also mentioned above) to make it work with Windows. And I rotated the image 180 degrees before saving it to Dropbox, as my camera is upside down, using

frame = imutils.rotate(frame, angle=180)

at line 103.
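Both of those tweaks can be sketched briefly. Windows does not allow colons in file names, so a timestamp that ends up in a file path needs another separator; the format string below is an illustrative assumption, not the script’s original one. A rotation of exactly 180 degrees is also just a flip of both axes, so it can be done with NumPy slicing (or cv2.flip(frame, -1)); the small array is a stand-in for a camera frame.

```python
import datetime

import numpy as np

timestamp = datetime.datetime(2015, 6, 1, 14, 5, 9)

# Windows rejects ":" in file names, so use "-" between the time fields
ts = timestamp.strftime("%Y-%m-%d_%H-%M-%S")
filename = "{}.jpg".format(ts)
print(filename)  # 2015-06-01_14-05-09.jpg

# An exact 180-degree rotation is a flip of both axes: reverse rows, then columns.
# ascontiguousarray makes a normal copy, since some OpenCV functions reject
# negatively strided views.
frame = np.arange(6, dtype="uint8").reshape(2, 3)  # stand-in for a camera frame
rotated = np.ascontiguousarray(frame[::-1, ::-1])
print(rotated.tolist())  # [[5, 4, 3], [2, 1, 0]]
```

If the frames come from the picamera module, setting camera.rotation = 180 on the camera object may avoid the per-frame rotation entirely.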

I am currently experimenting with capturing more images of the motion. Has anyone had good results without overloading the Pi? My config file currently uses:

"min_upload_seconds": 0.5,
"min_motion_frames": 1,
"camera_warmup_time": 2.5,
"delta_thresh": 5,
"resolution": [640, 480],
"fps": 8,
"min_area": 2000
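The min_upload_seconds and min_motion_frames settings interact: a frame is uploaded only after enough consecutive "Occupied" frames, and only once enough time has passed since the last upload. The UploadGate class below is an illustrative sketch of that kind of gate, not the script’s actual code. One detail worth checking in your own copy: timedelta.seconds is an integer (the seconds component only), so comparing it against 0.5 behaves like a one-second minimum, while total_seconds() keeps the fraction.

```python
import datetime


class UploadGate:
    """Decide when an 'Occupied' frame should be uploaded (illustrative sketch)."""

    def __init__(self, min_upload_seconds, min_motion_frames):
        self.min_upload_seconds = min_upload_seconds
        self.min_motion_frames = min_motion_frames
        self.last_uploaded = None
        self.motion_counter = 0

    def should_upload(self, occupied, now):
        if not occupied:
            # Motion stopped: require a fresh run of consecutive motion frames
            self.motion_counter = 0
            return False
        if self.last_uploaded is not None:
            elapsed = (now - self.last_uploaded).total_seconds()  # keeps fractions of a second
            if elapsed < self.min_upload_seconds:
                return False
        self.motion_counter += 1
        if self.motion_counter >= self.min_motion_frames:
            self.last_uploaded = now
            self.motion_counter = 0
            return True
        return False


t0 = datetime.datetime(2015, 6, 1, 12, 0, 0)
step = datetime.timedelta(milliseconds=125)  # 8 fps, as in the config above
gate = UploadGate(min_upload_seconds=0.5, min_motion_frames=1)
uploads = [gate.should_upload(True, t0 + i * step) for i in range(8)]
print(uploads.count(True))  # 2
print((4 * step).seconds)  # 0, because .seconds drops the 0.5 s fraction
```

With these settings and continuous motion, this gate would allow up to two uploads per second, so network speed is likely to become the bottleneck before the Pi’s CPU does.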

Hi Adrian, I have used your code to build a motion detection system, and I added some GPIO controls. However, the GPIO control must run with sudo, and your code cannot run with sudo. Could you please help me solve this?

The easiest way to solve this is to create a virtual environment for the root user:

And then sym-link OpenCV into your root virtual environment. Then just make sure you run your GPIO script as root and everything will work.

What do you mean?