I said it in last week’s blog post and I’ll say it again here today — I am not, by any stretch of the imagination, a GUI developer.

I think my aversion to GUI development started back in early high school when I was teaching myself Java; specifically, how to write Java applets (remember what god damn nightmares applets were?) utilizing javax and Swing.

It was a dark time in my life.

I was sick often and missed a lot of school.

There were a lot of family problems going on.

And I had yet to mature from an adolescent into an adult, stuck in the throes of puberty — an awkward teenage boy, drunk on apathy, while simultaneously lacking any direction or purpose.

At that point in my (early) programming career, I turned to Java and GUI development in a last-ditch effort to escape, much like a heroin addict turns to a spoon and a needle for a few minutes of relief, only for the world to come crashing back down once the initial high wears off.

You see, I found developing GUI applications fun. It was addictive. And it was quite the departure from the all-too-familiar command line interfaces.

But that “fun” came at a price.

Lengthy code blocks to accomplish even the simplest tasks. Strange compile errors. And all-nighters spent trying to resolve race conditions, callback hell, and threading nightmares that not even an experienced seamstress could untangle.

Since then, I’ve always (mentally) correlated GUI development with painful, trying times. It’s a door that I honestly haven’t opened since…until now.

In this blog post, I’m going to confront my troubled past and write a bit of code to display a video feed with OpenCV and Tkinter. Ultimately, this GUI application will allow us to create a “Photo Booth” of sorts, letting us save frames from our video stream to disk at the click of a button.

As you’ll find out, I kinda-sorta failed, but I wanted to share my experience with you — hopefully more experienced GUI developers can help point me in the right direction.

Displaying a video feed with OpenCV and Tkinter

I’m going to go ahead and assume that you have already read last week’s blog post on using OpenCV with Tkinter. In that tutorial, I detailed what Tkinter is, how to install it, and how to write a simple Tkinter GUI application that can display images loaded via OpenCV.

Today we are going to build on the knowledge gained from last week’s post, as well as incorporate some special techniques discussed in earlier blog posts — specifically, how to access video streams in an efficient, threaded manner.

Note: I think my desire to utilize threaded streams is what ultimately caused problems with this application. From what I’ve read in other sources, Tkinter doesn’t play nice with threads.

Mocking up the Photo Booth App

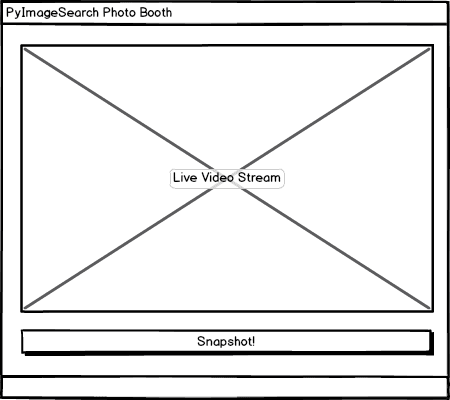

As we did in last week’s post, let’s start by creating a mockup of our application. Below you can see the main screen of our GUI application:

This screen has two elements. The first, at the bottom, is our Snapshot! button. Every time this button is clicked, the current frame read from the video stream will be stored on disk.

The second element, placed directly above the first, is a live display of the video stream itself.

Our goal is to write Python + OpenCV + Tkinter code to continuously poll frames from our video stream, update the live display, and then handle writing the current frame to file when the snapshot button is clicked.

Creating the Photo Booth App

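Now that we’ve created the mockup of our project, let’s go ahead and get started coding the GUI. Open up a new file, name it photoboothapp.py, and save it inside a pyimagesearch package directory (the driver script we build later imports PhotoBoothApp from pyimagesearch.photoboothapp). Then insert the following code: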

# import the necessary packages
from __future__ import print_function
from PIL import Image
from PIL import ImageTk
import tkinter as tki  # Python 3; on Python 2.7 this module is named Tkinter
import threading
import datetime
import imutils
import cv2
import os

Lines 2-10 handle importing our required Python packages. We need PIL for the Image class, which is what the ImageTk and Label classes require in order to display an image/frame in a Tkinter GUI.

We’ll also need Python’s threading package to spawn a thread (separate from Tkinter’s mainloop), used to handle polling of new frames from our video stream.

The datetime module will be used to construct a human-readable timestamp filename for each frame that we save to disk.

Lastly, we’ll need imutils, my collection of convenience functions used to make working with OpenCV easier. If you don’t already have imutils installed on your system, let pip install the package for you:

$ pip install imutils

Let’s move on to the definition of our PhotoBoothApp class:

class PhotoBoothApp:
    def __init__(self, vs, outputPath):
        # store the video stream object and output path, then initialize
        # the most recently read frame, thread for reading frames, and
        # the thread stop event
        self.vs = vs
        self.outputPath = outputPath
        self.frame = None
        self.thread = None
        self.stopEvent = None

        # initialize the root window and image panel
        self.root = tki.Tk()
        self.panel = None

Line 13 defines the constructor to our PhotoBoothApp class. This constructor requires two arguments: vs, an instance of VideoStream, and outputPath, the path to where we want to store our captured snapshots.

Lines 17 and 18 store our video stream object and output path, while Lines 19-21 initialize the most recently read frame, the thread used to control our video polling loop, and stopEvent, a threading.Event object used to indicate when the frame polling thread should be stopped.

We then initialize the root Tkinter window and the panel used to display our frame in the GUI (Lines 24 and 25).

We continue the definition of our constructor below:

        # create a button, that when pressed, will take the current
        # frame and save it to file
        btn = tki.Button(self.root, text="Snapshot!",
            command=self.takeSnapshot)
        btn.pack(side="bottom", fill="both", expand="yes", padx=10,
            pady=10)

        # start a thread that constantly polls the video sensor for
        # the most recently read frame
        self.stopEvent = threading.Event()
        self.thread = threading.Thread(target=self.videoLoop, args=())
        self.thread.start()

        # set the window title and a callback to handle when the window is closed
        self.root.wm_title("PyImageSearch PhotoBooth")
        self.root.wm_protocol("WM_DELETE_WINDOW", self.onClose)

Lines 29-32 create our Snapshot! button; when clicked, it calls the takeSnapshot method, which we’ll define later in this example.

In order to continuously poll frames from our video stream and update the panel in our GUI, we need to spawn a separate thread that will be used to monitor our video sensor and grab the most recently read frame (Lines 36-38).

Finally, we set a callback to handle when our window is closed so we can perform cleanup operations and (ideally) stop the video polling thread and release any resources (unfortunately, this didn’t work as I intended it to in practice).

Next up, let’s define the videoLoop method, which, as the name suggests, monitors our video stream for new frames:

    def videoLoop(self):
        # DISCLAIMER:
        # I'm not a GUI developer, nor do I even pretend to be. This
        # try/except statement is a pretty ugly hack to get around
        # a RuntimeError that Tkinter throws due to threading
        try:
            # keep looping over frames until we are instructed to stop
            while not self.stopEvent.is_set():
                # grab the frame from the video stream and resize it to
                # have a width of 300 pixels
                self.frame = self.vs.read()
                self.frame = imutils.resize(self.frame, width=300)

                # OpenCV represents images in BGR order; however PIL
                # represents images in RGB order, so we need to swap
                # the channels, then convert to PIL and ImageTk format
                image = cv2.cvtColor(self.frame, cv2.COLOR_BGR2RGB)
                image = Image.fromarray(image)
                image = ImageTk.PhotoImage(image)

                # if the panel is None, we need to initialize it
                if self.panel is None:
                    self.panel = tki.Label(image=image)
                    self.panel.image = image
                    self.panel.pack(side="left", padx=10, pady=10)

                # otherwise, simply update the panel
                else:
                    self.panel.configure(image=image)
                    self.panel.image = image

        except RuntimeError as e:
            print("[INFO] caught a RuntimeError")

As I said at the top of this blog post, I’m not a GUI developer and I have very little experience with Tkinter. In order to get around a RuntimeError that Tkinter was throwing (likely due to threading), I resorted to a really ugly try/except hack to catch it. I tried to resolve the bug, but after a few hours of not getting anywhere, I eventually threw in the towel and resorted to this hack.

Line 51 starts a loop that will be used to read frames from our video sensor. This loop will continue until the stopEvent is set, indicating that the thread should exit.
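The stop-flag pattern is easier to see without Tkinter or a camera in the way. The sketch below is a minimal illustration under stated assumptions: `FakeStream` and `poll_frames` are hypothetical names, and the "frames" are just integers. A worker thread keeps overwriting the latest frame until a threading.Event is set, at which point the loop condition fails and the thread function returns.

```python
import threading
import time


class FakeStream:
    """Hypothetical stand-in for a VideoStream: read() returns an increasing counter."""

    def __init__(self):
        self.count = 0

    def read(self):
        self.count += 1
        return self.count


def poll_frames(stream, state, stop_event):
    # keep grabbing the most recent "frame" until we are told to stop,
    # mirroring the while-loop in videoLoop (minus the Tkinter calls)
    while not stop_event.is_set():
        state["frame"] = stream.read()
        time.sleep(0.001)  # simulate per-frame latency


stream = FakeStream()
state = {"frame": None}
stop_event = threading.Event()
worker = threading.Thread(target=poll_frames, args=(stream, state, stop_event))
worker.start()

time.sleep(0.05)          # let the worker read a few frames
stop_event.set()          # ask the loop to exit
worker.join(timeout=1.0)  # wait for the thread function to return
print(worker.is_alive(), state["frame"])
```

Because the worker only checks the event between iterations, it finishes the frame it is currently reading before exiting.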

Lines 54 and 55 read the frame from our video stream and resize it using the imutils library.
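Resizing to a fixed width only looks right if the height is scaled by the same ratio; otherwise the frame gets stretched. The hypothetical `resized_dims` helper below shows that aspect-ratio arithmetic without calling OpenCV, assuming (as `imutils.resize` does when only `width` is given) that the scale factor is the target width divided by the current width.

```python
def resized_dims(width, height, target_width=300):
    """Return (new_width, new_height) that preserves the aspect ratio.

    Hypothetical helper: mirrors the width-only case of a resize,
    where the scale factor is target_width / width.
    """
    ratio = target_width / float(width)
    return target_width, int(height * ratio)


print(resized_dims(640, 480))   # (300, 225), a typical 4:3 webcam frame
print(resized_dims(1280, 720))  # (300, 168), a 16:9 frame
```

Note that OpenCV's `cv2.resize` expects the target size as `(width, height)`, the reverse of a NumPy array's `(rows, cols)` shape.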

We now need to perform a bit of formatting on our image. To start, OpenCV represents images in BGR order; however, PIL expects images to be stored in RGB order. To resolve this, we need to swap the channels by calling cv2.cvtColor. From there, we convert the frame to PIL/Pillow format, followed by ImageTk format. The ImageTk format is required when displaying images in a Tkinter window.
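The channel swap itself is nothing more than reversing the order of the three values in every pixel. As a dependency-free sketch (the nested-list "image" and the `bgr_to_rgb` name are illustrative, not part of OpenCV's API), it looks like this:

```python
def bgr_to_rgb(image):
    """Reverse the channel order of every pixel in a rows x cols x 3 nested list."""
    return [[(r, g, b) for (b, g, r) in row] for row in image]


# a 1x2 image in BGR order: one pure-blue pixel, one pure-red pixel
bgr = [[(255, 0, 0), (0, 0, 255)]]
rgb = bgr_to_rgb(bgr)
print(rgb)  # [[(0, 0, 255), (255, 0, 0)]]
```

With NumPy arrays, `frame[:, :, ::-1]` performs the same reversal as a view, while `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)` returns a new array.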

If our panel is not initialized, Lines 65-68 handle instantiating it by creating the Label. We take special care on Line 67 to store a reference to the image, ensuring that Python’s garbage collection routines do not reclaim the image before it is displayed on our screen.
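The extra `self.panel.image = image` matters because a Tkinter Label does not hold its own Python reference to the PhotoImage it displays, so once the local variable goes away the image can be reclaimed and the label shows up blank. The effect can be demonstrated in plain CPython with `weakref`; the `Photo` and `Panel` classes below are hypothetical stand-ins, not Tkinter classes.

```python
import gc
import weakref


class Photo:
    """Hypothetical stand-in for an ImageTk.PhotoImage."""


class Panel:
    """Hypothetical stand-in for a tki.Label."""

    def configure(self, image):
        # like Tk, remember only an identifier, not the Python object itself
        self.image_name = id(image)


def update_without_reference(panel):
    photo = Photo()
    panel.configure(image=photo)
    return weakref.ref(photo)  # track the object without keeping it alive


def update_with_reference(panel):
    photo = Photo()
    panel.configure(image=photo)
    panel.image = photo  # the extra strong reference, as in videoLoop
    return weakref.ref(photo)


panel = Panel()
lost = update_without_reference(panel)
kept = update_with_reference(panel)
gc.collect()
print(lost() is None, kept() is not None)  # True True
```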

Otherwise, if the panel has already been initialized, we simply update it with the most recent image on Lines 71-73.

Now, let’s take a look at the takeSnapshot callback:

    def takeSnapshot(self):
        # grab the current timestamp and use it to construct the
        # output path
        ts = datetime.datetime.now()
        filename = "{}.jpg".format(ts.strftime("%Y-%m-%d_%H-%M-%S"))
        p = os.path.sep.join((self.outputPath, filename))

        # save the file
        cv2.imwrite(p, self.frame.copy())
        print("[INFO] saved {}".format(filename))

When the “Snapshot!” button is clicked, the takeSnapshot function is called. Lines 81-83 generate a filename for the frame based on the current timestamp.

We then save the frame to disk on Line 86 by making a call to cv2.imwrite.
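Pulling the filename logic into a small function makes it easy to check in isolation. `snapshot_path` below is a hypothetical helper that reproduces the path-building lines of takeSnapshot. It also exposes a quirk of the format string: timestamps only have one-second resolution, so two clicks within the same second map to the same filename, and the second write replaces the first.

```python
import datetime
import os


def snapshot_path(output_path, ts):
    """Build '<output_path>/<YYYY-MM-DD_HH-MM-SS>.jpg' from a datetime."""
    filename = "{}.jpg".format(ts.strftime("%Y-%m-%d_%H-%M-%S"))
    return os.path.sep.join((output_path, filename))


first = datetime.datetime(2020, 1, 2, 14, 3, 7, 120000)
second = datetime.datetime(2020, 1, 2, 14, 3, 7, 870000)
print(snapshot_path("output", first))
print(snapshot_path("output", first) == snapshot_path("output", second))  # True
```

Adding `%f` (microseconds) to the format string is one way to avoid collisions, at the cost of longer filenames.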

Finally, we can define our last method, onClose:

    def onClose(self):
        # set the stop event, cleanup the camera, and allow the rest of
        # the quit process to continue
        print("[INFO] closing...")
        self.stopEvent.set()
        self.vs.stop()
        self.root.quit()

This method is called when we click the “X” in the GUI to close the application. First, we set the stopEvent so our infinite videoLoop is stopped and the thread returns. We then clean up the video stream pointer and allow the root application to finish closing.

Building the Photo Booth driver

The last step in creating our Photo Booth is to build the driver script, used to initialize both the VideoStream and the PhotoBoothApp. To create the driver script, I’ve added the following code to a file named photo_booth.py:

# import the necessary packages
from __future__ import print_function
from pyimagesearch.photoboothapp import PhotoBoothApp
from imutils.video import VideoStream
import argparse
import time

# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-o", "--output", required=True,
    help="path to output directory to store snapshots")
ap.add_argument("-p", "--picamera", type=int, default=-1,
    help="whether or not the Raspberry Pi camera should be used")
args = vars(ap.parse_args())

# initialize the video stream and allow the camera sensor to warmup
print("[INFO] warming up camera...")
vs = VideoStream(usePiCamera=args["picamera"] > 0).start()
time.sleep(2.0)

# start the app
pba = PhotoBoothApp(vs, args["output"])
pba.root.mainloop()

Lines 9-14 handle parsing the command line arguments of our script. The first command line argument, --output, is required. The --output switch is simply the path to where we want to store our output snapshots.

We then have --picamera, an optional switch used to indicate whether the Raspberry Pi camera module should be used or not. By default, this value will be -1, indicating that our builtin/USB webcam should be used. We can specify a value > 0 if we want to utilize the Pi camera. You can learn more about this parameter and how to use it in conjunction with the VideoStream class in this blog post.
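To see how the two switches combine, `argparse` lets you pass an explicit argument list instead of reading `sys.argv`. The sketch below rebuilds the same parser as photo_booth.py and shows how the `args["picamera"] > 0` test turns the integer switch into the boolean passed as `usePiCamera`. The `build_parser` and `use_pi_camera` names are hypothetical, for illustration only.

```python
import argparse


def build_parser():
    # same two switches as photo_booth.py
    ap = argparse.ArgumentParser()
    ap.add_argument("-o", "--output", required=True,
        help="path to output directory to store snapshots")
    ap.add_argument("-p", "--picamera", type=int, default=-1,
        help="whether or not the Raspberry Pi camera should be used")
    return ap


def use_pi_camera(argv):
    """Hypothetical helper: parse argv and return (output, usePiCamera)."""
    args = vars(build_parser().parse_args(argv))
    return args["output"], args["picamera"] > 0


print(use_pi_camera(["--output", "output"]))                     # ('output', False)
print(use_pi_camera(["--output", "output", "--picamera", "1"]))  # ('output', True)
```

Because `--picamera` is parsed with `type=int`, any value greater than zero selects the Pi camera, while omitting the switch leaves the default of -1.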

Lines 18 and 19 initialize our VideoStream and allow the camera sensor to warm up.

Finally, Lines 22 and 23 start the PhotoBoothApp.

Running our Photo Booth

To run our photo booth application, make sure you have the necessary libraries and packages installed (as detailed in the previous blog post). After you’ve ensured your system is configured properly, execute the following command:

$ python photo_booth.py --output output

After the camera sensor warms up, you should see the following display:

Notice how our GUI contains both the live stream from our webcam along with the button used to trigger a snapshot.

After clicking the snapshot button, I can see that my output directory contains the photo I just took:

Below I have included a short video to demonstrate the Photo Booth application:

What the hell are these errors?

As I alluded to at the top of this blog post, the Photo Booth application wasn’t a complete success. Without the try/except block in the videoLoop method of PhotoBoothApp, closing the application results in the following RuntimeError:

I think this is because the panel object is garbage-collected before the thread finishes executing, but I’m not entirely sure (again, I’m not very familiar with Tkinter).

The second error happens intermittently, but again, also occurs during the window close:

As you can see, I am getting an AttributeError. It doesn’t happen all the time, only some of the time, so I’m pretty convinced that this must be a threading problem of some sort. A bit of research online has led me to believe that Tkinter doesn’t play nice with threading, but I’m not 100% sure if this is the exact issue or not.
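For readers who want to experiment, one commonly recommended alternative (not what this post does) is to drop the worker thread entirely and let Tkinter's own event loop drive the polling with `root.after(delay_ms, callback)`, so every widget update happens on the thread that runs `mainloop`. The sketch below assumes only that `root` provides Tkinter's `after` and `after_cancel` methods. `FramePump` is a hypothetical class, and `FakeRoot` is a display-free stand-in so the scheduling logic can be exercised without opening a window.

```python
class FramePump:
    """Poll frames from the Tk event loop via root.after, instead of a worker thread.

    Hypothetical sketch: `root` only needs Tkinter's after()/after_cancel().
    """

    def __init__(self, root, read_frame, show_frame, delay_ms=30):
        self.root = root
        self.read_frame = read_frame  # e.g. vs.read
        self.show_frame = show_frame  # e.g. a function that updates the Label
        self.delay_ms = delay_ms
        self._after_id = None

    def start(self):
        self._tick()

    def _tick(self):
        frame = self.read_frame()
        if frame is not None:
            self.show_frame(frame)
        # reschedule ourselves; with a real Tk root this runs on the mainloop thread
        self._after_id = self.root.after(self.delay_ms, self._tick)

    def stop(self):
        # cancel the pending callback so nothing touches widgets after close
        if self._after_id is not None:
            self.root.after_cancel(self._after_id)
            self._after_id = None


class FakeRoot:
    """Display-free stand-in that queues after() callbacks instead of timing them."""

    def __init__(self):
        self.pending = {}
        self._next_id = 0

    def after(self, delay_ms, callback):
        self._next_id += 1
        self.pending[self._next_id] = callback
        return self._next_id

    def after_cancel(self, after_id):
        self.pending.pop(after_id, None)

    def run_pending(self):
        # fire every queued callback once, like one pass of the event loop
        for after_id in list(self.pending):
            self.pending.pop(after_id)()


frames = iter(range(5))
shown = []
root = FakeRoot()
pump = FramePump(root, lambda: next(frames, None), shown.append)
pump.start()        # shows frame 0 and schedules the next tick
root.run_pending()  # shows frame 1
root.run_pending()  # shows frame 2
pump.stop()         # cancels the pending tick
root.run_pending()  # nothing left to run
print(shown)        # [0, 1, 2]
```

With a real window, the pump would be started before `root.mainloop()` and `pump.stop()` called from the `WM_DELETE_WINDOW` handler, with `show_frame` wrapping the BGR-to-ImageTk conversion and `Label.configure` code from videoLoop. This is a direction to try, not a tested fix for the errors shown above.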

Either way, I’ve decided to put this project to bed for now and let the more experienced GUI developers take over — I’ve had enough of Tkinter for the next few months.

Summary

In this blog post, I delved into my troubled past and faced my fears — building GUI applications.

I discussed how to build a simple Photo Booth application that reads frames from a live stream via OpenCV (in a threaded, efficient manner) and then displays the stream to our Tkinter GUI. Upon clicking a button in the GUI, the current frame is then saved to disk.

However, this application wasn’t a complete success.

I ran into two different types of errors: a RuntimeError and an AttributeError exception. I worked around the RuntimeError by hacking together an ugly try/except block, but the AttributeError exception is still perplexing to me, due to its intermittent nature. If you know the solution to this problem, please leave a comment in the comments section at the bottom of this post.

To be honest, I’m not sure if I’ll be doing more OpenCV + Python + GUI applications in the future. It was neat to build one or two, but my interests in computer vision are more focused on the actual processing and understanding of images, not the development of full-fledged GUIs surrounding these applications.

That said, I could be convinced to write some tutorials on how to build web applications surrounding computer vision algorithms — that’s something I’m actually good at, have done a lot of in the past, and even enjoy to a degree.

Be sure to leave your comments and suggestions in the comments section at the bottom of this post, and be sure to sign up for the PyImageSearch Newsletter using the form below!

See you next week.
