In this tutorial, you will learn how to use OpenCV’s “Deep Neural Network” (DNN) module with NVIDIA GPUs, CUDA, and cuDNN for 211-1549% faster inference.

Back in August 2017, I published my first tutorial on using OpenCV’s “deep neural network” (DNN) module for image classification.

PyImageSearch readers loved the convenience and ease-of-use of OpenCV’s dnn module so much that I then went on to publish additional tutorials on the dnn module, including:

- Object detection with deep learning and OpenCV

- Real-time object detection with deep learning and OpenCV

- YOLO object detection with OpenCV

- Mask R-CNN with OpenCV

Each one of those guides used OpenCV’s dnn module to (1) load a pre-trained network from disk, (2) make predictions on an input image, and then (3) display the results, allowing you to build your own custom computer vision/deep learning pipeline for your particular project.

However, the biggest problem with OpenCV’s dnn module was a lack of NVIDIA GPU/CUDA support — using these models you could not easily use a GPU to improve the frames per second (FPS) processing rate of your pipeline.

That wasn’t much of a problem for the Single Shot Detector (SSD) tutorials, which can easily run at 25-30+ FPS on a CPU, but it was a huge problem for YOLO and Mask R-CNN, which struggle to get above 1-3 FPS on a CPU.

That all changed in 2019’s Google Summer of Code (GSoC).

Thanks to a project led by dlib’s Davis King and implemented by Yashas Samaga, OpenCV 4.2 now supports NVIDIA GPUs for inference using OpenCV’s dnn module, improving inference speed by up to 1549%!

In today’s tutorial, I show you how to compile and install OpenCV to take advantage of your NVIDIA GPU for deep neural network inference.

Then, in next week’s tutorial, I’ll provide you with Single Shot Detector, YOLO, and Mask R-CNN code that can be used to take advantage of your GPU using OpenCV. We’ll then benchmark the results and compare them to CPU-only inference so you know which models can benefit the most from using a GPU.

To learn how to compile and install OpenCV’s “dnn” module with NVIDIA GPU, CUDA, and cuDNN support, just keep reading!

How to use OpenCV’s ‘dnn’ module with NVIDIA GPUs, CUDA, and cuDNN

In the remainder of this tutorial I will show you how to compile OpenCV from source so you can take advantage of NVIDIA GPU-accelerated inference for pre-trained deep neural networks.

Assumptions when compiling OpenCV for NVIDIA GPU support

In order to compile and install OpenCV’s “deep neural network” module with NVIDIA GPU support, I will be making the following assumptions:

- You have an NVIDIA GPU. This should be an obvious assumption. If you do not have an NVIDIA GPU, you cannot compile OpenCV’s “dnn” module with NVIDIA GPU support.

- You are using Ubuntu 18.04 (or another Debian-based distribution). When it comes to deep learning, I strongly recommend Unix-based machines over Windows systems (in fact, I don’t support Windows on the PyImageSearch blog). If you intend to use a GPU for deep learning, go with Ubuntu over macOS or Windows — it’s so much easier to configure.

- You know how to use a command line. We’ll be making use of the command line in this tutorial. If you’re unfamiliar with the command line, I recommend reading this intro to the command line first and then spending a few hours (or even days) practicing. Again, this tutorial is not for those brand new to the command line.

- You are capable of reading terminal output and diagnosing issues. Compiling OpenCV from source can be challenging if you’ve never done it before — there are a number of things that can trip you up, including missing packages, incorrect library paths, etc. Even with my detailed guides, you will likely make a mistake along the way. Don’t be discouraged! Take the time to understand the commands you’re executing, what they do, and most importantly, read the output of the commands! Don’t go blindly copying and pasting; you’ll only run into errors.

With all that said, let’s start configuring OpenCV’s “dnn” module for NVIDIA GPU inference.

Step #1: Install NVIDIA CUDA drivers, CUDA Toolkit, and cuDNN

This tutorial makes the assumption that you already have:

- An NVIDIA GPU

- The CUDA drivers for that particular GPU installed

- CUDA Toolkit and cuDNN configured and installed

If you have an NVIDIA GPU on your system but have yet to install the CUDA drivers, CUDA Toolkit, and cuDNN, you will need to configure your machine first — I will not be covering CUDA configuration and installation in this guide.

To learn how to install the NVIDIA CUDA drivers, CUDA Toolkit, and cuDNN, I recommend you read my Ubuntu 18.04 and TensorFlow/Keras GPU install guide — once you have the proper NVIDIA drivers and toolkits installed, you can come back to this tutorial.

Step #2: Install OpenCV and “dnn” GPU dependencies

The first step in configuring OpenCV’s “dnn” module for NVIDIA GPU inference is to install the proper dependencies:

$ sudo apt-get update
$ sudo apt-get upgrade
$ sudo apt-get install build-essential cmake unzip pkg-config
$ sudo apt-get install libjpeg-dev libpng-dev libtiff-dev
$ sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev
$ sudo apt-get install libv4l-dev libxvidcore-dev libx264-dev
$ sudo apt-get install libgtk-3-dev
$ sudo apt-get install libatlas-base-dev gfortran
$ sudo apt-get install python3-dev

Most of these packages should already be installed if you followed my Ubuntu 18.04 Deep Learning configuration guide, but I would recommend running the above commands just to be safe.
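If you want a quick sanity check before moving on, here is a minimal Python sketch that reports which command-line tools are missing from your PATH. The tool list is my own assumption about what the later steps call directly; it is not a complete check of the apt packages above.

```python
import shutil

# Command-line tools the later steps invoke directly. This list is an
# assumption for illustration; it does not cover every apt package.
REQUIRED_TOOLS = ["gcc", "g++", "make", "cmake", "unzip", "pkg-config", "wget"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools from `tools` that cannot be found on the PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All build tools were found on the PATH.")
```

An empty result only means the executables exist; the development libraries (libjpeg-dev and friends) still have to come from apt.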

Step #3: Download OpenCV source code

There is no “pip-installable” version of OpenCV that comes with NVIDIA GPU support — instead, we’ll need to compile OpenCV from scratch with the proper NVIDIA GPU configurations set.

The first step in doing so is to download the source code for OpenCV v4.2:

$ cd ~
$ wget -O opencv.zip https://github.com/opencv/opencv/archive/4.2.0.zip
$ wget -O opencv_contrib.zip https://github.com/opencv/opencv_contrib/archive/4.2.0.zip
$ unzip opencv.zip
$ unzip opencv_contrib.zip
$ mv opencv-4.2.0 opencv
$ mv opencv_contrib-4.2.0 opencv_contrib
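Note that both archives use the same release number. As a small illustration, the sketch below builds the two URLs from a single version string so they cannot drift apart. The helper name is hypothetical, and the URL pattern is simply the one used in the wget commands above.

```python
GITHUB_ARCHIVE = "https://github.com/opencv/{repo}/archive/{version}.zip"


def archive_urls(version):
    """Return the archive URLs for opencv and opencv_contrib at one version."""
    return {
        repo: GITHUB_ARCHIVE.format(repo=repo, version=version)
        for repo in ("opencv", "opencv_contrib")
    }


if __name__ == "__main__":
    # Print the wget commands for review instead of downloading anything.
    for repo, url in archive_urls("4.2.0").items():
        print(f"wget -O {repo}.zip {url}")
```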

We can now move on with configuring our build.

Step #4: Configure Python virtual environment

If you followed my Ubuntu 18.04, TensorFlow, and Keras Deep Learning configuration guide, then you should already have virtualenv and virtualenvwrapper installed:

- If your machine is already configured, skip to the mkvirtualenv commands in this section.

- Otherwise, follow along with each of these steps to configure your machine.

Python virtual environments are a best practice when it comes to Python development. They allow you to test different versions of Python libraries in sequestered, independent development and production environments. I use them daily and you should too.

If you haven’t yet installed pip, Python’s package manager, you can do so using the following command:

$ wget https://bootstrap.pypa.io/get-pip.py
$ sudo python3 get-pip.py

Once pip is installed, you can install both virtualenv and virtualenvwrapper:

$ sudo pip install virtualenv virtualenvwrapper
$ sudo rm -rf ~/get-pip.py ~/.cache/pip

You then need to open up your ~/.bashrc file and update it to automatically load virtualenv/virtualenvwrapper whenever you open up a terminal.

I prefer to use the nano text editor, but you can use whichever editor you are most comfortable with:

$ nano ~/.bashrc

Once you have the ~/.bashrc file open, scroll to the bottom of the file, and insert the following:

# virtualenv and virtualenvwrapper
export WORKON_HOME=$HOME/.virtualenvs
export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3
source /usr/local/bin/virtualenvwrapper.sh

From there, save the file and exit the editor (in nano: ctrl + x, y, enter).

You can then reload your ~/.bashrc file in your terminal session:

$ source ~/.bashrc

You only need to run the above command once — since you updated your ~/.bashrc file, the virtualenv/virtualenvwrapper environment variables will be automatically set whenever you open a new terminal window.

The final step is to create your Python virtual environment:

$ mkvirtualenv opencv_cuda -p python3

The mkvirtualenv command creates a new Python virtual environment named opencv_cuda using Python 3.

You should then install NumPy into the opencv_cuda environment:

$ pip install numpy

If you ever close your terminal or deactivate your Python virtual environment, you can access it again via the workon command:

$ workon opencv_cuda

If you are new to Python virtual environments, I suggest you take a second and read up on how they work — they are a best practice in the Python world.

If you choose not to use them, that’s perfectly fine, but keep in mind that your choice doesn’t absolve you from learning proper Python best practices. Take the time now to invest in your knowledge.
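If you are ever unsure whether a terminal is actually inside the opencv_cuda environment, a short Python check can tell you. This is a minimal sketch with function names of my own choosing. It relies on the sys.prefix/sys.base_prefix comparison and on the VIRTUAL_ENV variable that the activation script exports.

```python
import os
import sys


def in_virtualenv():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Python 3's venv and recent virtualenv releases set sys.base_prefix;
    # older virtualenv releases set sys.real_prefix instead.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    return hasattr(sys, "real_prefix") or base_prefix != sys.prefix


def active_environment_name():
    """Return the name of the active environment, or None outside of one."""
    # The activation script (and therefore workon) exports VIRTUAL_ENV.
    path = os.environ.get("VIRTUAL_ENV")
    return os.path.basename(path) if path else None


if __name__ == "__main__":
    print("inside a virtual environment:", in_virtualenv())
    print("environment name:", active_environment_name())
    print("interpreter:", sys.executable)
```

Run it with the same python you intend to pass to cmake; the interpreter path it prints should live under ~/.virtualenvs/opencv_cuda.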

Step #5: Determine your CUDA architecture version

When compiling OpenCV’s “dnn” module with NVIDIA GPU support, we’ll need to determine our NVIDIA GPU architecture version:

- This version number is a requirement when we set the CUDA_ARCH_BIN variable in our cmake command in the next section.

- The NVIDIA GPU architecture version is dependent on which GPU you are using, so ensure you know your GPU model ahead of time.

- Failing to correctly set your CUDA_ARCH_BIN variable can result in OpenCV still compiling but failing to use your GPU for inference (making it troublesome to diagnose and debug).

One of the easiest ways to determine your NVIDIA GPU architecture version is to simply use the nvidia-smi command:

$ nvidia-smi
Mon Jan 27 14:11:32 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 410.104      Driver Version: 410.104      CUDA Version: 10.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla V100-SXM2...  Off  | 00000000:00:04.0 Off |                    0 |
| N/A   35C    P0    38W / 300W |      0MiB / 16130MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+

Inspecting the output, you can see that I am using an NVIDIA Tesla V100 GPU. Make sure you run the nvidia-smi command yourself to verify your GPU model before continuing.

Now that I have my NVIDIA GPU model, I can move on to determining the architecture version.

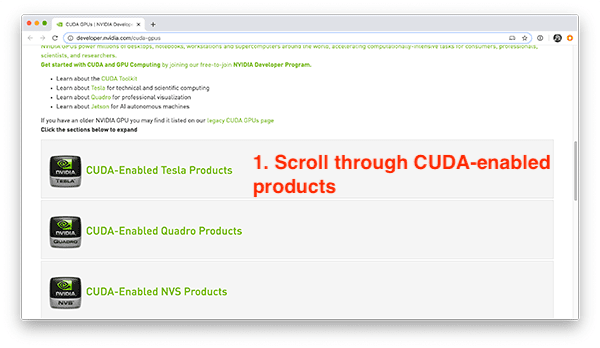

You can find your NVIDIA GPU architecture version for your particular GPU using this page:

https://developer.nvidia.com/cuda-gpus

Scroll down to the list of CUDA-Enabled Tesla, Quadro, NVS, GeForce/Titan, and Jetson products. Since I am using a V100, I’ll click on the “CUDA-Enabled Tesla Products” section and scroll down until I find my V100 GPU.

As you can see, my NVIDIA GPU architecture version is 7.0 — you should perform the same process for your own GPU model.

Once you’ve identified your NVIDIA GPU architecture version, make note of it, and then proceed to the next section.
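To make the bookkeeping concrete, here is a minimal Python sketch that stores a few architecture versions and converts them into the two-digit form cmake prints in its summary (for example, 7.0 becomes 70). Only the V100 entry comes from this tutorial; the other entries are illustrative and should be confirmed on the NVIDIA page above. The 5.3 minimum is an assumption taken from a CMake error message that a reader reported for OpenCV 4.2.0.

```python
# Architecture versions for a few GPUs. The V100 value comes from this
# tutorial; the remaining entries are illustrative and should be confirmed
# against https://developer.nvidia.com/cuda-gpus.
KNOWN_ARCHS = {
    "Tesla V100": "7.0",
    "Tesla T4": "7.5",
    "GeForce RTX 2080 Ti": "7.5",
    "GeForce GTX 1050": "6.1",
    "Jetson Nano": "5.3",
}

# Assumed minimum for the CUDA "dnn" backend in OpenCV 4.2.0, based on a
# reader-reported CMake error ("requires CC 5.3 or higher").
MINIMUM_ARCH = (5, 3)


def parse_arch(version):
    """Turn a string such as "7.0" into a comparable (major, minor) tuple."""
    major, minor = version.strip().split(".")
    return int(major), int(minor)


def cmake_arch_label(version):
    """Return the form cmake prints in its summary, e.g. "7.0" -> "70"."""
    major, minor = parse_arch(version)
    return f"{major}{minor}"


def supports_dnn_cuda(version, minimum=MINIMUM_ARCH):
    """Return True when the architecture meets the assumed minimum."""
    return parse_arch(version) >= minimum


if __name__ == "__main__":
    for gpu, arch in KNOWN_ARCHS.items():
        print(gpu, arch, cmake_arch_label(arch), supports_dnn_cuda(arch))
```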

Step #6: Configure OpenCV with NVIDIA GPU support

At this point we are ready to configure our build using the cmake command.

The cmake command scans for dependencies, configures the build, and generates the files necessary for make to actually compile OpenCV.

To configure the build, start by making sure you are inside the Python virtual environment you are using to compile OpenCV with NVIDIA GPU support:

$ workon opencv_cuda

Next, change directory to where you downloaded the OpenCV source code, and then create a build directory:

$ cd ~/opencv
$ mkdir build
$ cd build

You can then run the following cmake command, making sure you set the CUDA_ARCH_BIN variable based on your NVIDIA GPU architecture version, which you found in the previous section:

$ cmake -D CMAKE_BUILD_TYPE=RELEASE \
    -D CMAKE_INSTALL_PREFIX=/usr/local \
    -D INSTALL_PYTHON_EXAMPLES=ON \
    -D INSTALL_C_EXAMPLES=OFF \
    -D OPENCV_ENABLE_NONFREE=ON \
    -D WITH_CUDA=ON \
    -D WITH_CUDNN=ON \
    -D OPENCV_DNN_CUDA=ON \
    -D ENABLE_FAST_MATH=1 \
    -D CUDA_FAST_MATH=1 \
    -D CUDA_ARCH_BIN=7.0 \
    -D WITH_CUBLAS=1 \
    -D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib/modules \
    -D HAVE_opencv_python3=ON \
    -D PYTHON_EXECUTABLE=~/.virtualenvs/opencv_cuda/bin/python \
    -D BUILD_EXAMPLES=ON ..

Here you can see that we are compiling OpenCV with both CUDA and cuDNN support enabled (WITH_CUDA and WITH_CUDNN, respectively).

We also instruct OpenCV to build the “dnn” module with CUDA support (OPENCV_DNN_CUDA).

We also turn on ENABLE_FAST_MATH, CUDA_FAST_MATH, and WITH_CUBLAS for optimization purposes.

The most important, and error-prone, configuration is your CUDA_ARCH_BIN — make sure you set it correctly!

The CUDA_ARCH_BIN variable must map to your NVIDIA GPU architecture version found in the previous section.
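Because only CUDA_ARCH_BIN changes from machine to machine, one way to avoid hand-editing mistakes is to generate the command. The sketch below is a hypothetical helper that rebuilds the same option list as the cmake command above and prints it for review. It assumes the directory layout used in this tutorial (~/opencv_contrib and ~/.virtualenvs/opencv_cuda).

```python
import os
import shlex


def cmake_command(arch_bin, venv="opencv_cuda", source_dir=".."):
    """Return the cmake invocation from this step as a list of arguments."""
    home = os.path.expanduser("~")
    options = {
        "CMAKE_BUILD_TYPE": "RELEASE",
        "CMAKE_INSTALL_PREFIX": "/usr/local",
        "INSTALL_PYTHON_EXAMPLES": "ON",
        "INSTALL_C_EXAMPLES": "OFF",
        "OPENCV_ENABLE_NONFREE": "ON",
        "WITH_CUDA": "ON",
        "WITH_CUDNN": "ON",
        "OPENCV_DNN_CUDA": "ON",
        "ENABLE_FAST_MATH": "1",
        "CUDA_FAST_MATH": "1",
        "CUDA_ARCH_BIN": arch_bin,  # the only value that depends on your GPU
        "WITH_CUBLAS": "1",
        "OPENCV_EXTRA_MODULES_PATH": os.path.join(home, "opencv_contrib", "modules"),
        "HAVE_opencv_python3": "ON",
        "PYTHON_EXECUTABLE": os.path.join(home, ".virtualenvs", venv, "bin", "python"),
        "BUILD_EXAMPLES": "ON",
    }
    command = ["cmake"]
    for name, value in options.items():
        command += ["-D", f"{name}={value}"]
    return command + [source_dir]


if __name__ == "__main__":
    # Print the command for review instead of running it.
    print(" ".join(shlex.quote(arg) for arg in cmake_command("7.0")))
```

Printing rather than executing is deliberate: you still get to read the full command before it touches your build directory.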

If you set this value incorrectly, OpenCV may still compile, but you’ll receive the following error message when you try to perform inference using the dnn module:

File "ssd_object_detection.py", line 74, in <module>
    detections = net.forward()
cv2.error: OpenCV(4.2.0) /home/a_rosebrock/opencv/modules/dnn/src/cuda/execution.hpp:52: error: (-217:Gpu API call) invalid device function in function 'make_policy'

If you encounter this error, then you know your CUDA_ARCH_BIN was not set properly.

You can verify that your cmake command executed properly by looking at the output:

...
--   NVIDIA CUDA:                   YES (ver 10.0, CUFFT CUBLAS FAST_MATH)
--     NVIDIA GPU arch:             70
--     NVIDIA PTX archs:
--
--   cuDNN:                         YES (ver 7.6.0)
...

Here you can see that OpenCV and cmake have correctly identified my CUDA-enabled GPU, NVIDIA GPU architecture version, and cuDNN version.
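If you would rather not eyeball the summary, here is a minimal Python sketch that checks it for you. It assumes you have captured the cmake output as text; the sample string simply mirrors the lines shown above, and the function names are my own.

```python
import re

SAMPLE_SUMMARY = """\
--   NVIDIA CUDA:                   YES (ver 10.0, CUFFT CUBLAS FAST_MATH)
--     NVIDIA GPU arch:             70
--     NVIDIA PTX archs:
--
--   cuDNN:                         YES (ver 7.6.0)
"""


def summary_value(summary, key):
    """Return the text after `key:` in a cmake summary, or None if absent."""
    pattern = r"^(?:--)?[ \t]*" + re.escape(key) + r":[ \t]*(.*)$"
    match = re.search(pattern, summary, flags=re.MULTILINE)
    return match.group(1).strip() if match else None


def check_gpu_summary(summary, expected_arch):
    """Return a list of problems found in the summary (empty means OK)."""
    problems = []
    for key in ("NVIDIA CUDA", "cuDNN"):
        value = summary_value(summary, key)
        if value is None or not value.startswith("YES"):
            problems.append(f"{key} is not enabled")
    archs = (summary_value(summary, "NVIDIA GPU arch") or "").split()
    if expected_arch not in archs:
        problems.append(f"GPU arch {expected_arch} is not in {archs}")
    return problems


if __name__ == "__main__":
    print(check_gpu_summary(SAMPLE_SUMMARY, "70") or "summary looks good")
```

Once OpenCV is installed, a similar summary is available from cv2.getBuildInformation(), so the same check could be repeated against the finished build.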

I also like to look at the OpenCV modules section, in particular the To be built portion:

-- OpenCV modules:
--   To be built: aruco bgsegm bioinspired calib3d ccalib core cudaarithm cudabgsegm cudacodec cudafeatures2d cudafilters cudaimgproc cudalegacy cudaobjdetect cudaoptflow cudastereo cudawarping cudev datasets dnn dnn_objdetect dnn_superres dpm face features2d flann fuzzy gapi hdf hfs highgui img_hash imgcodecs imgproc line_descriptor ml objdetect optflow phase_unwrapping photo plot python3 quality reg rgbd saliency shape stereo stitching structured_light superres surface_matching text tracking ts video videoio videostab xfeatures2d ximgproc xobjdetect xphoto
--   Disabled: world
--   Disabled by dependency: -
--   Unavailable: cnn_3dobj cvv freetype java js matlab ovis python2 sfm viz
--   Applications: tests perf_tests examples apps
--   Documentation: NO
--   Non-free algorithms: YES

Here you can see there are a number of cuda* modules, indicating that cmake is instructing OpenCV to build our CUDA-enabled modules (including OpenCV’s “dnn” module).

You can also look at the Python 3 section to verify that both your Interpreter and numpy point to your Python virtual environment:

-- Python 3:
--   Interpreter: /home/a_rosebrock/.virtualenvs/opencv_cuda/bin/python3 (ver 3.5.3)
--   Libraries: /usr/lib/x86_64-linux-gnu/libpython3.5m.so (ver 3.5.3)
--   numpy: /home/a_rosebrock/.virtualenvs/opencv_cuda/lib/python3.5/site-packages/numpy/core/include (ver 1.18.1)
--   install path: lib/python3.5/site-packages/cv2/python-3.5

Make sure you take note of the install path as well!

You’ll be needing that path when we finish the OpenCV install.

Step #7: Compile OpenCV with “dnn” GPU support

Provided cmake exited without an error, you can then compile OpenCV with NVIDIA GPU support using the following command:

$ make -j8

You can replace the 8 with the number of cores available on your processor.

Since my processor has eight cores, I supply an 8. If your processor only has four cores, replace the 8 with a 4 .
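If you are not sure how many cores you have, Python can report it. A minimal sketch follows; the optional reserve parameter is my own addition for keeping a core or two free on a machine you are also working on. Note that os.cpu_count() counts logical CPUs.

```python
import os


def make_jobs(reserve=0):
    """Return a value for make's -j flag, leaving `reserve` cores free."""
    cores = os.cpu_count() or 1  # cpu_count() can return None
    return max(1, cores - reserve)


if __name__ == "__main__":
    print(f"make -j{make_jobs()}")
```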

My compile completed without an error.

A common error you may see is the following:

$ make
make: *** No targets specified and no makefile found. Stop.

If that happens, go back to Step #6 and check your cmake output, because the cmake command likely exited with an error. When cmake fails, it cannot generate the build files that make needs, so make reports that there is nothing to build.
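A quick way to tell whether cmake finished its job is to look for the Makefile it should have generated in the build directory. The following is a minimal sketch with a hypothetical helper name, demonstrated on a throwaway directory so it is safe to run anywhere.

```python
import os
import tempfile


def build_is_configured(build_dir):
    """Return True when cmake has generated a Makefile in `build_dir`."""
    return os.path.isfile(os.path.join(build_dir, "Makefile"))


if __name__ == "__main__":
    # Demonstrate on a temporary directory rather than a real build tree.
    with tempfile.TemporaryDirectory() as build_dir:
        print("before cmake:", build_is_configured(build_dir))
        open(os.path.join(build_dir, "Makefile"), "w").close()
        print("after cmake: ", build_is_configured(build_dir))
```

For the real build you would point it at your own build directory, for example the ~/opencv/build directory created in Step #6.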

Step #8: Install OpenCV with “dnn” GPU support

Provided your make command from Step #7 completed successfully, you can now install OpenCV via the following:

$ sudo make install
$ sudo ldconfig

The final step is to sym-link the OpenCV library into your Python virtual environment.

To do so, you need to know the location of where the OpenCV bindings were installed — you can determine that path via the install path configuration in Step #6.

In my case, the install path was lib/python3.5/site-packages/cv2/python-3.5.

That means that my OpenCV bindings should be in /usr/local/lib/python3.5/site-packages/cv2/python-3.5.

I can confirm the location by using the ls command:

$ ls -l /usr/local/lib/python3.5/site-packages/cv2/python-3.5
total 7168
-rw-r--r-- 1 root staff 7339240 Jan 17 18:59 cv2.cpython-35m-x86_64-linux-gnu.so

Here you can see that my OpenCV bindings are named cv2.cpython-35m-x86_64-linux-gnu.so — yours should have a similar name based on your Python version and CPU architecture.
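To predict what the file should be called on your machine, you can ask the interpreter for its extension-module suffix. This is a minimal sketch with a helper name of my own; run it with the Python from your virtual environment.

```python
import importlib.machinery


def expected_binding_name(module="cv2"):
    """Return the most specific file name this interpreter uses for `module`."""
    # EXTENSION_SUFFIXES lists the suffixes the interpreter accepts, most
    # specific first (for example ".cpython-35m-x86_64-linux-gnu.so").
    return module + importlib.machinery.EXTENSION_SUFFIXES[0]


if __name__ == "__main__":
    print(expected_binding_name())
```

If the name it prints does not match the file in your install path, OpenCV was probably built against a different Python than the one you are running.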

Now that I know the location of my OpenCV bindings, I need to sym-link them into my Python virtual environment using the ln command:

$ cd ~/.virtualenvs/opencv_cuda/lib/python3.5/site-packages/
$ ln -s /usr/local/lib/python3.5/site-packages/cv2/python-3.5/cv2.cpython-35m-x86_64-linux-gnu.so cv2.so

Take a second to first verify your file paths. The ln command will “silently fail” if the path to OpenCV’s bindings is incorrect: it creates the link without complaint even when the target does not exist.

Again, do not blindly copy and paste the command above! Double and triple-check your file paths!
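Since a bad sym-link produces no error message, it can help to check the link afterwards. Here is a minimal Python sketch with a hypothetical helper name. The demonstration builds one good link and one broken link inside a temporary directory, so nothing on your system is touched.

```python
import os
import tempfile


def link_status(link_path):
    """Describe whether `link_path` is a sym-link and whether its target exists."""
    if not os.path.islink(link_path):
        return "not a sym-link"
    # os.path.exists() follows the link, so it is False for a dangling link.
    if not os.path.exists(link_path):
        return "broken: target " + os.readlink(link_path) + " does not exist"
    return "ok"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "cv2.cpython-35m-x86_64-linux-gnu.so")
        open(target, "w").close()

        good = os.path.join(tmp, "cv2.so")
        os.symlink(target, good)
        print(link_status(good))

        bad = os.path.join(tmp, "cv2_typo.so")
        os.symlink(os.path.join(tmp, "no-such-file.so"), bad)
        print(link_status(bad))
```

On your own machine you would pass the path of the cv2.so link inside your virtual environment’s site-packages directory.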

Step #9: Verify that OpenCV uses your GPU with the “dnn” module

The final step is to verify that:

- OpenCV can be imported in a Python shell

- OpenCV can access your NVIDIA GPU for inference via the dnn module

Let’s start by verifying that we can import the cv2 library:

$ workon opencv_cuda
$ python
Python 3.5.3 (default, Sep 27 2018, 17:25:39)
[GCC 6.3.0 20170516] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> import cv2
>>> cv2.__version__
'4.2.0'
>>>

Note that I am using the workon command to first access my Python virtual environment — you should be doing the same if you are using virtual environments.

From there I import the cv2 library and display the version.

Sure enough, the OpenCV version reported is v4.2, which is indeed the version we compiled from source.

Next, let’s verify that OpenCV’s “dnn” module can access our GPU. To ensure OpenCV’s “dnn” module uses the GPU, add the following two lines immediately after a model is loaded and before inference is performed:

net.setPreferableBackend(cv2.dnn.DNN_BACKEND_CUDA)
net.setPreferableTarget(cv2.dnn.DNN_TARGET_CUDA)

The above two lines instruct OpenCV that our NVIDIA GPU should be used for inference.
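To show where those two lines sit relative to model loading, here is a minimal sketch. The helper names prefer_cuda and load_network are hypothetical, and the Caffe loader is only an assumption based on the MobileNet SSD files used later in this post. The first helper receives the dnn module as an argument so it can be exercised without OpenCV installed.

```python
def prefer_cuda(net, dnn, use_gpu=True):
    """Ask an already-loaded network to run on CUDA when `use_gpu` is set."""
    if use_gpu:
        net.setPreferableBackend(dnn.DNN_BACKEND_CUDA)
        net.setPreferableTarget(dnn.DNN_TARGET_CUDA)
    return net


def load_network(prototxt, model, use_gpu=True):
    """Load a Caffe model with OpenCV and optionally target the GPU."""
    import cv2  # imported here so prefer_cuda stays usable without OpenCV

    net = cv2.dnn.readNetFromCaffe(prototxt, model)
    return prefer_cuda(net, cv2.dnn, use_gpu)
```

With the files from this post, a call such as load_network("MobileNetSSD_deploy.prototxt", "MobileNetSSD_deploy.caffemodel") would return a network ready for net.forward().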

To see an example of an OpenCV + GPU model in action, start by using the “Downloads” section of this tutorial to download our example source code and pre-trained SSD object detector.

From there, open up a terminal and execute the following command:

$ python ssd_object_detection.py --prototxt MobileNetSSD_deploy.prototxt \
    --model MobileNetSSD_deploy.caffemodel \
    --input guitar.mp4 --output output.avi \
    --display 0 --use-gpu 1
[INFO] setting preferable backend and target to CUDA...
[INFO] accessing video stream...
[INFO] elasped time: 3.75
[INFO] approx. FPS: 65.90

The --use-gpu 1 flag instructs OpenCV to use our NVIDIA GPU for inference via OpenCV’s “dnn” module.

As you can see, I am obtaining ~65.90 FPS using my NVIDIA Tesla V100 GPU.
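For readers curious how a script might accept those flags, here is a minimal argparse sketch. It is not the source of ssd_object_detection.py; the flag names come from the command above, while the defaults and help strings are my own assumptions (the CPU-only run omits --use-gpu, so a default of 0 seems reasonable).

```python
import argparse


def build_parser():
    """Build a parser for the flags used in the command above."""
    parser = argparse.ArgumentParser(description="SSD object detection")
    parser.add_argument("--prototxt", required=True,
                        help="path to the Caffe deploy prototxt file")
    parser.add_argument("--model", required=True,
                        help="path to the pre-trained Caffe model")
    parser.add_argument("--input", default="",
                        help="path to an input video file")
    parser.add_argument("--output", default="",
                        help="path to an output video file")
    parser.add_argument("--display", type=int, default=1,
                        help="1 to show frames on screen, 0 to skip")
    parser.add_argument("--use-gpu", type=int, default=0,
                        help="1 to run inference with CUDA, 0 for CPU")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args([
        "--prototxt", "MobileNetSSD_deploy.prototxt",
        "--model", "MobileNetSSD_deploy.caffemodel",
        "--use-gpu", "1",
    ])
    print("use GPU:", bool(args.use_gpu))
```

argparse converts --use-gpu into the attribute args.use_gpu, which a script could then test before setting the CUDA backend and target.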

I can then compare my output to using just the CPU (i.e., no GPU):

$ python ssd_object_detection.py --prototxt MobileNetSSD_deploy.prototxt \
    --model MobileNetSSD_deploy.caffemodel --input guitar.mp4 \
    --output output.avi --display 0
[INFO] accessing video stream...
[INFO] elasped time: 11.69
[INFO] approx. FPS: 21.13

Here I am only obtaining ~21.13 FPS, implying that by using the GPU, I’m obtaining a 3x performance boost!
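The arithmetic behind that claim is easy to reproduce. The short sketch below uses only the numbers reported in the two runs above. It works out to roughly a 3.1x speedup, or a little over 210% faster, which lines up with the low end of the 211-1549% range quoted in this post. Multiplying FPS by elapsed time also confirms that both runs processed about the same number of frames.

```python
def speedup(gpu_fps, cpu_fps):
    """Return how many times faster the GPU run is than the CPU run."""
    return gpu_fps / cpu_fps


def percent_faster(gpu_fps, cpu_fps):
    """Return the improvement as a percentage over the CPU baseline."""
    return (speedup(gpu_fps, cpu_fps) - 1.0) * 100.0


def frames_processed(fps, elapsed_seconds):
    """Estimate the frame count from the reported FPS and elapsed time."""
    return round(fps * elapsed_seconds)


if __name__ == "__main__":
    gpu_fps, gpu_elapsed = 65.90, 3.75   # GPU run reported above
    cpu_fps, cpu_elapsed = 21.13, 11.69  # CPU run reported above

    print(f"speedup: {speedup(gpu_fps, cpu_fps):.2f}x")
    print(f"percent faster: {percent_faster(gpu_fps, cpu_fps):.0f}%")
    print("frames (GPU run):", frames_processed(gpu_fps, gpu_elapsed))
    print("frames (CPU run):", frames_processed(cpu_fps, cpu_elapsed))
```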

In next week’s blog post, I’ll be providing you with a detailed walkthrough of the code.

Help! I’m encountering a “make_policy” error

It is super, super important to check, double-check, and triple-check the CUDA_ARCH_BIN variable.

If you set it incorrectly, you may encounter the following error when running the ssd_object_detection.py script from the previous section:

File "ssd_object_detection.py", line 74, in <module>
    detections = net.forward()
cv2.error: OpenCV(4.2.0) /home/a_rosebrock/opencv/modules/dnn/src/cuda/execution.hpp:52: error: (-217:Gpu API call) invalid device function in function 'make_policy'

That error indicates that your CUDA_ARCH_BIN value was set incorrectly when running cmake.

You’ll need to go back to Step #5 (where you identify your NVIDIA CUDA architecture version) and then re-run both cmake and make.

I would also suggest you delete your build directory and recreate it before running cmake and make:

$ cd ~/opencv
$ rm -rf build
$ mkdir build
$ cd build

From there you can re-run both cmake and make — doing so in a fresh build directory will ensure you have a clean build and any previous (incorrect) configurations are gone.
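If you want your own scripts to point at the likely cause, one option is to recognize the error text and print a hint. This is a minimal sketch with a hypothetical helper name; it matches on the two phrases that appear in the error message above and nothing more.

```python
def explain_dnn_error(message):
    """Return a hint for the make_policy error, or None if unrecognized."""
    if "invalid device function" in message and "make_policy" in message:
        return (
            "CUDA_ARCH_BIN probably does not match this GPU. Look up the "
            "architecture version again, then re-run cmake and make in a "
            "fresh build directory."
        )
    return None


if __name__ == "__main__":
    sample = ("error: (-217:Gpu API call) invalid device function "
              "in function 'make_policy'")
    print(explain_dnn_error(sample))
```

In a real script you could wrap net.forward() in a try/except for cv2.error and pass str(err) to this helper before re-raising.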

Summary

In this tutorial you learned how to compile and install OpenCV’s “deep neural network” (DNN) module with NVIDIA GPU, CUDA, and cuDNN support, allowing you to obtain 211-1549% faster inference and prediction.

Using OpenCV’s “dnn” module with NVIDIA GPU support requires you to compile from source; you cannot “pip install” OpenCV with GPU support.

In next week’s tutorial, I’ll benchmark popular deep learning models for both CPU and GPU inference speed, including:

- Single Shot Detectors (SSDs)

- You Only Look Once (YOLO)

- Mask R-CNNs

Using this information, you’ll know which models will benefit the most using a GPU, ensuring you can make an educated decision on whether or not a GPU is a good choice for your particular project.

To download the source code to this post (and be notified when future tutorials are published here on PyImageSearch), just enter your email address in the form below!

Thank you so much for this tutorial. I have been trying to use CUDA in my project for about two weeks, and I think this tutorial will help me resolve my error. Thanks a lot.

Thanks Aakar! And good luck with your project.

Adrian, this is great. I will take a peek and integrate some of your build recommendations.

As a piece of information, a while back we started releasing a “cuda_tensorflow_opencv” container, and have updated it in December to integrate the DNN updates based on Nvidia’s officially published cudnn container (that can be redistributed).

https://github.com/datamachines/cuda_tensorflow_opencv

Welcome your comments and feedback, but so far made my life much easier 🙂

That’s awesome, thanks for sharing Martial!

Nice tutorial!

Can this trick be used on an NVIDIA Xavier or Jetson Nano in order to run the inference of custom Faster RCNN models?

I’m not sure as NVIDIA provides their own .img for the Nano. I haven’t tried these instructions on the Nano but I’m guessing there would be a few hangups along the way. My guess is that NVIDIA will eventually release a new .img file for the Nano that includes support for OpenCV’s “dnn” module.

Hi.

I’ve been using a Xavier with the stock img nVidia provides.

I think later I’ll test this build.

I’ve been using tensorflow as inferencer for now; but maybe with this I could use OpenCV-DNN for inferencing.

I’m using heavy models (FRCNN-1920×1080, or FRCNN-1920-715). It should work… But currently I don’t have the time to test it thoroughly.

I have the Jetson Nano and succeeded to install/build OpenCV 4.2.0 according to your instructions. But it takes a very long time, for me around 6 hours so better do it over night. Also don’t forget to setup a 4G swapfile before starting

The result? Partly disappointing and partly better than before

Running your sample (without showing the video and without making an avi) looks not too good I think:

$ python3 ssd_object_detection.py --prototxt MobileNetSSD_deploy.prototxt --model MobileNetSSD_deploy.caffemodel --input guitar.mp4 --output "" --display -1 --use-gpu 1

[INFO] setting preferable backend and target to CUDA…

[INFO] accessing video stream…

[INFO] elasped time: 198.32

[INFO] approx. FPS: 1.25

Testing with my own Python scripts analyzing just one single picture

MobileNetSSD: CUDA: 0.65-0.7 sec CPU: 0.6 sec

YOLO V3 (full version): CUDA: 0.77 sec CPU: 11.3 sec

So for YOLO, a huge improvement!!

If I compare this with using the Jetson inference the same picture is analyzed in just 0.15 sec

I believe I have installed everything correctly, followed your instructions but I think your V100 is a monster compared to the tiny Jetson Nano

Kind regards, Walter

Thanks for sharing, Walter.

The CUDA backend in OpenCV DNN relies on cuDNN for convolutions. cuDNN performs depthwise convolutions very poorly on most devices. Hence, MobileNet is very slow.

MobileNet can be faster on some devices (like RTX 2080 Ti where you get 500FPS). It just depends on your luck whether cuDNN has an optimized kernel for depthwise convolution for your device.

More information here: https://github.com/opencv/opencv/pull/14827#issuecomment-528930005

A close friend of mine reported getting 5FPS on YOLOv3. Not really sure why you have less than 2FPS. You can try running this program to measure YOLOv3 FPS: https://gist.github.com/YashasSamaga/e2b19a6807a13046e399f4bc3cca3a49

You can get even better FPS on YOLOv3 if you use a trick mentioned here: https://github.com/opencv/opencv/pull/14827#issuecomment-568156546

Thanks Yashas!

Thanks Adrian, was looking forward to this. I have tried these instructions on the Jetson Nano. I worked under the assumption that all prerequisites were already met, like the CUDA Driver and such. I downloaded the latest image from Nvidia to start with. The whole procedure in this tutorial can be executed without error. And it seems to work. But somehow inference using the example and gpu enabled is slower than using cpu. So while it works, and unless I missed something, this works but the Jetson doesn’t speed up things.

Thanks Hans. I haven’t personally tried with the Nano, but if you read through the comments section of this post you’ll see that others have also compiled OpenCV with NVIDIA GPU support for the Nano but didn’t find the results to be very good.

Thanks Adrian! Will the deep learning AMI dl4cv python environment be updated with NVIDIA support for opencv?

If not (or not soon), any advice/pointers on how to update? Is it as straightforward as pip uninstalling opencv-contrib-python and then using the instructions above?

Yes, I’ll be issuing a new DL4CV AMI in the near future. Right now I’m wrapping up Raspberry Pi for Computer Vision, then I can move back to the AMI.

As for uninstalling OpenCV via pip, yes, it’s as simple as:

$ pip uninstall opencv-contrib-python

From there you can follow the instructions in this post.

Timing couldn’t be better! My team and I are going to try compiling on the nano, will be back with more info in coming days!

Thanks Guilherme! But unfortunately the Nano speed doesn’t seem to be very good if you read through the comments section of this post.

Hi, Adrian Rosebrock

It is very nice.

I am still working with python 2.7 because of the robot’s package (ROS).

do you think that it will work well with python 2.7 and GTX 1050?

I haven’t supported Python 2.7 in quite some time but I know OpenCV still supports Python 2.7. Provided you compile OpenCV with Python 2.7 support I don’t see why it wouldn’t work.

Well… My GTX960 is not qualified.. 🙁

“CMake Error at modules/dnn/CMakeLists.txt:99 (message):

CUDA backend for DNN module requires CC 5.3 or higher. Please remove

unsupported architectures from CUDA_ARCH_BIN option.”

Actually, the only Maxwell architecture qualified cards are the Jetsons.

I have been able to build for my GTX960 by cloning latest opencv (instead of wget ) and setting CUDA_ARCH_BIN=5.2.

But the tests results are not impressive:

python3 ssd_object_detection.py --prototxt MobileNetSSD_deploy.prototxt --model MobileNetSSD_deploy.caffemodel --input guitar.mp4 --output output.avi --display 0 --use-gpu 1

[INFO] setting preferable backend and target to CUDA…

[INFO] accessing video stream…

[INFO] elasped time: 22.20

[INFO] approx. FPS: 11.13

compared to CPU on 8 cores I7:

python3 ssd_object_detection.py --prototxt MobileNetSSD_deploy.prototxt --model MobileNetSSD_deploy.caffemodel --input guitar.mp4 --output output.avi --display 0

[INFO] accessing video stream…

[INFO] elasped time: 8.75

[INFO] approx. FPS: 28.23

Anyway, thanks for this great tutorial 😉

Thanks Patrick. I would recommend you test your GPU inference speed using next week’s tutorial on using OpenCV’s “dnn” module with YOLO and Mask R-CNN. Those models should show substantially faster speed on your GPU.

Nice tutorial

Thanks, I’m glad you enjoyed it!

Hi Adrian, I was able to install everything without any errors on my Jetson Nano, but my results are definitely not using the GPU. I got the following results when running with and without the GPU:

$ python ssd_object_detection.py --prototxt MobileNetSSD_deploy.prototxt --model MobileNetSSD_deploy.caffemodel --input guitar.mp4 --output output.avi --display 0 --use-gpu 1

[INFO] setting preferable backend and target to CUDA…

[INFO] accessing video stream…

[INFO] elasped time: 121.98

[INFO] approx. FPS: 2.02

$ python ssd_object_detection.py --prototxt MobileNetSSD_deploy.prototxt --model MobileNetSSD_deploy.caffemodel --input guitar.mp4 --output output.avi --display 0

[INFO] accessing video stream…

[INFO] elasped time: 63.43

[INFO] approx. FPS: 3.89

Any idea where I went wrong…. As I said I didn’t have any errors.

Thanks for a great site !

I thought it might have something to do with CUDA_ARCH_BIN, so I rechecked the value on the CUDA GPUs page. It was the same value I used originally: 5.3.

Hey Werner — this result looks correct based on what I’ve seen other PyImageSearch readers reporting for the Jetson Nano (refer to the other comments on this post).

And I got the results below:

1. Jetson AGX: 32.1 FPS with full fan speed

2. RTX 2080 + AMD 3800X: 92 FPS

3. Jetson TX1: 2.3 FPS

Thank you for sharing your results, Jeff!

Thanks for sharing. Great tutorial!

Thanks Firas!

Dear Adrian, thanks for this great tutorial! I took my Jetson Nano development kit and installed everything accordingly, with no errors encountered. Now when I run ssd_object_detection.py I get 3.63 FPS (CPU) and 1.98 FPS (--use-gpu 1). What could be wrong here? Did I miss something?

Refer to the other comments on this tutorial as many of them have addressed inference speeds on the Nano.

I have run some more tests: if I use a YOLO3 model, I immediately get approx. 20 FPS (after adding the instructions to use the GPU in the Python code). For me, this is fine now, as I will use YOLO as the detector. However, it would be great to know why it’s not working with the source code you provided in this great post.

Maybe there is something small to be changed… any idea?

It’s unfortunately hard to say but it’s discussed in more detail in this week’s post.

Strange: I got the reverse with my CPU being faster than my GPU.

--use-gpu 1

elapsed time: 14.99

approx. FPS: 16.48

no GPU

elapsed time: 7.42

approx FPS: 33.29

I know it was trying to use the GPU because of the message:

setting preferable backend and target to CUDA…

What model of GPU were you using?

Titan Xp – compute capability 6.1. However I discovered I was using an older version of cuDNN (v7.3.1). I will update to v7.6.4, rebuild and try again.

Almost the same thing happens to me. I have a GTX 1070 Ti running on Ubuntu 18.04.

Hey Gabriel — see my reply to Vadim in this thread.

I got a similar result using a GeForce GTX 1080 Ti

CPU E31230 @ 3.20GHz

--use-gpu 1

[INFO] elasped time: 19.64

[INFO] approx. FPS: 12.57

no GPU

[INFO] elasped time: 12.19

[INFO] approx. FPS: 20.26

See this thread with Andrew Baker which addresses the issue.

I tried reinstalling CUDA and cuDNN to no avail. I rebuilt OpenCV. I have the same result, with the CPU version running faster than the GPU.

Versions:

Ubuntu 18.04.4 LTS

Python 3.6.9

CUDA v10.0.130

cuDNN v7.6.4

GPU Titan Xp compute capability 6.1

NVIDIA Driver 440.48.02

CPU Intel i7-7800X 3.5GHz x 12

OpenCV v4.2.0

Output:

[INFO] use_gpu=True

[INFO] setting preferable backend and target to CUDA…

[INFO] accessing video stream…

[INFO] elasped time: 15.26

[INFO] approx. FPS: 16.18

[INFO] use_gpu=False

[INFO] accessing video stream…

[INFO] elasped time: 7.37

[INFO] approx. FPS: 33.52

I’ll see what happens using next week’s tutorial.

Take a look at this reply from Yashas who implemented the OpenCV + dnn + NVIDIA GPU support.

According to Yashas the MobileNet SSD could perform poorly as cuDNN does not have optimized support for depthwise convolutions on all GPU devices.

Thanks to your tutorial, I got my Jetson working with the dnn module.

Almost identical results on a clean Ubuntu 19.10 install using:

Intel(R) Core(TM) i5-9400F CPU @ 2.90GHz

16 GB RAM

GTX 1050Ti

Enjoyed setting it up though – great tutorials!

Hey Adrian, thank you for this tutorial.

However, I have a problem when I try to run the cmake build of OpenCV. It cannot find my NVIDIA CUDA installation.

The output looks like this:

-- NVIDIA CUDA: NO

--

-- cuDNN: NO

In fact, I can detect my NVIDIA GPU using the nvidia-smi command.

It also cannot find numpy or the python3 interpreter.

Can you please help me? Thank you for your attention.

I would double and triple-check your “cmake” command. It sounds like your CUDA_ARCH_BIN version may be set incorrectly.

Hello, thank you for the great tutorial. I followed it and managed to install OpenCV on a Jetson Nano. I set CUDA_ARCH_BIN=5.3 as per the information on the NVIDIA web page. In the installation report it was correctly accepted: NVIDIA GPU arch: 53.

However, when I run the test object detection, I get a slower result with the GPU than without it.

[INFO] setting preferable backend and target to CUDA…

[INFO] accessing video stream…

[INFO] elasped time: 126.79

[INFO] approx. FPS: 1.95

or…

[INFO] accessing video stream…

[INFO] elasped time: 67.02

[INFO] approx. FPS: 3.69

Please, let me know what might be going wrong. Thank you!

Nothing is wrong. See my comment here.

Hi Adrian,

I’m using a Tesla K80. According to the website, its NVIDIA GPU architecture version is 3.7, so I set CUDA_ARCH_BIN=3.7. But after I run the “cmake” command, I get this error:

“CUDA backend for DNN module requires CC 5.3 or higher. Please remove unsupported architectures from CUDA_ARCH_BIN option.”

Does this mean the OpenCV DNN module doesn’t support the Tesla K80 GPU?

Wonderful tutorial. Using OpenCV’s dnn module is fantastic.

But I tried to compile OpenCV on an Azure server with Ubuntu 18.04 and an NVIDIA Tesla K80, and I got an error: the OpenCV 4.2 dnn module only compiles for GPUs with a compute capability (CUDA_ARCH_BIN) of 5.3 or higher, and my card has 3.7. I cannot compile this 🙁

The K80 is not supported by OpenCV’s “dnn” module.
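
The CMake error quoted above boils down to a version comparison, which can be sketched as follows. The 5.3 minimum is taken from that error message for OpenCV 4.2.0; the helper names are hypothetical, and the threshold may differ in other OpenCV versions (an earlier commenter reports building for 5.2 from the latest source).

```python
# Minimum compute capability quoted in the CMake error above (OpenCV 4.2.0).
MIN_DNN_CUDA_CC = (5, 3)


def parse_cc(value: str) -> tuple:
    """Turn a compute capability string such as '6.1' into (6, 1)."""
    major, _, minor = value.strip().partition(".")
    return int(major), int(minor or 0)


def meets_dnn_cuda_minimum(cc: str, minimum: tuple = MIN_DNN_CUDA_CC) -> bool:
    """Compare as (major, minor) tuples so that '10.2' sorts above '5.3'."""
    return parse_cc(cc) >= minimum


# Cards and values mentioned by commenters in this thread.
for name, cc in [("Tesla K80", "3.7"), ("GTX 960", "5.2"),
                 ("Jetson Nano", "5.3"), ("GTX 1080", "6.1")]:
    print(name, cc, meets_dnn_cuda_minimum(cc))
```

Comparing tuples rather than floats or raw strings avoids ordering mistakes once the major version reaches two digits.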

Hi Adrian,

Very timely tutorial for me. I used OpenCV on a Jetson TX2 development board recently. Unfortunately, I don’t have the stats now, but I will try to post them later once I get a chance to run inference again.

In the meantime, I want to ask you why it is important to build OpenCV within the Python virtual environment, as you mentioned under Step 6.

“To configure the build, start by making sure you are inside the Python virtual environment you are using to compile OpenCV with NVIDIA GPU support:”

Python virtual environments are a best practice. I would suggest you read the “Step #4: Configure Python virtual environment” section of this post.

Cool post. Is it possible to build a Docker image on top of nvidia-docker to make it easier to test out the OpenCV dnn module on GPUs?

Hi Adrian, I have a GTX 1660 Ti, which is not listed on NVIDIA’s web page, but I found on the NVIDIA forum that it is supported by CUDA. Anyway, I installed CUDA 10.2 on Ubuntu 18.04. I tried to run cmake for OpenCV but, as you mentioned, I got stuck finding the correct CUDA_ARCH_BIN, so I gave it 10.2, and cmake returns:

-- NVIDIA CUDA: YES (ver 10.2, CUFFT CUBLAS FAST_MATH)

-- NVIDIA GPU arch: 102

-- NVIDIA PTX archs:

--

-- cuDNN: NO

No matter what I set CUDA_ARCH_BIN to (I tried values from 6.1 to 10.2), every number gives NVIDIA CUDA: YES but cuDNN: NO.

Keep in mind that CUDA and cuDNN are two different things. Have you verified that cuDNN has been installed on your system?

Hi Adrian,

Thanks for this great tutorial. I have done the OpenCV setup as per this post and here are the system details:

Ubuntu Desktop: 18.04 LTS

GPU: GeForce GTX 1050 Ti

Nvidia Driver: 440.59

Cuda: ver 10.2, CUFFT CUBLAS FAST_MATH

CuDNN: ver 7.6.5

NVIDIA GPU arch: 6.1

OpenCV: ver 4.2.0

When I tested the example with SSD, I found that the GPU result was worse than the CPU result. I used a 5-second video clip:

SSD using CPU:

[INFO] elasped time: 4.85

[INFO] approx. FPS: 25.98

SSD using GPU:

[INFO] elasped time: 7.76

[INFO] approx. FPS: 16.24

I also tried other, longer videos, but the CPU results are still better than the GPU results for SSD. Any idea why this is happening?

Refer to the blog post comments as that question has been addressed multiple times. Thanks!

Hi Adrian, it looks like if you leave out `CUDA_ARCH_BIN`, OpenCV’s `OpenCVDetectCUDA` cmake rules (https://github.com/opencv/opencv/blob/master/cmake/OpenCVDetectCUDA.cmake) are correctly able to infer the right value. In my case, it correctly detected `6.1`. I wonder if it’s really necessary to specify this during the build? Did you find issues in the auto detection?

I ran into issues with the auto detection (could have been user error) and wanted to document how you determine your CUDA_ARCH_BIN as I figured other readers would run into issues themselves.

Hi Adrian, I hope this comment reaches you. I previously installed OpenCV 4 on my Ubuntu 18.04 machine using this tutorial: https://www.pyimagesearch.com/2018/08/15/how-to-install-opencv-4-on-ubuntu/. FYI, I installed it after installing CUDA and cuDNN. Now I want to use OpenCV’s DNN module on the GPU, so I need a CUDA-enabled OpenCV build. When I check my build using cv2.getBuildInformation(), it shows that CUDA is unavailable. Knowing this, I came across this new post, deleted my build directory, and rebuilt OpenCV using the configuration you provided. But when I check the build again, CUDA is still unavailable. Do I need to reinstall OpenCV?

I would focus on getting “CUDA” to show up under your list of packages to be built. It’s likely that your CUDA configurations are incorrect. Double-check them.
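
One way to check which build Python is actually importing is to pull the CUDA and cuDNN lines out of the text returned by cv2.getBuildInformation(). The sketch below assumes those lines look like the “NVIDIA CUDA: YES (...)” and “cuDNN: NO” lines quoted by other commenters; the helper name cuda_build_status is hypothetical, and a value of None only means no such line was found.

```python
import re


def cuda_build_status(build_info: str) -> dict:
    """Extract the 'NVIDIA CUDA' and 'cuDNN' lines from build information text."""
    status = {"NVIDIA CUDA": None, "cuDNN": None}
    for key in status:
        # Allow leading whitespace or a '--' prefix before the key.
        pattern = rf"^\W*{re.escape(key)}:\s*(.+?)\s*$"
        match = re.search(pattern, build_info, re.MULTILINE)
        if match:
            status[key] = match.group(1)
    return status


if __name__ == "__main__":
    try:
        import cv2  # only needed for the live check
    except ImportError:
        print("cv2 is not importable in this environment")
    else:
        # cv2.__file__ shows which installation was picked up.
        print("OpenCV", cv2.__version__, "loaded from", cv2.__file__)
        print(cuda_build_status(cv2.getBuildInformation()))
```

If the path printed for cv2.__file__ is not the freshly compiled build, Python is likely still importing an older, CPU-only installation.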

I have built my system for a second time with the same error when I run the source code:

“setUpNet DNN module was not built with CUDA backend; switching to CPU”

I can not seem to find out what is causing this error, has anyone else run into this? I have a GeForce GTX 1080 and set -D CUDA_ARCH_BIN=6.1 in my cmake config

Same error. Did you find a solution?

I got the same error. Then I changed my OS from Ubuntu 16.04 to Ubuntu 18.04, and it turned out to be OK!

GTX 1050 mobile + cuda arch 6.1

[INFO] setting preferable backend and target to CUDA…

[INFO] accessing video stream…

[INFO] elasped time: 17.08

[INFO] approx. FPS: 14.46

When not using the GPU, FPS is around 11.XX.

There seems to be a conflict between OpenCV 4.2.0 and libopencv-dev.

After running the guitar.mp4 example with the GPU, I installed libopencv-dev using sudo apt install libopencv-dev. And guess what, the “setUpNet DNN module was not built with CUDA backend” error came back!

Hey Adrian,

Big Fan of your tutorials!

Will this installation work if I want to compile C/C++ code with OpenCV?

Do I need to do something different?

Thanks 🙂

Yes, this install method will work if you want to compile C/C++ code.

I tried to install this on my Jetson Nano, but it says the version is 4.1.0 and “module 'cv2.dnn' has no attribute 'DNN_BACKEND_CUDA'”. Does that mean there is no AArch64 version out there?

That means that “cmake” could not find your GPU architecture when compiling. Double-check your cmake command, including your CUDA architecture version.
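
Since the tutorial introduces CUDA support with OpenCV 4.2, an import that reports 4.1.0 would be expected to lack the CUDA constants entirely. A defensive check along these lines can make that visible before a script fails. This is a minimal sketch: cuda_backend_available and configure_net are hypothetical helper names, while setPreferableBackend and setPreferableTarget are the existing methods on a cv2.dnn network.

```python
from types import SimpleNamespace


def cuda_backend_available(dnn_module) -> bool:
    """True if a cv2.dnn-like module exposes the CUDA backend and target constants."""
    return (hasattr(dnn_module, "DNN_BACKEND_CUDA")
            and hasattr(dnn_module, "DNN_TARGET_CUDA"))


def configure_net(net, dnn_module) -> str:
    """Prefer CUDA when the constants exist; otherwise leave the net on its defaults."""
    if cuda_backend_available(dnn_module):
        net.setPreferableBackend(dnn_module.DNN_BACKEND_CUDA)
        net.setPreferableTarget(dnn_module.DNN_TARGET_CUDA)
        return "cuda"
    return "cpu"


# Stand-in for an older build that lacks the CUDA constants (values are placeholders).
old_dnn = SimpleNamespace(DNN_BACKEND_OPENCV=3, DNN_TARGET_CPU=0)
print(cuda_backend_available(old_dnn))
```

In a real script the call would be configure_net(net, cv2.dnn). Note that the constants being present does not prove the build has CUDA enabled: as other commenters report, such a build falls back with the “DNN module was not built with CUDA backend; switching to CPU” message.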

Adrian Rosebrock,

First of all, thank you for all the tutorials and guidance you are providing!

I’m following the tutorial to install OpenCV on a Jetson Nano, and everything goes well until Step 7, Compile OpenCV with “dnn” GPU support. Once it starts compiling, at some point it just freezes and stops responding. Every time I restart the Nano and run the command make -j4 again, it compiles a bit further but then freezes again.

I hope you can help me.

Thank you again

I recommend you follow my dedicated tutorial on configuring the Jetson Nano.

Hi Adrian. Thank you for sharing good materials. Your instruction really helped me a lot, to start my very first computer vision project. (I’m new to computer vision, I only handle signal, time-series data)

I’m trying to build OpenCV in an Anaconda environment inside a Docker container.

But now I’m stuck at Step 8, sym-linking OpenCV into the conda environment.

My python_executable dir is /root/anaconda/envs/yolo/bin/python3. Could you tell me the correct install path in my case?

Also, I can’t find the python3 and numpy versions or the install path in my output like in yours. Mine shows the following (no numpy, no install path!):

– python(for build) : /root/anaconda3/envs/yolo/bin/python3

– install to : usr/local

BTW, I checked that output shows the correct CUDA & cuDNN version. I think it’s weird.

Best Regards,

Thanks, I’m glad the tutorial has helped you!

I’m not an Anaconda user and I don’t have Anaconda installed on my system so I unfortunately cannot provide any recommendations there. I’m sure another PyImageSearch reader will be able to assist you though!

Adrian, I’ve got to say, you always have awesome content. I have referenced your posts a number of times in my projects already. Thanks for making the world a better and more collaborative place!

Thank you so much for the kind words, Gavin!

Hello, thanks for putting together such a detailed tutorial. Here are a couple of suggestions that may further streamline the process.

1. The lengthy Step 5 of locating the “Compute Capability” in order to set CUDA_ARCH_BIN properly can be simplified down to this:

“Look up your GPU at https://en.wikipedia.org/wiki/CUDA and grab the Compute Capability from the leftmost column of the table.”

2. Does compilation of OpenCV (Steps 3, 5, 6, and 7) depend on “virtual env”? Not really, right? OpenCV only needs to find numpy.

Step 4 is a lengthy distraction from this simple need, *and* it forces users to jump through many hoops to install virtual env.

Not everyone needs virtual env, nor does everyone need numpy to be inside a virtual env. Not to mention, numpy is so popular in data analytics that many have already installed it site-wide.

Perhaps swap the position of step 3 and 4, and make it as simple as

“Step 3. Install numpy the python library.

If you haven’t installed python packages before, or are interested in using virtual env, read on. Otherwise, skip to Step 4”.

1. Thanks for the tip on finding the CUDA_ARCH_BIN!

2. Python virtual environments are a best practice in the Python community and are highly recommended. If you choose not to use them, that’s perfectly fine, just realize that I can’t provide as much help/support.
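
For readers who build on several machines, the lookup from point 1 can be kept as a small table. The sketch below contains only the GPU and compute capability pairs that commenters reported in this thread, so it is not exhaustive; cuda_arch_bin_flag is a hypothetical helper name.

```python
# Compute capabilities as reported by commenters in this thread (not an exhaustive table).
COMPUTE_CAPABILITY = {
    "tesla k80": "3.7",
    "gtx 960": "5.2",
    "jetson nano": "5.3",
    "gtx 1050 ti": "6.1",
    "gtx 1080": "6.1",
    "titan xp": "6.1",
}


def cuda_arch_bin_flag(gpu_name: str) -> str:
    """Return the cmake flag for a known GPU, or raise if it must be looked up."""
    key = " ".join(gpu_name.lower().split())  # normalize case and spacing
    if key not in COMPUTE_CAPABILITY:
        raise KeyError(f"{gpu_name!r} is not in the table; look it up before building")
    return f"-D CUDA_ARCH_BIN={COMPUTE_CAPABILITY[key]}"


print(cuda_arch_bin_flag("GTX 1080"))
```

The value is the GPU's compute capability, not the CUDA toolkit version, which is an easy pair to mix up when filling in the flag.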

-D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib/modules \

might be more flexible if the path is “../../opencv_contrib/modules” \

Not everyone would download/unzip them straight from home directory.

Same for the python executable path:

-D PYTHON_EXECUTABLE=~/.virtualenvs/opencv_cuda/bin/python

What if the user doesn’t use virtualenv and prefers to use the system-wide Python? Perhaps only advise setting this variable if they want to use Python from “the virtual env setup step above”.
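
The suggestion above, making the contrib path configurable and the Python executable optional, can be sketched as a small helper that assembles the cmake arguments. The function name cmake_args is hypothetical. OPENCV_EXTRA_MODULES_PATH, PYTHON_EXECUTABLE, and CUDA_ARCH_BIN appear in this thread; WITH_CUDA, WITH_CUDNN, and OPENCV_DNN_CUDA are standard OpenCV CMake options that are not quoted in the comment, so treat the full flag set as an assumption and compare it with the tutorial's own command.

```python
import os
import shlex


def cmake_args(contrib_modules, cuda_arch_bin, python_executable=None):
    """Assemble a cmake argument list; paths may be relative or start with '~'."""
    args = [
        "cmake",
        "-D", "WITH_CUDA=ON",
        "-D", "WITH_CUDNN=ON",
        "-D", "OPENCV_DNN_CUDA=ON",
        "-D", f"CUDA_ARCH_BIN={cuda_arch_bin}",
        "-D", f"OPENCV_EXTRA_MODULES_PATH={os.path.expanduser(contrib_modules)}",
    ]
    # Only pin the interpreter when the caller asks for a specific one.
    if python_executable:
        args += ["-D", f"PYTHON_EXECUTABLE={os.path.expanduser(python_executable)}"]
    args.append("..")  # source directory, assuming cmake runs from opencv/build
    return args


# Relative contrib path, system-wide Python (no PYTHON_EXECUTABLE flag emitted).
print(" ".join(shlex.quote(a) for a in cmake_args("../../opencv_contrib/modules", "6.1")))
```

Passing python_executable="~/.virtualenvs/opencv_cuda/bin/python" reproduces the virtual environment setup, while omitting it leaves interpreter detection to cmake.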

Very informative deep learning tutorial, Adrian. Your blog posts are very informative and easy to understand and implement. Thanks for posting such tutorials. You are an excellent teacher and blogger.