Table of Contents

- Computer Graphics and Deep Learning with NeRF using TensorFlow and Keras: Part 2

- Configuring Your Development Environment

- Having Problems Configuring Your Development Environment?

- Project Structure

- Introduction to NeRF

- Input Data Pipeline

- NeRF Multi-Layer Perceptron

- Volume Rendering

- Photometric Loss

- Breather

- Enhancing NeRF

- Credits

- Summary

NeRF stands out for the number of doors it opens in computer graphics and deep learning. Its applications range from medical imaging, 3D scene reconstruction, and animation to scene relighting and depth estimation.

In last week’s tutorial, we familiarized ourselves with the prerequisites of NeRF and explored the dataset that will be used. Now, it is best to remind ourselves of the initial problem statement.

What if there was a way to capture the entire 3D scene just from a sparse set of 2D pictures?

In this tutorial, we will focus on the algorithm that NeRF takes to capture the 3D scene from the sparse set of images.

This lesson is part 2 of a 3-part series on Computer Graphics and Deep Learning with NeRF using TensorFlow and Keras:

- Computer Graphics and Deep Learning with NeRF using TensorFlow and Keras: Part 1 (last week’s tutorial)

- Computer Graphics and Deep Learning with NeRF using TensorFlow and Keras: Part 2 (this week’s tutorial)

- Computer Graphics and Deep Learning with NeRF using TensorFlow and Keras: Part 3 (next week’s tutorial)

To learn about Neural Radiance Fields or NeRF, just keep reading.

Computer Graphics and Deep Learning with NeRF using TensorFlow and Keras: Part 2

In this tutorial, we dive straight into the concepts of NeRF. We have divided this tutorial into the following sections:

- Introduction to NeRF: overview of NeRF

- Input Data Pipeline: the tf.data input data pipeline

  - Utility and images: building the tf.data pipeline for images

  - Generate rays: building the tf.data pipeline for rays

  - Sample points: sampling points from the rays

- NeRF Multi-Layer Perceptron: the NeRF Multi-Layer Perceptron (MLP) architecture

- Volume Rendering: understanding the volume rendering process

- Photometric Loss: understanding the loss used in NeRF

- Enhancing NeRF: techniques to enhance NeRF

  - Positional encoding: understanding positional encoding

  - Hierarchical sampling: understanding hierarchical sampling

By the end of this tutorial, we will be able to understand the concepts proposed in NeRF.

Configuring Your Development Environment

To follow this guide, you need to have the TensorFlow library installed on your system.

Luckily, TensorFlow is pip-installable:

$ pip install tensorflow

Having Problems Configuring Your Development Environment?

All that said, are you:

- Short on time?

- Learning on your employer’s administratively locked system?

- Wanting to skip the hassle of fighting with the command line, package managers, and virtual environments?

- Ready to run the code right now on your Windows, macOS, or Linux system?

Then join PyImageSearch University today!

Gain access to Jupyter Notebooks for this tutorial and other PyImageSearch guides that are pre-configured to run on Google Colab’s ecosystem right in your web browser! No installation required.

And best of all, these Jupyter Notebooks will run on Windows, macOS, and Linux!

Project Structure

We first need to review our project directory structure.

Start by accessing the “Downloads” section of this tutorial to retrieve the source code and example images.

Let’s take a look at the directory structure:

    $ tree --dirsfirst
    .
    ├── dataset
    │   ├── test
    │   │   ├── r_0_depth_0000.png
    │   │   ├── r_0_normal_0000.png
    │   │   ├── r_0.png
    │   │   ├── ..
    │   │   └── ..
    │   ├── train
    │   │   ├── r_0.png
    │   │   ├── r_10.png
    │   │   ├── ..
    │   │   └── ..
    │   ├── val
    │   │   ├── r_0.png
    │   │   ├── r_10.png
    │   │   ├── ..
    │   │   └── ..
    │   ├── transforms_test.json
    │   ├── transforms_train.json
    │   └── transforms_val.json
    ├── pyimagesearch
    │   ├── config.py
    │   ├── data.py
    │   ├── encoder.py
    │   ├── __init__.py
    │   ├── nerf.py
    │   ├── nerf_trainer.py
    │   ├── train_monitor.py
    │   └── utils.py
    ├── inference.py
    └── train.py

The parent directory has two Python scripts and two folders.

- The dataset folder contains three subfolders: train, test, and val for the train, test, and validation images.

- The pyimagesearch folder contains all of the Python scripts we will be using for training.

- Finally, we have the two driver scripts: train.py and inference.py. We will be looking at training and inference in next week’s tutorial.

Note: In the interest of time, we have divided the implementation of NeRF into two parts. This post introduces the concepts, while next week’s post will cover the training and inference scripts.

Introduction to NeRF

Let’s talk about the premise of the paper. You have images of a particular scene from a few specific viewpoints. Now you want to generate an image of the scene from an entirely new view. This problem falls under novel view synthesis, as shown in Figure 2.

The immediate solution to novel view synthesis that comes to our mind is to use a Generative Adversarial Network (GAN) on the training dataset. With GANs, we are constraining ourselves to the 2D space of images.

Mildenhall et al. (2020), on the other hand, ask a simple question.

Why not capture the entire 3D scenery from the images itself?

Let’s take a moment and try to absorb this idea.

We are now looking at a transformed problem statement. From novel view synthesis, we have moved to 3D scene capture from a sparse set of 2D images.

This new problem statement will also serve as a solution to the novel view synthesis problem. How difficult is it to generate a novel view if we have the 3D scenery at our hands?

Note that NeRF is not the first to tackle this problem. Its predecessors have used various methods, including Convolutional Neural Networks (CNN) and gradient-based mesh optimization. However, according to the paper, these methods could not scale to higher resolutions because of their space and time complexity. NeRF instead aims at optimizing an underlying continuous volumetric scene function.

Do not worry if you don’t get all of these terms at first glance. The rest of the blog is dedicated to breaking each of these topics down in the finest details and explaining them one by one.

We begin with a sparse set of images and their corresponding camera metadata (orientation and position). Next, we want to achieve a 3D representation of the entire scene, as shown in Figure 3.

The steps for NeRF can be visualized in the following figures:

- Generate Rays: In this step, we march rays through each pixel of the image. The rays (Ray A and Ray B) are the red lines (Figure 4) that intersect the image and traverse through the 3D box (scene).

- Sample points: In this step, we sample points on the rays, as shown in Figure 5. Note that these points lie on the rays, making them 3D points inside the box.

Each point has a unique position $(x, y, z)$ and shares the viewing direction of the ray it lies on.

- Deep Learning: We pass these points into an MLP (Figure 7) and predict the color and density corresponding to that point.

- Volume Rendering: Let’s consider a single ray (Ray A here) and send all the sample points to the MLP to get the corresponding color and density, as shown in Figure 8. After we have the color and density of each point, we can apply classical volume rendering (defined in a later section) to predict the color of the image pixel (pixel P here) through which the ray passes.

- Photometric Loss: The difference between the predicted color of the pixel (shown in Figure 9) and the actual color of the pixel makes the photometric loss. This eventually allows us to perform backpropagation on the MLP and minimize the loss.

Input Data Pipeline

At this point, we have a bird’s eye view of NeRF. However, before describing the algorithm further, we first need to define an input data pipeline.

We know from the previous week’s tutorial that our dataset contains images and the corresponding camera orientations. So now, we need to build a data pipeline that produces images and the corresponding rays.

In this section, we will build this data pipeline step by step using the tf.data API. tf.data ensures an efficient way to build and use the dataset. If you want a primer on tf.data, you can refer to this tutorial.

The entire data pipeline is written in the pyimagesearch/data.py file. So, let’s open the file and start digging!

Utility and Images

    # import the necessary packages
    from tensorflow.io import read_file
    from tensorflow.image import decode_jpeg
    from tensorflow.image import convert_image_dtype
    from tensorflow.image import resize
    from tensorflow import reshape
    import tensorflow as tf
    import json

We begin by importing the necessary packages on Lines 2-8:

- tensorflow to build the data pipeline

- json for reading and working with json data

    def read_json(jsonPath):
        # open the json file
        with open(jsonPath, "r") as fp:
            # read the json data
            data = json.load(fp)

        # return the data
        return data

On Lines 10-17, we define the read_json function. This function takes the path to the json file (jsonPath) and returns the parsed data.

We open the json file with the open function on Line 12. Then, with the file pointer in hand, we read the contents and parse it with the json.load function on Line 14. Finally, Line 17 returns the parsed json data.

    def get_image_c2w(jsonData, datasetPath):
        # define a list to store the image paths
        imagePaths = []

        # define a list to store the camera2world matrices
        c2ws = []

        # iterate over each frame of the data
        for frame in jsonData["frames"]:
            # grab the image file name
            imagePath = frame["file_path"]
            imagePath = imagePath.replace(".", datasetPath)
            imagePaths.append(f"{imagePath}.png")

            # grab the camera2world matrix
            c2ws.append(frame["transform_matrix"])

        # return the image file names and the camera2world matrices
        return (imagePaths, c2ws)

On Lines 19-37, we define the get_image_c2w function. This function takes the parsed json data (jsonData) and the path to the dataset (datasetPath) and returns the paths to the images (imagePaths) and their corresponding camera-to-world matrices (c2ws).

On Lines 21-24, we define two empty lists: imagePaths and c2ws. On Lines 27-34, we iterate over the parsed json data and add the image paths and camera-to-world matrices to the empty lists. After iterating over the entire data, we return both lists (Line 37).
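To make the data flow concrete, here is a minimal sketch that writes a toy metadata file, reads it back, and extracts the paths and matrices in the same way. The file contents, the `dataset` path, and the helper name `collect_paths_and_poses` are made up for illustration; the helper mirrors get_image_c2w but, as a small defensive variation, replaces only the first `.` in each path.

```python
import json
import os
import tempfile


def collect_paths_and_poses(json_data, dataset_path):
    # hypothetical stand-in that mirrors get_image_c2w
    image_paths, c2ws = [], []
    for frame in json_data["frames"]:
        # "./train/r_0" becomes "dataset/train/r_0"; only the leading "." is replaced
        path = frame["file_path"].replace(".", dataset_path, 1)
        image_paths.append(f"{path}.png")
        c2ws.append(frame["transform_matrix"])
    return image_paths, c2ws


# toy metadata: a single frame with an identity camera-to-world matrix
identity = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
toy = {"frames": [{"file_path": "./train/r_0", "transform_matrix": identity}]}

with tempfile.TemporaryDirectory() as tmp:
    json_path = os.path.join(tmp, "transforms_train.json")
    # write the toy file, then parse it the same way read_json does
    with open(json_path, "w") as fp:
        json.dump(toy, fp)
    with open(json_path, "r") as fp:
        data = json.load(fp)

image_paths, c2ws = collect_paths_and_poses(data, "dataset")
print(image_paths)
```

Each entry of `c2ws` is a nested 4x4 list, which is what the ray-generation step later slices into a rotation and a translation.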

Working with tf.data.Dataset instances, we will need a way to transform our dataset while feeding it to the model. To efficiently do this, we use the map functionality. The map function takes in the tf.data.Dataset instance and a function that is applied to each element of the dataset.

The later part of the pyimagesearch/data.py defines functions used with the map function to transform the dataset.

    class GetImages():
        def __init__(self, imageWidth, imageHeight):
            # define the image width and height
            self.imageWidth = imageWidth
            self.imageHeight = imageHeight

        def __call__(self, imagePath):
            # read the image file
            image = read_file(imagePath)

            # decode the image string
            image = decode_jpeg(image, 3)

            # convert the image dtype from uint8 to float32
            image = convert_image_dtype(image, dtype=tf.float32)

            # resize the image to the height and width in config
            # (tf.image.resize expects the size as (height, width))
            image = resize(image, (self.imageHeight, self.imageWidth))
            image = reshape(image, (self.imageHeight, self.imageWidth, 3))

            # return the image
            return image

Before moving ahead, let’s discuss why we chose to build a class with a __call__ method instead of building a function that could be applied with the map function.

The problem is that the function passed to the map function cannot accept anything other than the element of the dataset. This is an imposed constraint which we need to bypass.

To overcome this problem, we have created a class that can hold some properties (here imageWidth and imageHeight) used during the function call.
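The same pattern can be sketched with Python's built-in map, which, like the dataset map, passes only the element to the function. The `Scale` class below is a hypothetical example and is not part of the tutorial's code.

```python
class Scale:
    def __init__(self, factor):
        # extra configuration lives on the instance, not in the call signature
        self.factor = factor

    def __call__(self, element):
        # map only ever passes the element itself
        return element * self.factor


# the instance behaves like a one-argument function that remembers its factor
double = Scale(factor=2)
result = list(map(double, [1, 2, 3]))
print(result)  # [2, 4, 6]
```

The object carries its configuration from construction time, so the call signature stays at a single argument.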

On Lines 39-60, we build the GetImages class with custom __init__ and __call__ methods.

- __init__: we use this method to initialize the parameters imageWidth and imageHeight (Lines 40-43).

- __call__: this method makes the object callable. We use it to read the image from imagePath (Line 47) and decode it into a usable image tensor (Line 50). We then convert the image from uint8 to float32, resize it, and reshape it (Lines 53-57).

Generate Rays

A ray in computer graphics can be parameterized as

$$\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$$

where

- $\mathbf{r}(t)$ is the ray

- $\mathbf{o}$ is the origin of the ray

- $\mathbf{d}$ is the unit vector for the direction of the ray

- $t$ is the parameter (e.g., time)

To build the ray equation, we need the origin and the direction. In the context of NeRF, we generate rays by taking the origin of the ray as the pixel position of the image plane and the direction as the straight line joining the pixel and the camera aperture. This is illustrated in Figure 10.

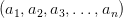

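We can easily devise the pixel positions of the 2D image with respect to the camera coordinate frame using the following equations.

$$x_c = \frac{u - W/2}{f}, \qquad y_c = \frac{v - H/2}{f}$$

Here $(u, v)$ is the pixel index, $W$ and $H$ are the image width and height, and $f$ is the focal length. These are the expressions computed as xCamera and yCamera in the code later in this section.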

It is easy to locate the origin of the pixel points but a little challenging to get the direction of the rays. From the previous section, we have

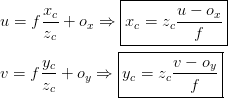

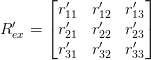

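The camera-to-world matrix from the dataset is the inverse of the extrinsic (world-to-camera) matrix.

To define the direction vector, we do not need the entire camera-to-world matrix; instead, we use its upper-left $3 \times 3$ block, which is the rotation matrix.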

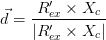

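With the rotation matrix $R$, we can get the unit direction vector by the following equation.

$$\mathbf{d} = \frac{R\,\mathbf{x}_c}{\lVert R\,\mathbf{x}_c \rVert}$$

Here $\mathbf{x}_c = (x_c, -y_c, -1)^\top$ is the camera-frame vector through the pixel, as built in the code that follows.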

The difficult calculations are now over. For the easy part, the rays’ origin will be the translation vector of the camera-to-world matrix.

Let’s see how we can translate this to code. We will be continuing with the pyimagesearch/data.py file.

    class GetRays:
        def __init__(self, focalLength, imageWidth, imageHeight, near,
            far, nC):
            # define the focal length, image width, and image height
            self.focalLength = focalLength
            self.imageWidth = imageWidth
            self.imageHeight = imageHeight

            # define the near and far bounding values
            self.near = near
            self.far = far

            # define the number of samples for coarse model
            self.nC = nC

On Lines 62-75, we create the class GetRays with custom __init__ and __call__ methods.

__init__: we initialize the focalLength, imageWidth, and imageHeight on Lines 66-68, the near and far bounds of the camera viewing field (Lines 71 and 72), and the number of coarse samples nC (Line 75). We will need these to construct the rays to be marched into the scene, as shown in Figure 8.

        def __call__(self, camera2world):
            # create a meshgrid of image dimensions
            (x, y) = tf.meshgrid(
                tf.range(self.imageWidth, dtype=tf.float32),
                tf.range(self.imageHeight, dtype=tf.float32),
                indexing="xy",
            )

            # define the camera coordinates
            xCamera = (x - self.imageWidth * 0.5) / self.focalLength
            yCamera = (y - self.imageHeight * 0.5) / self.focalLength

            # define the camera vector
            xCyCzC = tf.stack([xCamera, -yCamera, -tf.ones_like(x)],
                axis=-1)

            # slice the camera2world matrix to obtain the rotation and
            # translation matrix
            rotation = camera2world[:3, :3]
            translation = camera2world[:3, -1]

__call__: we input the camera2world matrix to this method, which in turn returns

- rayO: the origin points

- rayD: the set of direction points

- tVals: the sampled points

On Lines 79-83, we create a meshgrid of the image dimension. This is the same as the Image Plane shown in Figure 10.

Next, we obtain the camera coordinates (Lines 86 and 87) using the equation derived from our previous blog.

We define a homogeneous representation (Lines 90 and 91) of the camera vector xCyCzC by stacking the camera coordinates.

On Lines 95 and 96, we extract the rotation matrix and the translation vector from the camera-to-world matrix.

            # expand the camera coordinates so that they broadcast
            # against the rotation matrix
            xCyCzC = xCyCzC[..., None, :]

            # get the world coordinates
            xWyWzW = xCyCzC * rotation

            # calculate the direction vector of the ray
            rayD = tf.reduce_sum(xWyWzW, axis=-1)
            rayD = rayD / tf.norm(rayD, axis=-1, keepdims=True)

            # calculate the origin vector of the ray
            rayO = tf.broadcast_to(translation, tf.shape(rayD))

            # get the sample points from the ray
            tVals = tf.linspace(self.near, self.far, self.nC)
            noiseShape = list(rayO.shape[:-1]) + [self.nC]
            noise = (tf.random.uniform(shape=noiseShape) *
                (self.far - self.near) / self.nC)
            tVals = tVals + noise

            # return ray origin, direction, and the sample points
            return (rayO, rayD, tVals)

We then transform the camera coordinates to world coordinates using the rotation matrix (Lines 99-102).

Next, we calculate the direction rayD (Lines 105 and 106) and the origin vector rayO (Line 109).

On Lines 112-116, we sample points from the ray.

Note: We will learn about sampling points on a ray in the following section.

Finally we return rayO, rayD, and tVals on Line 119.
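As a sanity check on the geometry, the ray origins and directions can be reproduced for a tiny image with NumPy. This is a sketch under assumptions, not the tutorial's implementation: the function name `get_rays`, the 4x4 image size, the focal length, and the camera pose are all made-up values, and NumPy stands in for TensorFlow.

```python
import numpy as np


def get_rays(focal_length, width, height, camera2world):
    # pixel grid; with indexing="xy" both arrays have shape (height, width)
    x, y = np.meshgrid(
        np.arange(width, dtype=np.float32),
        np.arange(height, dtype=np.float32),
        indexing="xy",
    )
    # camera-frame coordinates; the camera looks down the -z axis
    x_cam = (x - width * 0.5) / focal_length
    y_cam = (y - height * 0.5) / focal_length
    dirs_cam = np.stack([x_cam, -y_cam, -np.ones_like(x)], axis=-1)

    rotation = camera2world[:3, :3]
    translation = camera2world[:3, -1]

    # rotate every camera-frame vector into the world frame (d = R v)
    ray_d = dirs_cam @ rotation.T
    ray_d = ray_d / np.linalg.norm(ray_d, axis=-1, keepdims=True)
    # every ray starts at the camera position
    ray_o = np.broadcast_to(translation, ray_d.shape)
    return ray_o, ray_d


# toy camera: identity rotation, placed 4 units along +z
c2w = np.eye(4, dtype=np.float32)
c2w[2, 3] = 4.0
ray_o, ray_d = get_rays(focal_length=2.0, width=4, height=4, camera2world=c2w)
print(ray_d[2, 2])
```

With an identity rotation, the ray through the pixel at the optical center points straight along the negative z-axis, and all rays share the camera position as their origin.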

Sample Points

After the generation of rays, we need to draw sample 3D points from the rays. To do this, we suggest two ways.

- Sample points at regular intervals: The name of the method is self-explanatory. Here, we sample points on the ray at regular intervals, as shown in Figure 11.

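The sampling equation is as follows:

$$t_i = t_n + \frac{i - 1}{N}(t_f - t_n), \quad i = 1, \dots, N$$

where $t_n$ and $t_f$ are the nearest and farthest points on the ray and $N$ is the number of samples.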

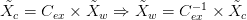

- Sample points randomly: In this method, we add randomness into the process of sampling points. The idea here is that if the sample points come from random positions of the ray, the model will be exposed to new data. This will regularize it to produce better results. The strategy is shown in Figure 12.

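This is demonstrated by the equation below:

$$t_i \sim \mathcal{U}\left[t_n + \frac{i - 1}{N}(t_f - t_n),\; t_n + \frac{i}{N}(t_f - t_n)\right]$$

where $\mathcal{U}$ denotes uniform sampling, $t_n$ and $t_f$ are the nearest and farthest points on the ray, and $N$ is the number of samples.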
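Both strategies can be sketched in a few lines of NumPy. The function name `sample_t_vals` and the near, far, and sample-count values are illustrative assumptions. Note that GetRays above builds its base grid with tf.linspace, which also includes the far bound, so its spacing differs slightly from this sketch.

```python
import numpy as np


def sample_t_vals(near, far, num_samples, rng=None):
    # left edge of each of the num_samples equal-width bins between near and far
    bin_width = (far - near) / num_samples
    edges = near + bin_width * np.arange(num_samples)
    if rng is None:
        # regular sampling: one point at the start of every bin
        return edges
    # random sampling: jitter each point uniformly inside its own bin
    return edges + rng.uniform(size=num_samples) * bin_width


rng = np.random.default_rng(seed=0)
regular = sample_t_vals(2.0, 6.0, 8)
jittered = sample_t_vals(2.0, 6.0, 8, rng=rng)
print(regular)
print(jittered)
```

Because every jittered point stays inside its own bin, the samples remain ordered along the ray while still varying from one training step to the next.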

NeRF Multi-Layer Perceptron

Each sample point is of 5 dimensions. The spatial location of the point is a 3D vector ($x, y, z$), and the viewing direction is a 2D vector ($\theta, \phi$).

These 5D points serve as the input to the MLP. This field of rays with 5D points is referred to as the neural radiance field in the paper.

The MLP network predicts each input point’s color $\mathbf{c}$ and volume density $\sigma$.

The MLP architecture is displayed in Figure 13.

An important point to note here is that:

We encourage the representation to be multiview consistent by restricting the network to predict the volume density $\sigma$ as a function of only the location $\mathbf{x}$ while allowing the RGB color $\mathbf{c}$ to be predicted as a function of both location and viewing direction.

With all that theory out of the way, we can start building the NeRF architecture in TensorFlow. So, let’s open the file pyimagesearch/nerf.py and start digging.

    # import the necessary packages
    from tensorflow.keras.layers import Dense
    from tensorflow.keras.layers import concatenate
    from tensorflow.keras import Input
    from tensorflow.keras import Model

We begin with importing our necessary packages on Lines 2-5.

    def get_model(lxyz, lDir, batchSize, denseUnits, skipLayer):
        # build input layer for rays
        rayInput = Input(shape=(None, None, None, 2 * 3 * lxyz + 3),
            batch_size=batchSize)

        # build input layer for direction of the rays
        dirInput = Input(shape=(None, None, None, 2 * 3 * lDir + 3),
            batch_size=batchSize)

        # creating an input for the MLP
        x = rayInput
        for i in range(8):
            # build a dense layer
            x = Dense(units=denseUnits, activation="relu")(x)

            # check if we have to include residual connection
            if i % skipLayer == 0 and i > 0:
                # inject the residual connection
                x = concatenate([x, rayInput], axis=-1)

        # get the sigma value
        sigma = Dense(units=1, activation="relu")(x)

        # create the feature vector
        feature = Dense(units=denseUnits)(x)

        # concatenate the feature vector with the direction input and put
        # it through a dense layer
        feature = concatenate([feature, dirInput], axis=-1)
        x = Dense(units=denseUnits//2, activation="relu")(feature)

        # get the rgb value
        rgb = Dense(units=3, activation="sigmoid")(x)

        # create the nerf model
        nerfModel = Model(inputs=[rayInput, dirInput],
            outputs=[rgb, sigma])

        # return the nerf model
        return nerfModel

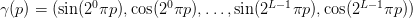

Next, on Lines 7-46, we create our MLP model in the function called get_model. This function takes in the following inputs:

- lxyz: the number of dimensions used for positional encoding of the xyz coordinates

- lDir: the number of dimensions used for positional encoding of the direction vector

- batchSize: the batch size of the data

- denseUnits: the number of units in each layer of MLP

- skipLayer: the layer at which we want the skip connection

On Lines 9-14, we define the rayInput and the dirInput layers. Next, we create the MLP with the skip connection (Lines 17-25).

To align with the paper (multiview consistency), only the rayInput is passed through the model to produce sigma (volume density) and a feature vector on Lines 28-31. Finally, the feature vector is concatenated with the dirInput (Line 35) to produce color (Line 39).

On Lines 42 and 43, we build the nerfModel using the Keras functional API. Finally, we return the nerfModel on Line 46.
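To see where the skip connection lands, the short sketch below tracks the width of the tensor entering each of the eight Dense layers in the loop of get_model. The helper name `dense_input_widths` is hypothetical, and the argument values (lxyz=10, 256 units, skip layer 4) are the settings reported in the NeRF paper, assumed here for illustration because this part of the tutorial does not show the configuration.

```python
def dense_input_widths(lxyz, dense_units, skip_layer, num_layers=8):
    # width of the positionally encoded xyz input, as in get_model
    ray_width = 2 * 3 * lxyz + 3
    widths = []
    current = ray_width
    for i in range(num_layers):
        # record what the i-th Dense layer receives
        widths.append(current)
        current = dense_units
        if i % skip_layer == 0 and i > 0:
            # the encoded input is concatenated back onto the activations
            current = dense_units + ray_width
    return widths


print(dense_input_widths(lxyz=10, dense_units=256, skip_layer=4))
# [63, 256, 256, 256, 256, 319, 256, 256]
```

With these values, the encoded input re-enters the network once, right before the sixth Dense layer.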

Volume Rendering

In this section, we study how to achieve volume rendering. We use the predicted color and volume density from the MLP to render the 3D scene.

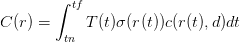

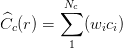

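The predictions from the network are plugged into the classical volume rendering equation to derive the color of one particular point. The equation is given below:

$$C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,dt$$

Sounds complicated?

Let us break this equation down into simple parts.

- The term $C(\mathbf{r})$ is the color of the point of the object.

- $\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$ is the ray that is fed into the network, where the variables stand for the following:

  - $\mathbf{o}$ is the origin of the ray

  - $\mathbf{d}$ is the direction of the ray

  - $t$ is the set of uniform samples between the near and far points used for the integral

- $\sigma(\mathbf{r}(t))$ is the volume density, which can also be interpreted as the differential probability of the ray terminating at the point $\mathbf{r}(t)$.

- $\mathbf{c}(\mathbf{r}(t), \mathbf{d})$ is the color of the ray at the point $\mathbf{r}(t)$.

These are the building blocks of the equation. Apart from these, there is another term:

$$T(t) = \exp\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,ds\right)$$

This represents the transmittance along the ray from the near point $t_n$ to the current point $t$.

Now that we have all the terms together, we can finally make sense of the equation.

The color of an object in the 3D space is defined as the sum, over the viewing range from $t_n$ to $t_f$, of the product of the transmittance, the volume density, and the color at each point along the ray.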
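In practice, the integral cannot be evaluated exactly, so it is estimated from the sampled points. The NeRF paper uses the quadrature rule below, where $\sigma_i$ and $\mathbf{c}_i$ are the density and color predicted at sample $i$ and $\delta_i$ is the distance between adjacent samples:

```latex
\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - \exp(-\sigma_i \delta_i)\right) \mathbf{c}_i,
\qquad
T_i = \exp\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right),
\qquad
\delta_i = t_{i+1} - t_i
```

The factor $1 - \exp(-\sigma_i \delta_i)$ is the alpha value in render_image_depth, and $T_i$ corresponds to its exclusive cumulative product of $1 - \alpha$.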

Let’s look at how to express this in code. First, we will look at the render_image_depth in the pyimagesearch/utils.py file.

    def render_image_depth(rgb, sigma, tVals):
        # squeeze the last dimension of sigma
        sigma = sigma[..., 0]

        # calculate the delta between adjacent tVals
        delta = tVals[..., 1:] - tVals[..., :-1]
        deltaShape = [BATCH_SIZE, IMAGE_HEIGHT, IMAGE_WIDTH, 1]
        delta = tf.concat(
            [delta, tf.broadcast_to([1e10], shape=deltaShape)], axis=-1)

        # calculate alpha from sigma and delta values
        alpha = 1.0 - tf.exp(-sigma * delta)

        # calculate the exponential term for easier calculations
        expTerm = 1.0 - alpha
        epsilon = 1e-10

        # calculate the transmittance and weights of the ray points
        transmittance = tf.math.cumprod(expTerm + epsilon, axis=-1,
            exclusive=True)
        weights = alpha * transmittance

        # build the image and depth map from the points of the rays
        image = tf.reduce_sum(weights[..., None] * rgb, axis=-2)
        depth = tf.reduce_sum(weights * tVals, axis=-1)

        # return rgb, depth map and weights
        return (image, depth, weights)

On Lines 15-42, we build a render_image_depth function, which takes as inputs:

- rgb: the red-green-blue color matrix of the ray points

- sigma: the volume density of the sample points

- tVals: the sample points

It produces the volume-rendered image (image), its depth map (depth), and the weights (required for hierarchical sampling).

- On Line 17, we reshape sigma for ease of calculation. Next, we calculate the space (delta) between adjacent tVals (Lines 20-23).

- Next, we create alpha using sigma and delta (Line 26).

- We create the transmittance and weight vector (Lines 33-35).

- On Lines 38 and 39, we create the image and depth map.

Finally, we return image, depth, and weights on Line 42.
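To build intuition for what the weights do, the same computation can be traced on a single toy ray with NumPy. This is a simplified sketch, not the batched TensorFlow function: the name `render_ray` and all sample values are made up, and the ray passes through empty space, then a dense red sample, then a green sample hidden behind it.

```python
import numpy as np


def render_ray(rgb, sigma, t_vals):
    # spacing between adjacent samples; the last interval is treated as unbounded
    delta = np.concatenate([t_vals[1:] - t_vals[:-1], [1e10]])
    # opacity contributed by each sample
    alpha = 1.0 - np.exp(-sigma * delta)
    # exclusive cumulative product: light surviving up to, but not including, sample i
    transmittance = np.concatenate([[1.0], np.cumprod(1.0 - alpha + 1e-10)[:-1]])
    weights = alpha * transmittance
    # weighted sums give the pixel color and the expected depth
    color = np.sum(weights[:, None] * rgb, axis=0)
    depth = np.sum(weights * t_vals)
    return color, depth, weights


t_vals = np.array([2.0, 3.0, 4.0, 5.0])
sigma = np.array([0.0, 50.0, 50.0, 0.0])
rgb = np.array([[0, 0, 1], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=np.float64)
color, depth, weights = render_ray(rgb, sigma, t_vals)
print(color, depth)
```

The dense red sample absorbs nearly all of the ray, so the green sample behind it receives almost no weight. The rendered color comes out red and the depth sits at the red sample.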

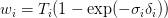

Photometric Loss

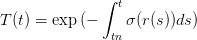

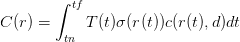

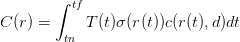

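We refer to the loss function used by NeRF as the photometric loss. This is computed by comparing the colors of the synthesized image with the ground-truth image. Mathematically this can be expressed as:

$$\mathcal{L} = \sum_{\mathbf{r} \in \mathcal{R}} \left\lVert \hat{C}(\mathbf{r}) - C_{gt}(\mathbf{r}) \right\rVert_2^2$$

where $\mathcal{R}$ is the set of rays in a batch, $\hat{C}(\mathbf{r})$ is the color rendered for the ray $\mathbf{r}$, and $C_{gt}(\mathbf{r})$ is the ground-truth color of the corresponding pixel.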
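A minimal NumPy sketch of this comparison is shown below. It averages the squared differences instead of summing them, which is a common implementation choice that changes the loss only by a constant factor. The function name `photometric_loss` and the pixel values are illustrative assumptions.

```python
import numpy as np


def photometric_loss(predicted, target):
    # mean of the squared per-channel differences between rendered and real pixels
    return np.mean(np.square(predicted - target))


# two pixels with RGB values in [0, 1]; only the red channel of the first is off
target = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
predicted = np.array([[0.5, 0.0, 0.0], [0.0, 1.0, 0.0]])
loss = photometric_loss(predicted, target)
print(loss)
```

The loss is zero only when every rendered pixel matches its ground-truth color, which is the signal used to update the MLP.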

Breather

Let’s take a moment here to realize how far we have come. Take a deep breath like our friend in Figure 14.

We have learned about computer graphics and their fundamentals in the first part of our blog series. In this tutorial, we have taken those concepts and applied them to 3D scene representation. Here we have:

- Built an image and a ray dataset from the given json files.

- Sampled points from the rays using the random sampling strategy.

- Passed these points into the NeRF MLP.

- Rendered a novel image using the color and volume density predicted by the MLP.

- Established a loss function (photometric loss) with which we will optimize the parameters of the MLP.

These steps are sufficient to train a NeRF model and render novel views. However, this vanilla architecture will eventually produce renderings of low quality. To mitigate these issues, Mildenhall et al. (2020) propose additional enhancements.

In the next section, we will learn about these enhancements and their implementation using TensorFlow.

Enhancing NeRF

Mildenhall et al. (2020) propose two methods to enhance the renderings from NeRF.

- positional encoding

- hierarchical sampling

Positional Encoding

Positional Encoding is a popular encoding format used in architectures like transformers. Mildenhall et al. (2020) justify using this to better render high-frequency features such as texture and details.

Rahaman et al. (2019) suggest that deep networks are biased toward learning low-frequency functions. To bypass this problem, NeRF proposes mapping the input vector $(x, y, z, \theta, \phi)$ to a higher-dimensional representation.

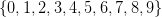

Let’s say we have 10 positions indexed as $\{0, 1, 2, \dots, 9\}$. We can represent these indices in the binary system.

The binary system is an easy encoding system. The only problem we face here is that the binary system is filled with zeros, making it a sparse representation. We would want to make this system continuous and compact.

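The encoding function used in NeRF is as follows:

$$\gamma(p) = \left(\sin(2^0 \pi p), \cos(2^0 \pi p), \dots, \sin(2^{L-1} \pi p), \cos(2^{L-1} \pi p)\right)$$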

To draw a parallel between the binary and the NeRF encoding, let’s look at Figure 16.

The sine and cosine functions make the encoding continuous, and the $2^i$ frequency term plays the role of the place values in the binary system.

A visualization of the positional encoding function

We can create this fairly simply in a function called encoder_fn in the pyimagesearch/encoder.py file.

    # import the necessary packages
    import tensorflow as tf

    def encoder_fn(p, L):
        # build the list of positional encodings
        gamma = [p]

        # iterate over the number of dimensions in time
        for i in range(L):
            # insert sine and cosine of the product of current dimension
            # and the position vector
            gamma.append(tf.sin((2.0 ** i) * p))
            gamma.append(tf.cos((2.0 ** i) * p))

        # concatenate the positional encodings into a positional vector
        gamma = tf.concat(gamma, axis=-1)

        # return the positional encoding vector
        return gamma

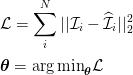

We start by importing tensorflow (Line 2). On Lines 4-19, we define the encoder function, which takes in the following parameters:

- p: position of each element to be encoded

- L: the dimension into which the encoding will take place

On Line 6, we define a list that will hold the positional encoding. Next, we iterate over dimensions and append the encoded values into the list (Lines 9-13). Lines 16-19 are used to convert the same list into a tensor and finally return it.
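A quick way to check the size of the encoded vector is to mirror encoder_fn in NumPy and inspect the output shape. The function name `encode` is a stand-in for this sketch, and L=10 is the value the NeRF paper uses for positions, assumed here for illustration.

```python
import numpy as np


def encode(p, L):
    # keep the raw input, then append sin/cos at frequencies 2^0 ... 2^(L-1)
    gamma = [p]
    for i in range(L):
        gamma.append(np.sin((2.0 ** i) * p))
        gamma.append(np.cos((2.0 ** i) * p))
    return np.concatenate(gamma, axis=-1)


points = np.zeros((5, 3))        # five xyz points at the origin
encoded = encode(points, L=10)
print(encoded.shape)             # (5, 63)
```

Each 3D point grows to 3 + 2 * 3 * L values, which matches the `2 * 3 * lxyz + 3` width of the ray input in get_model.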

Hierarchical Sampling

Mildenhall et al. (2020) found another problem with the original structure. The random sampling method would sample N points along each camera ray. This means we don’t have any prior understanding of where it should sample. That ultimately leads to an inefficient rendering.

They propose the following solution to remedy this:

- Build two identical NeRF MLP models, the coarse and fine network.

- Sample a set of $N_c$ points along the camera ray using the random sampling strategy, as shown in Figure 12. These points will be used to query the coarse network.

- The output of the coarse network is used to produce a more informed sampling of points along each ray. These samples are biased towards the more relevant parts of the 3D scene.

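To do this, we rewrite the color equation:

$$\hat{C}_c(\mathbf{r}) = \sum_{i=1}^{N_c} w_i \mathbf{c}_i$$

as a weighted sum of all sample colors, where the term $w_i = T_i\left(1 - \exp(-\sigma_i \delta_i)\right)$. Here $T_i$ is the transmittance accumulated up to sample $i$, and $\delta_i$ is the distance between adjacent samples.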

- The weights, when normalized, produce a piecewise-constant probability density function.

The entire procedure of turning the weights into a probability density function is visualized in Figure 18.

- From the probability density function, we sample the second set of $N_f$ locations using the inverse transform sampling method, as shown in Figure 19.

- Now we have both the $N_c$ and $N_f$ sets of sampled points. We send these points to the fine network to produce the final rendered color of the ray.

This process of converting weights to a new set of sample points can be expressed through a function called sample_pdf. First, let’s refer to the utils.py file inside the pyimagesearch folder.

    def sample_pdf(tValsMid, weights, nF):
        # add a small value to the weights to prevent it from nan
        weights += 1e-5

        # normalize the weights to get the pdf
        pdf = weights / tf.reduce_sum(weights, axis=-1, keepdims=True)

        # from pdf to cdf transformation
        cdf = tf.cumsum(pdf, axis=-1)

        # start the cdf with 0s
        cdf = tf.concat([tf.zeros_like(cdf[..., :1]), cdf], axis=-1)

        # get the sample points
        uShape = [BATCH_SIZE, IMAGE_HEIGHT, IMAGE_WIDTH, nF]
        u = tf.random.uniform(shape=uShape)

        # get the indices of the points of u when u is inserted into cdf in a
        # sorted manner
        indices = tf.searchsorted(cdf, u, side="right")

        # define the boundaries
        below = tf.maximum(0, indices-1)
        above = tf.minimum(cdf.shape[-1]-1, indices)
        indicesG = tf.stack([below, above], axis=-1)

        # gather the cdf according to the indices
        cdfG = tf.gather(cdf, indicesG, axis=-1,
            batch_dims=len(indicesG.shape)-2)

        # gather the tVals according to the indices
        tValsMidG = tf.gather(tValsMid, indicesG, axis=-1,
            batch_dims=len(indicesG.shape)-2)

        # create the samples by inverting the cdf
        denom = cdfG[..., 1] - cdfG[..., 0]
        denom = tf.where(denom < 1e-5, tf.ones_like(denom), denom)
        t = (u - cdfG[..., 0]) / denom
        samples = (tValsMidG[..., 0] + t *
            (tValsMidG[..., 1] - tValsMidG[..., 0]))

        # return the samples
        return samples

This code snippet has been inspired by the official NeRF implementation. On Lines 44-86, we create a function called sample_pdf that takes in the following parameters:

- tValsMid: the midpoints between two adjacent tVals

- weights: the weights used in the volume rendering function

- nF: number of points used by the fine model

On Lines 46-49, we define the probability density function from the weights and then convert the same into a cumulative distribution function (cdf). This is then converted back into sample points for the fine model using inverse transform sampling (Lines 52-86).
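The idea behind inverse transform sampling is easier to see on a single ray. The sketch below is a simplified NumPy version and not the batched sample_pdf above: it takes bin edges directly instead of tValsMid, and it leans on np.interp to invert the cdf. The function name `sample_from_weights`, the bin edges, and the weights are made up for illustration.

```python
import numpy as np


def sample_from_weights(bin_edges, weights, num_samples, rng):
    # normalize the weights into a piecewise-constant pdf over the bins
    pdf = (weights + 1e-5) / np.sum(weights + 1e-5)
    # cdf evaluated at the bin edges, starting at 0 and ending at 1
    cdf = np.concatenate([[0.0], np.cumsum(pdf)])
    # draw uniform numbers and map them through the inverse cdf
    u = rng.uniform(size=num_samples)
    return np.interp(u, cdf, bin_edges)


rng = np.random.default_rng(seed=0)
bin_edges = np.linspace(2.0, 6.0, 5)       # four bins: [2,3], [3,4], [4,5], [5,6]
weights = np.array([0.0, 0.0, 1.0, 0.0])   # the coarse pass found density in [4,5]
samples = sample_from_weights(bin_edges, weights, 1000, rng)
print(samples.min(), samples.max())
```

Almost all of the new samples fall inside the bin where the coarse weights are large, which is how the fine network gets to spend its capacity on the relevant part of the ray.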

We recommend this supplementary reading material to understand hierarchical sampling better.

Credits

This tutorial was inspired by the work of Mildenhall et al. (2020).

Summary

We have gone through the core concepts proposed in the NeRF paper and implemented them using TensorFlow.

We can recall what we have learned so far in the following steps:

- Building the image and ray dataset for 5D scene representation

- Sampling points from the rays using any of the sampling strategies

- Passing these points through the NeRF MLP model

- Volume rendering based on the output of the MLP model

- Calculating the photometric loss

- Using positional encoding and hierarchical sampling to improve the quality of rendering

In next week’s tutorial, we will cover how to use all of these concepts to train the NeRF model. We will also render a 360-degree video of a 3D scene from 2D images.

We hope you enjoyed this week’s tutorial, and as always, you can download the source code and try it out yourself.

Citation Information

Gosthipaty, A. R., and Raha, R. “Computer Graphics and Deep Learning with NeRF using TensorFlow and Keras: Part 2,” PyImageSearch, 2021, https://hcl.pyimagesearch.com/2021/11/17/computer-graphics-and-deep-learning-with-nerf-using-tensorflow-and-keras-part-2/

@article{Gosthipaty_Raha_2021_pt2,

author = {Aritra Roy Gosthipaty and Ritwik Raha},

title = {Computer Graphics and Deep Learning with {NeRF} using {TensorFlow} and {Keras}: Part 2},

journal = {PyImageSearch},

year = {2021},

note = {https://hcl.pyimagesearch.com/2021/11/17/computer-graphics-and-deep-learning-with-nerf-using-tensorflow-and-keras-part-2/},

}
