In this tutorial, you will learn the architectural details of Progressive GAN, which enable it to generate high-resolution images. In addition, we will see how we can use Torch Hub to import a pre-trained PGAN model and use it in our projects to generate high-quality images.

This lesson is part 4 of a 6-part series on Torch Hub:

- Torch Hub Series #1: Introduction to Torch Hub

- Torch Hub Series #2: VGG and ResNet

- Torch Hub Series #3: YOLO v5 and SSD — Models on Object Detection

- Torch Hub Series #4: PGAN — Model on GAN (this tutorial)

- Torch Hub Series #5: MiDaS — Model on Depth Estimation

- Torch Hub Series #6: Image Segmentation

To learn how the Progressive GAN works and can be used to generate high-resolution images, just keep reading.

Torch Hub Series #4: PGAN — Model on GAN

Topic Description

The introduction of Generative Adversarial Networks (GANs) in 2014, and their ability to effectively model arbitrary data distributions, took the computer vision community by storm. The simplicity of the two-player paradigm and the surprisingly fast sample generation at inference time were the two main factors that made GANs attractive for real-world applications.

However, for a long time after their emergence, the generative capabilities of GANs were restricted to relatively low-resolution datasets (e.g., MNIST (28×28 images), CIFAR10 (32×32 images), etc.). This can be attributed to the fact that GANs struggled to capture the many modes of the underlying data distribution as well as the intricate low-level details of high-resolution images. This was a serious practical limitation, since real-world applications usually demand sample generation at high resolutions.

Owing to this, the computer vision community witnessed concerted efforts to improve the underlying network architectures and introduce new training techniques to stabilize the training process and improve the quality of generated samples.

In this tutorial, we will look into one such technique, namely, progressive growing, that played a pivotal role in bridging this gap and enabling GANs to generate high-quality samples at higher resolutions, which is essential for various practical applications. Specifically, we will discuss the following in detail:

- The architectural details of Progressive GAN (PGAN)

- The progressive growing technique and salient features that enable PGAN to generate high-resolution samples with stable and efficient training

- Importing pre-trained generative models like PGAN from Torch Hub for quick and seamless integration into our deep learning projects

- Generating high-resolution face images using a pre-trained PGAN model

- Walking through the latent space of a trained PGAN

Progressive GAN Architecture

For a long time, Generative Adversarial Networks have struggled to generate high-resolution images. This can be mainly attributed to the fact that high-resolution images comprise a lot of information in the form of high-level structure and intricate low-level details, which are hard to learn all at once.

This can be understood in terms of the two-player training paradigm that forms an integral part of training a GAN. As discussed in a previous tutorial, the core of GAN training is to update the generator at each step so that it fools the discriminator. However, when a GAN is employed to generate high-resolution images, it is difficult for the generator to simultaneously model the high-level structure and the intricate low-level details of the images. This is especially true during the initial stages of training, when its weights are more or less random.

This gives the discriminator the upper hand, since it can easily tell the generated samples apart from the real ones. The result is an imbalance between the generator and the discriminator, and this balance is pivotal for the optimal convergence of a GAN.

Furthermore, in another tutorial, we discussed that GANs are prone to mode collapse. The problem described above can also be viewed from the perspective of modeling a distribution, which is the objective of a GAN. Specifically, it is difficult for the generator to effectively capture all the modes of the data distribution at once. This problem becomes more severe when the data is high-resolution and comprises multiple modes related to both high-level structure and finer semantic details.

Progressive GAN aims to mitigate the aforementioned problem by dividing the complex task of generating high-resolution images into simpler subtasks. This is mainly implemented by asking the generator to solve simpler generative tasks at each step.

Specifically, during the training process, the generator is initially required to learn low-resolution versions of the images. As it gradually gets better at generating images at lower resolutions, the resolution of the images it is asked to generate increases. This keeps the final goal of generating high-resolution images intact, but it does not overburden the generator all at once and allows it to capture the high- and low-level details of the images gradually. The motivation behind this technique is captured in Figure 1.

Figure 2 illustrates the progressive growing paradigm that forms the basis for PGAN. Specifically, we start with a generator that generates images at a low resolution (say, 8×8) and a corresponding discriminator that takes as input the generated 8×8 images along with real images (originally 1024×1024) downsampled to 8×8.

At this point, the generator learns to model the high-level structure of the image distribution. Gradually, new layers are added synchronously to the generator and the discriminator, enabling them to process images at higher resolutions. This enables the generator to follow a smooth and gradual learning curve and slowly learn the fine details in higher-resolution images.
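To make the schedule concrete, here is a small NumPy sketch. It assumes the example values from the text (a start at 8×8, doubling at every stage up to 1024×1024) and shrinks the real images by block averaging so they match the resolution the generator currently produces. The helpers resolution_schedule and downsample_real are hypothetical, and the official PGAN code may resize real images differently.

```python
import numpy as np

def resolution_schedule(start=8, final=1024):
    """Resolutions visited when the output size doubles at every stage."""
    resolutions = [start]
    while resolutions[-1] < final:
        resolutions.append(resolutions[-1] * 2)
    return resolutions

def downsample_real(image, size):
    """Shrink an (H, W, C) image to (size, size, C) by averaging
    non-overlapping blocks (assumes H == W and H divisible by size)."""
    h, w, c = image.shape
    factor = h // size
    blocks = image.reshape(size, factor, size, factor, c)
    return blocks.mean(axis=(1, 3))

# a random stand-in for one 1024x1024 RGB training image
real = np.random.default_rng(0).random((1024, 1024, 3))

for res in resolution_schedule():
    # at this stage the discriminator compares generator outputs
    # against real images shrunk to the same resolution
    print(res, downsample_real(real, res).shape)
```

Block averaging keeps the overall color statistics of the real image, so the discriminator at 8×8 still sees a faithful, if coarse, version of each face.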

The paradigm of progressive growth can also be viewed from a hypothesis estimation perspective. Instead of requiring the generator to learn a complex function at once, we ask it to learn simpler sub-functions at each step. Owing to this, the generator is required to capture fewer modes at a particular time step. Therefore, it can gradually capture the different data distribution modes as the training progresses.

Furthermore, since the generator is presented with the simpler task of generating low-resolution images, especially at the initial stages of training, it can do reasonably well on these tasks, which maintains the balance between the strengths of the discriminator and the generator.

Progressive Growing Technique

In the previous section, we discussed how PGAN approaches the complex problem of generating high-resolution images by dividing it into simpler tasks. We also observed that this requires the generator and discriminator architectures to grow progressively and synchronously, so that they gradually process images at higher resolutions, starting from low-resolution ones.

In this part of the tutorial, we will discuss how the PGAN architecture implements the progressive growing technique. Specifically, the overall goal is to slowly add layers to both the generator and discriminator at each stage.

Figure 3 shows the gist of the progressive growing paradigm. Formally, we want to gradually add layers in the generator and discriminator and ensure that they are smoothly and gradually integrated with the networks without any abrupt changes to the training process.

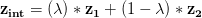

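In the case of the generator (i.e., Figure 3, top), let's assume that at a given stage we get an N×N input, as shown, and we want to double the output resolution of the generator. To achieve this, we first use the Upsample() operation, which increases the spatial dimensions of the image by a factor of 2. In addition, we add a new convolution layer that outputs a 2N×2N image (shown on the right branch). Notice that the final output is the weighted sum of the left and right branch outputs, with weighting coefficients $(1 - \alpha)$ and $\alpha$, respectively.

Let's now understand the role of $\alpha$. During the transition to the new resolution, $\alpha$ is increased linearly from 0 to 1 as training progresses.

Initially, the new convolution layer is randomly initialized, so it does not compute any meaningful function or output. Thus, the output from the convolution layer is given a low non-zero weighting coefficient (i.e., a small $\alpha$), and the final output comes almost entirely from the upsampling branch, which has no learnable parameters.

Even though the coefficient $\alpha$ is small, gradients still flow through the new convolution layer, so its weights gradually learn to produce meaningful outputs. As training progresses, $\alpha$ is increased toward 1, and the contribution of the non-learnable Upsample() branch is phased out. This allows the newly added layer to integrate smoothly with the network instead of disrupting the layers that have already been trained.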
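The fade-in described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the PGAN implementation: the arrays are random stand-ins for the previous stage's N×N output and the new layers' 2N×2N output, nearest-neighbor repetition plays the role of Upsample(), and fade_in, alpha_at, and the transition_steps length are hypothetical names chosen for the example.

```python
import numpy as np

def upsample_nearest(x):
    """Double the spatial size of a (C, H, W) array by repeating pixels;
    like Upsample(), this branch has no learnable parameters."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def fade_in(old_rgb, new_rgb, alpha):
    """Blend the upsampled output of the previous stage (left branch)
    with the output of the newly added layers (right branch)."""
    return (1.0 - alpha) * upsample_nearest(old_rgb) + alpha * new_rgb

def alpha_at(step, transition_steps):
    """Linear ramp of alpha from 0 to 1 over the transition, then held at 1."""
    return min(1.0, step / transition_steps)

rng = np.random.default_rng(0)
old = rng.standard_normal((3, 4, 4))   # N x N output of the previous stage
new = rng.standard_normal((3, 8, 8))   # 2N x 2N output of the new layers

for step in (0, 250, 500, 1000):
    alpha = alpha_at(step, transition_steps=1000)
    out = fade_in(old, new, alpha)
    print(step, alpha, out.shape)
```

At α = 0 the output is exactly the upsampled previous image, and at α = 1 it is exactly the new branch, so the network's output changes gradually rather than abruptly when a layer is added.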

A similar approach is also followed for the discriminator, as shown in Figure 3 (bottom), to add layers gradually. The only difference here is that the discriminator gets the output image from the generator and downsamples the spatial dimension as shown. Note that layers are simultaneously added to both the discriminator and generator at a given stage since the discriminator has to process the output image from the generator at any given time step.
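A matching sketch for the discriminator side, under the same assumptions: 2×2 average pooling stands in for the downsampling branch, toy_new_block is a hypothetical placeholder for the newly added trainable layers, and the fromRGB channel projections used in the real network are omitted so that both branches can work directly on images.

```python
import numpy as np

def avg_pool2(x):
    """Halve the spatial size of a (C, H, W) array with 2x2 average pooling."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def discriminator_input_blend(image, new_block, alpha):
    """Mirror of the generator fade-in: the left branch only downsamples
    the 2N x 2N input, the right branch runs the newly added layers;
    both produce N x N maps for the rest of the discriminator."""
    return (1.0 - alpha) * avg_pool2(image) + alpha * new_block(image)

def toy_new_block(x):
    """Hypothetical stand-in for the new layers: any map from 2N x 2N to N x N."""
    return avg_pool2(np.tanh(x))

rng = np.random.default_rng(0)
image = rng.standard_normal((3, 8, 8))   # 2N x 2N image from the generator
features = discriminator_input_blend(image, toy_new_block, alpha=0.3)
print(features.shape)
```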

Now that we have discussed the PGAN architecture, let's go ahead and see the network in action!

The CelebA Dataset

In this tutorial, we will be using the CelebA dataset, which consists of face images of various celebrities; its high-resolution CelebA-HQ variant is the dataset on which our pre-trained PGAN was trained, making it an apt choice for our tutorial. Specifically, the CelebA dataset was collected by researchers at MMLAB, The Chinese University of Hong Kong, and consists of 202,599 face images that belong to 10,177 unique celebrities. In addition, the dataset provides facial landmarks and binary attribute annotations for the images, which help localize the facial features and represent semantic features.

Configuring Your Development Environment

To follow this guide, you need to have the PyTorch library, torchvision module, and matplotlib library installed on your system.

Luckily, these packages are quite easy to install using pip:

    $ pip install torch torchvision
    $ pip install matplotlib


Project Structure

Now that we have discussed the intuition behind PGAN and the progressive growing technique, we are ready to dive into code and see our PGAN model in action. We start by describing the project structure of our directory.

    ├── output
    ├── pyimagesearch
    │   ├── config.py
    ├── predict.py
    └── analyze.py

Start by accessing the “Downloads” section of this tutorial to retrieve the source code and example images.

In our project directory, the output folder stores the plots of the images generated by our pre-trained PGAN model and of our latent space analysis.

The config.py file in the pyimagesearch folder stores our code’s parameters, initial settings, and configurations.

Finally, the predict.py file enables us to generate high-resolution images from our Torch Hub pre-trained PGAN model, and the analyze.py file stores the code for analyzing and walking through the latent space of the PGAN model.

Creating the Configuration File

We start by discussing the config.py file, which contains the parameter configurations we will use for our tutorial.

    # import the necessary packages
    import torch
    import os

    # define gpu or cpu usage
    DEVICE = "cuda" if torch.cuda.is_available() else "cpu"
    USE_GPU = True if DEVICE == "cuda" else False

    # define the number of images to generate and interpolate
    NUM_IMAGES = 8
    NUM_INTERPOLATION = 8

    # define the path to the base output directory
    BASE_OUTPUT = "output"

    # define the paths to the generated image samples and the latent
    # space interpolation plot
    SAVE_IMG_PATH = os.path.join(BASE_OUTPUT, "image_samples.png")
    INTERPOLATE_PLOT_PATH = os.path.join(BASE_OUTPUT, "interpolate.png")

We start by importing the necessary packages on Lines 2 and 3. Then, on Lines 6 and 7, we define the DEVICE and USE_GPU parameters, which determine whether we will use a GPU or CPU for generating our images based on availability.

On Line 10, we define the NUM_IMAGES parameter, which defines the number of output images we will visualize for our PGAN model. Furthermore, the NUM_INTERPOLATION parameter defines the number of points we will use for linear interpolation and walking through our latent space (Line 11).

Finally, we define the path to our output folder (i.e., BASE_OUTPUT) on Line 14 and the corresponding paths for storing the generated images (i.e., SAVE_IMG_PATH) and latent space interpolation plots (i.e., INTERPOLATE_PLOT_PATH) on Lines 18 and 19.
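One practical detail is worth noting here: save_image() writes to the given path but does not create missing folders, so both scripts assume the output directory already exists. If you are not using the downloaded project structure, a hedged addition to config.py (not part of the original file) is to create the folder when the configuration is loaded:

```python
import os

# define the path to the base output directory (as in config.py)
BASE_OUTPUT = "output"

# hypothetical addition: create the folder if it is missing so that
# torchvision.utils.save_image() has somewhere to write its PNG files
os.makedirs(BASE_OUTPUT, exist_ok=True)

SAVE_IMG_PATH = os.path.join(BASE_OUTPUT, "image_samples.png")
INTERPOLATE_PLOT_PATH = os.path.join(BASE_OUTPUT, "interpolate.png")
```

Passing exist_ok=True makes the call a no-op when the folder is already there, so it is safe to run every time the configuration is imported.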

Generating Images Using PGAN Model

Now that we have defined our configuration parameters, we can generate face images using a pre-trained PGAN model.

In the previous tutorials of this series, we observed how Torch Hub is used to import pre-trained PyTorch models and seamlessly integrate them with our deep learning pipelines and projects. Here, we will use the Torch Hub API to import a PGAN model pre-trained on the CelebA-HQ dataset.

Let’s open the predict.py file from our project directory and get started.

    # USAGE
    # python predict.py

    # import the necessary packages
    from pyimagesearch import config
    import matplotlib.pyplot as plt
    import torchvision
    import torch

    # load the pre-trained PGAN model
    model = torch.hub.load("facebookresearch/pytorch_GAN_zoo:hub",
        "PGAN", model_name="celebAHQ-512", pretrained=True,
        useGPU=config.USE_GPU)

    # sample random noise vectors
    (noise, _) = model.buildNoiseData(config.NUM_IMAGES)

    # pass the sampled noise vectors through the pre-trained generator
    with torch.no_grad():
        generatedImages = model.test(noise)

    # visualize the generated images
    grid = torchvision.utils.make_grid(
        generatedImages.clamp(min=-1, max=1), nrow=config.NUM_IMAGES,
        scale_each=True, normalize=True)
    plt.figure(figsize=(20, 20))
    plt.imshow(grid.permute(1, 2, 0).cpu().numpy())

    # save generated image visualizations
    torchvision.utils.save_image(generatedImages.clamp(min=-1, max=1),
        config.SAVE_IMG_PATH, nrow=config.NUM_IMAGES, scale_each=True,
        normalize=True)

    # display the figure window (needed when running as a script)
    plt.show()

On Lines 5-8, we import the necessary packages and modules, including our config file from the pyimagesearch folder (Line 5) and the matplotlib library (Line 6), to visualize our generated images. In addition, we import the torchvision module (Line 7) and the torch library (Line 8) to access various PyTorch functionalities.

On Lines 11-13, we use the torch.hub.load function to load our pre-trained PGAN model. Notice that the function takes the following arguments:

- The location where the model is stored. This corresponds to the PyTorch GAN Zoo repository (i.e., facebookresearch/pytorch_GAN_zoo:hub, where the :hub suffix selects the repository's hub branch), which provides models and trained weights for various GANs trained on different datasets.

- The name of the model that we intend to load (i.e., PGAN).

- The model_name argument (i.e., celebAHQ-512), which selects the dataset-specific pre-trained weights for the given model.

- The pretrained parameter, which, when set to True, directs the Torch Hub API to download and load the pre-trained weights of the selected model.

- The useGPU Boolean parameter, which signifies whether the model is loaded on the GPU or CPU for inference (defined by config.USE_GPU).

Since we selected the celebAHQ-512 weights, the model generates images of size 512×512.

Next, on Line 16, we use the built-in buildNoiseData() function of our PGAN model, which takes as a parameter the number of images we intend to generate (i.e., config.NUM_IMAGES) and samples that many 512-dimensional random noise vectors. The second value it returns is not needed here, so we discard it with _.
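The sketch below shows, in NumPy, what such a batch of noise vectors looks like. It assumes the vectors are drawn from a standard normal distribution (a common choice for GAN latent spaces, and our understanding of what buildNoiseData() does, but not its actual code); sample_noise and its seed argument are hypothetical.

```python
import numpy as np

NUM_IMAGES = 8        # matches config.NUM_IMAGES
LATENT_DIM = 512      # latent size of the celebAHQ-512 PGAN

def sample_noise(num_images, latent_dim=LATENT_DIM, seed=None):
    """Draw one standard-normal noise vector per image to generate
    (assumption: Gaussian latent space, float32 like PyTorch tensors)."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal((num_images, latent_dim)).astype(np.float32)

noise = sample_noise(NUM_IMAGES, seed=42)
print(noise.shape, noise.dtype)
```

Fixing the seed is a convenient way to regenerate the same faces later, since the trained generator maps a given noise vector to the same image every time.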

Since we are only using a pre-trained model for inference, we direct PyTorch to switch off the gradient computation with the help of torch.no_grad() as shown on Line 19. We then provide our random noise vectors as input to the built-in test() function of the Torch Hub PGAN model that processes the input through the pre-trained PGAN and stores the output images in the variable generatedImages (Line 20).

On Lines 23-25, we use the make_grid() function of the torchvision.utils package to arrange our images in a grid. The function takes as arguments the tensor of images to be plotted (i.e., generatedImages), the number of images displayed in a single row (i.e., config.NUM_IMAGES), and two Boolean parameters, scale_each and normalize, which scale and normalize the image values in the tensor.
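To see how nrow shapes the output, the plain-Python sketch below reproduces the layout arithmetic of make_grid(), assuming its default padding of 2 pixels between and around images and 3-channel inputs. It computes only the shape of the resulting grid tensor, not the pixels, and make_grid_shape is a hypothetical helper.

```python
import math

def make_grid_shape(num_images, channels, height, width, nrow=8, padding=2):
    """Shape of the (C, H, W) tensor make_grid returns for a batch of
    (channels, height, width) images (3-channel input assumed)."""
    xmaps = min(nrow, num_images)                 # images per row
    ymaps = math.ceil(num_images / xmaps)         # number of rows
    grid_h = (height + padding) * ymaps + padding
    grid_w = (width + padding) * xmaps + padding
    return (channels, grid_h, grid_w)

# eight 512x512 RGB faces laid out in a single row (nrow=NUM_IMAGES)
print(make_grid_shape(8, 3, 512, 512, nrow=8))
# the same batch with four images per row
print(make_grid_shape(8, 3, 512, 512, nrow=4))
```

The permute(1, 2, 0) call in predict.py then reorders this (C, H, W) grid into the (H, W, C) layout that imshow() expects.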

Notice that we clamp the generatedImages tensor to the range [-1, 1] before passing it to the make_grid function. Furthermore, setting normalize=True shifts the pixel values to the range [0, 1], and setting scale_each=True ensures that each image is scaled using its own minimum and maximum values rather than those computed over the entire batch. Together, these settings put the pixel values in the [0, 1] range that imshow() and save_image() expect for floating-point images, which gives the best visualization of the results.
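The effect of clamping followed by normalize=True and scale_each=True can be approximated in NumPy as a per-image min-max rescaling. This is a sketch of the idea, not torchvision's code; the small eps guard against a zero range is our assumption, and clamp_and_scale_each is a hypothetical name.

```python
import numpy as np

def clamp_and_scale_each(images, eps=1e-5):
    """Approximate clamp(-1, 1) followed by make_grid(normalize=True,
    scale_each=True): each image is min-max rescaled by its own range."""
    images = np.clip(images, -1.0, 1.0)
    out = np.empty_like(images)
    for i, img in enumerate(images):
        lo, hi = img.min(), img.max()
        out[i] = (img - lo) / max(hi - lo, eps)
    return out

rng = np.random.default_rng(0)
# two fake generator outputs with very different value ranges
batch = np.stack([rng.uniform(-1.3, 1.3, (3, 4, 4)),
                  rng.uniform(-0.2, 0.2, (3, 4, 4))])
scaled = clamp_and_scale_each(batch)
print(scaled.min(axis=(1, 2, 3)), scaled.max(axis=(1, 2, 3)))
```

With scale_each=False, a single minimum and maximum would be computed over the whole batch instead, so a low-contrast image like the second one would be squeezed into a narrow band of gray values.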

Finally, on Lines 26 and 27, we set the size of the figure and display our generated images using the imshow() function of matplotlib. We save our outputs at the location defined by config.SAVE_IMG_PATH, on Lines 30-32, using the save_image() function of the torchvision.utils module as shown.

Figure 5 shows the generated images from the PGAN model. Notice that even at a high resolution of 512×512, the model can capture the high-level structure and fine details (e.g., complexion, gender, hairstyle, expression, etc.) of the face images that determine the semantics of a particular face.

Walk the Latent Space

We have discussed how the progressive growing technique helps PGAN capture the high-level structure and fine details in images and generate high-resolution faces. A potential way to further analyze how well the GAN has captured the semantics of the data distribution is to walk the latent space of the network and analyze the transition in generated images. For a network that has successfully captured the underlying data distribution, the transition between images is expected to be smooth. Specifically, in this case, the estimated probability distribution function will be smooth with appropriate probability mass evenly distributed over the images of the distribution.

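The pre-trained PGAN network is a deterministic mapping from points in the noise space to the image space. This simply means that if a specific noise vector (say, $z$) is input to the pre-trained PGAN multiple times, it will always output the same image corresponding to that particular noise vector.

The above fact implies that, given two different noise vectors, $z_1$ and $z_2$, we get two separate output images. In addition, every point on the line joining $z_1$ and $z_2$ in the noise space has a corresponding image in the output image space.

Thus, to analyze the transition between images in the output image space of the PGAN, we walk in the noise latent space. We can take 2 random noise vectors, $z_1$ and $z_2$, and obtain points on the line joining them with a simple linear interpolation:

$$z_{\text{int}} = (1 - \lambda)\, z_1 + \lambda\, z_2$$

where $\lambda$ is a number in the range $[0, 1]$.

Finally, we can pass each interpolated vector $z_{\text{int}}$ through the PGAN to obtain the corresponding image, and sweep along the line from $z_1$ to $z_2$ by varying $\lambda$ in the range $[0, 1]$.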
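The interpolation equation translates directly into NumPy. In this sketch, which assumes 512-dimensional noise vectors as used by the celebAHQ-512 model, broadcasting computes every z_int for a list of λ values at once; interpolate_latents is a hypothetical helper, not part of the Torch Hub PGAN API.

```python
import numpy as np

def interpolate_latents(z1, z2, lams):
    """Return one row per lambda: z_int = (1 - lambda) * z1 + lambda * z2."""
    lams = np.asarray(lams, dtype=z1.dtype)[:, None]   # shape (n, 1) for broadcasting
    return (1.0 - lams) * z1[None, :] + lams * z2[None, :]

rng = np.random.default_rng(7)
z1, z2 = rng.standard_normal((2, 512))     # two 512-d noise vectors

lams = np.linspace(0.0, 1.0, 8)            # 8 evenly spaced lambdas
path = interpolate_latents(z1, z2, lams)
print(path.shape)
```

The result is an (n, 512) array, so all the interpolated vectors could be sent through the generator as a single batch, just as predict.py does with its eight noise vectors, rather than one vector at a time.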

Next, let’s open the analyze.py file and implement this in code to analyze the structure learned by our PGAN.

    # USAGE
    # python analyze.py

    # import the necessary packages
    from pyimagesearch import config
    import matplotlib.pyplot as plt
    import torchvision
    import numpy as np
    import torch

    def interpolate(n):
        # sample the two noise vectors z1 and z2
        (noise, _) = model.buildNoiseData(2)

        # define the step size and sample numbers in the range [0, 1) at
        # step intervals
        step = 1 / n
        lam = list(np.arange(0, 1, step))

        # initialize a tensor for storing interpolated images
        interpolatedImages = torch.zeros([n, 3, 512, 512])

        # iterate over each value of lam
        for i in range(n):
            # compute interpolated z
            zInt = (1 - lam[i]) * noise[0] + lam[i] * noise[1]

            # generate the corresponding image in the image space
            with torch.no_grad():
                outputImage = model.test(zInt.reshape(-1, 512))
                interpolatedImages[i] = outputImage

        # return the interpolated images
        return interpolatedImages

    # load the pre-trained PGAN model
    model = torch.hub.load("facebookresearch/pytorch_GAN_zoo:hub",
        "PGAN", model_name="celebAHQ-512", pretrained=True, useGPU=config.USE_GPU)

    # call the interpolate function
    interpolatedImages = interpolate(config.NUM_INTERPOLATION)

    # visualize output images
    grid = torchvision.utils.make_grid(
        interpolatedImages.clamp(min=-1, max=1), nrow=config.NUM_INTERPOLATION,
        scale_each=True, normalize=True)
    plt.figure(figsize=(20, 20))
    plt.imshow(grid.permute(1, 2, 0).cpu().numpy())

    # save visualizations
    torchvision.utils.save_image(interpolatedImages.clamp(min=-1, max=1),
        config.INTERPOLATE_PLOT_PATH, nrow=config.NUM_INTERPOLATION,
        scale_each=True, normalize=True)
    plt.show()

We import the necessary packages on Lines 5-9, as we had seen earlier.

On Lines 11-34, we define our interpolate function, which takes as input a parameter n (the number of points we want to sample on the line joining the two noise vectors) and returns the interpolated images output by our GAN model (i.e., interpolatedImages).

On Line 13, we sample the two noise vectors that we will use for our interpolation using the built-in buildNoiseData() function. Then, on Line 17, we define the step size (i.e., 1/n, since we want to sample points uniformly in an interval of size 1), and on Line 18, we use the np.arange() function to sample points (i.e., our $\lambda$ values) in the interval [0, 1) at intervals defined by step. Notice that the np.arange() function takes as input the starting and ending points of the interval we want to sample in (i.e., 0 and 1) and the step size (i.e., step).
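One consequence of using np.arange() is easy to miss: its end point is excluded, so the λ values stop at 1 − 1/n and the last frame of the walk comes close to, but never exactly reaches, the image for the second noise vector. The short check below makes this concrete for n = 8; np.linspace() is shown only as an alternative if you want both end points, not as what analyze.py does.

```python
import numpy as np

n = 8                      # config.NUM_INTERPOLATION
step = 1 / n

lam_arange = np.arange(0, 1, step)       # what analyze.py uses: end point excluded
lam_linspace = np.linspace(0, 1, n)      # alternative: both end points included

print(lam_arange)
print(lam_linspace)
```

Both give eight values starting at 0; the difference is only whether the last one lands on 0.875 or on 1.0.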

On Line 21, we initialize a tensor (i.e., interpolatedImages) to store the output interpolations in the image space from our GAN.

Starting on Line 24, we iterate over each value in our list of lambdas defined by lam to get the corresponding interpolations. On Line 26, we use the ith value in the list lam to get the corresponding interpolated point on the line joining $z_1$ (i.e., the noise[0] vector) and $z_2$ (i.e., the noise[1] vector). Then, on Lines 29-31, we invoke the torch.no_grad() mode as discussed earlier, reshape zInt into a batch containing a single 512-dimensional vector, pass it through the PGAN model to get the corresponding output image (stored in the outputImage variable), and copy that image to the ith index of our interpolatedImages tensor, which collects all interpolations in the image space in sequence. Finally, on Line 34, we return the interpolatedImages tensor.

Now that we have defined our interpolate() function, we are ready to walk the latent space and analyze the corresponding transitions in the image space.

On Lines 37 and 38, we load our pre-trained PGAN model from Torch Hub, as discussed earlier.

On Line 41, we pass the config.NUM_INTERPOLATION parameter (which defines the number of points we want to sample on the line joining the two noise vectors) to our interpolate() function and store the corresponding outputs in the interpolatedImages variable.

Finally, on Lines 44-48, we use the make_grid() function and matplotlib library to display our interpolated outputs, as we had seen earlier in the post. We save our visualizations at the location defined by config.INTERPOLATE_PLOT_PATH using the save_image() function of the torchvision.utils module as discussed in detail earlier (Lines 51-53).

Figure 6 shows the corresponding outputs in the image space of the PGAN when the interpolated points on the line joining $z_1$ and $z_2$ are passed through the generator.


Summary

In this tutorial, we discussed the architectural details of the Progressive GAN network that enable it to generate high-resolution images. Specifically, we discussed the progressive growing paradigm, which allows the PGAN generator to gradually learn the fine details integral to generating images at high resolution. Moreover, we learned how Torch Hub is used to import pre-trained GAN models and seamlessly integrate them into our deep learning projects. Finally, we saw the PGAN network in action by visualizing generated images and walking through its latent space.

Citation Information

Chandhok, S. “Torch Hub Series #4: PGAN,” PyImageSearch, 2022, https://hcl.pyimagesearch.com/2022/01/10/torch-hub-series-4-pgan-model-on-gan/

    @article{shivam_2022_THS4,
      author = {Shivam Chandhok},
      title = {Torch Hub Series \#4: {PGAN}},
      journal = {PyImageSearch},
      year = {2022},
      note = {https://hcl.pyimagesearch.com/2022/01/10/torch-hub-series-4-pgan-model-on-gan/},
    }
